The risks of the “pathogen research enterprise”

By Malcolm Dando | March 4, 2009

In one of his wonderfully funny children’s books, Richard Scarry turned the idea of the clever fox on its head with his foxy character Mr. Fixit, the repairman from hell who wrecks everything he is called upon to repair. Rather unkindly, perhaps, it was Mr. Fixit who came to mind when I first heard that earlier this month the U.S. Army had stopped work at its Fort Detrick biodefense laboratories until it could audit what materials were in the labs. In expanding its research to protect against a perceived increase in the threat of bioterrorism, had the U.S. military inadvertently created undue risk? And how might these risks compare with the original biowarfare and bioterrorism risks against which the defenses are being erected?

In trying to answer these questions, we need to have a reasonable definition of what we mean by risk. University of Sussex researcher Andrew Stirling usefully clarified the concept by examining the uncertainties of particular outcomes and of the probability that they will occur. Stirling noted that four possible combinations could arise: outcome known, probability known; outcome known, probability unknown; outcome unknown, probability known; outcome unknown, probability unknown. The first possible combination, “in which past experience or scientific models are judged to foster high confidence in both the possible outcomes and their respective probabilities,” constitutes the formal condition of risk and “it is under these conditions that conventional techniques of risk assessment offer a scientifically rigorous approach.”

Stirling labels the other possible combinations as uncertainty (when we know the outcome but not the probability), ambiguity (when we know the probability but not the outcome), and ignorance (when we can characterize neither the outcome nor the probability). Under these latter three conditions, conventional science-based risk assessment cannot reasonably be applied. Indeed, the National Research Council’s Committee on Methodological Improvements to the Department of Homeland Security’s Biological Agent Risk Analysis criticized the department’s Bioterrorism Risk Assessment for claiming scientific precision about threats whose outcomes and probabilities are unknown. Stirling acknowledges that the sharply defined, logically separate conditions he identifies are rarely found in the real world; even so, the distinctions matter when examining the details of a particular issue, even if we use the term “risk” for convenience in general discussion.

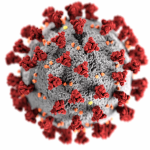

Health law professor Michael Baram aptly framed the discussion of these risks by labeling the large-scale expansion of work on dangerous pathogens, particularly in the United States, the “pathogen research enterprise.” While not denying that the research could achieve some socially desirable outcomes, he stressed the risks involved: “Because the national research enterprise involves producing samples of pathogens, shipping them to hundreds of laboratories, storing and handling of the pathogens by researchers, and using the pathogens in experiments which involve infecting a multitude of animals, there are many opportunities for mishaps and accidental exposures within the labs which infect researchers and other workers, and for accidental releases into host communities which endanger the public.”

Baram suggests that the release of a highly contagious pathogen could “spiral into a devastating epidemic of lethal disease at regional, national, or even global levels,” and he defines two classes of risk scenarios. In a “primary release,” officials identify an event at a lab, and emergency responses quickly deal with the problem. In a “secondary release,” the response is slower. For example, if a lab worker is infected and leaves the lab unaware of the infection, emergency responses are likely to kick in only after the worker becomes ill. In such a secondary release, the danger to the public could be much greater. Despite ongoing efforts to improve the management and regulation of the burgeoning U.S. pathogen research enterprise, Baram documents the increasing incidence of these types of accidents.

So, yes, efforts to improve defense measures against bioterrorism (and biowarfare) involve some level of risk that the pathogen research enterprise will have an inadvertent, malign mishap. But these aren’t the only dangers arising from the expansion of biodefense work on dangerous pathogens. In order to see why, we need to look more carefully at what is being defended against.

Despite the recent attention given to bioterrorism in the media and policy-making circles, the historical record holds little unequivocal evidence of biological attacks in the era since scientific knowledge of the nature of infectious diseases became available. However, bioterrorism could become an increasing threat in coming decades. The changing nature of warfare (toward messy internal conflicts, ethnic cleansing, and difficult outsider interventions, or what Rupert Smith calls “war among the people”) could well make chemical and biological weapons more attractive to those involved. Moreover, the biotech revolution increases both the range of possible attacks and the ease with which unskilled people could obtain the necessary agents. The leakage of agents or knowledge from state-level biodefense and offense programs is another possibility.

The historical record shows quite clearly that there was a series of major state-level offensive bioweapons programs in the last century and that biological weapons developed in some of these programs were used against animals, humans, and plants. Some state-level offensive programs might remain in place today despite the prohibitions embodied in the Biological and Toxin Weapons Convention and the Chemical Weapons Convention. We also know that difficulties in gathering intelligence on bioweapons programs led to misperceptions and to states ramping up their programs in response to what were later found to be inflated threats.

It is in this sense that the large-scale increase in biodefense work, and the pathogen research enterprise as a whole, embodies further risk. Without careful efforts to ensure transparency, benignly intended research could be misread abroad and trigger responses in other countries that could, in turn, be misunderstood. Any unintended biological arms race would undoubtedly lead to the buildup of more state-level offensive biological weapons programs, with untold risks.

Consider the scenario set out by Nathan Freier, a senior fellow at the Center for Strategic and International Studies, in his 2008 study, “Known Unknowns: Unconventional ‘Strategic Shocks’ in Defense Strategy Development.” Freier supposes that a state of significance with employable weapons of mass destruction capability, defined as “sizable nuclear or biological,” falls apart. The ensuing breakdown of order inflames domestic and foreign conflicts. And all of this occurs in “an environment where the surety of nuclear or biological weapons is in question, critical strategic resources are at risk, and/or the core interests of adjacent states are threatened by spill over.”

Freier’s paper is a powerful argument for rigorously analyzing and preparing for such possible strategic shocks. Yet, as I read the scenario, I couldn’t help but imagine how a state’s own actions could inadvertently add employable biological weapons to the mix. It would be an outcome of which Mr. Fixit would be proud!
