The possibility and risks of artificial general intelligence

By Émile P. Torres | April 29, 2019

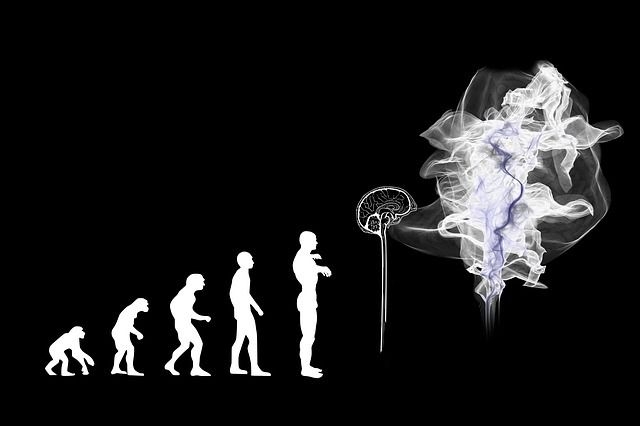

This article offers a survey of why artificial general intelligence (AGI) could pose an unprecedented threat to human survival on Earth. If we fail to get the “control problem” right before the first AGI is created, the default outcome could be total human annihilation. It follows that since an AI arms race would almost certainly compromise safety precautions during the AGI research and development phase, an arms race could prove fatal not just to states but for the entire human species. In a phrase, an AI arms race would be profoundly foolish. It could compromise the entire future of humanity.

Together, we make the world safer.

The Bulletin elevates expert voices above the noise. But as an independent nonprofit organization, our operations depend on the support of readers like you. Help us continue to deliver quality journalism that holds leaders accountable. Your support of our work at any level is important. In return, we promise our coverage will be understandable, influential, vigilant, solution-oriented, and fair-minded. Together we can make a difference.