How to make AI less racist

By Walter Scheirer | August 9, 2020

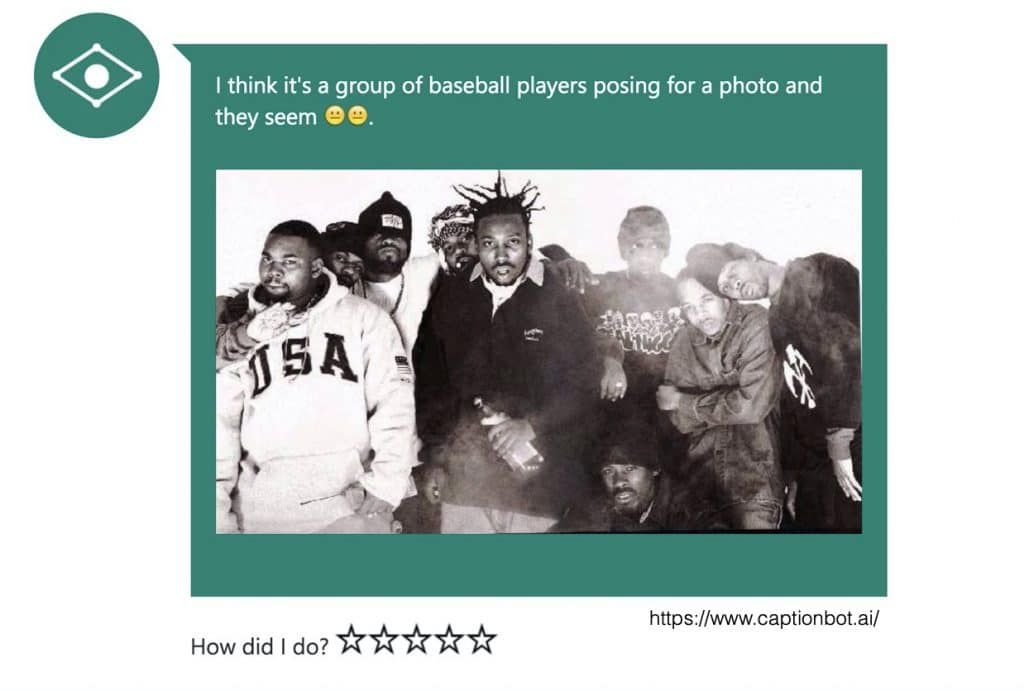

CaptionBot, an AI program that applies captions to images, mistakenly described the members of the hip hop group the Wu-Tang Clan as a group of baseball players. This type of mistake often occurs because of the way certain demographics are represented in the data used to train an AI system. Credit: Walter Scheirer/CaptionBot.

In 2006, a trio of artificial intelligence (AI) researchers published a useful resource for their community, a massive dataset consisting of images representing over 50,000 different noun categories that had been automatically downloaded from the internet. The dataset, dubbed Tiny Images, was an early example of the “big data” strategy in AI research, whereby an algorithm is shown as many examples as possible of what it is trying to learn in order for it to better understand a given task, like recognizing objects in a photo. By uploading small 32-by-32 pixel images, the Tiny Images researchers were relying on the ability of computers to exhibit the same “remarkable tolerance of the human visual system” and recognize even degraded images. They also, however, may have unintentionally succeeded in recreating another human characteristic in AI systems: racial and gender bias.

A pre-print academic paper revealed that Tiny Images included several image categories labeled with racist and misogynistic slurs. For instance, a derogatory term for sex workers was one category; a slur for women, another. There was also a category of images labeled with a racist term for Black people. Any AI system trained on the dataset might recreate the biased categories as it sorted and identified objects. Tiny Images was such a large dataset, and its images so small, that it would have been a herculean, perhaps impossible, task to perform quality control to remove the offensive category labels and images. The researchers, Antonio Torralba, Rob Fergus, and Bill Freeman, made waves in the artificial intelligence world when they announced earlier this summer that they would be pulling the whole thing from public use.

“Biases, offensive and prejudicial images, and derogatory terminology alienates an important part of our community — precisely those that we are making efforts to include,” Torralba, Fergus, and Freeman wrote. “It also contributes to harmful biases in AI systems trained on such data.”

Developers used the Tiny Images data as raw material to train AI algorithms that computers use to solve visual recognition problems and recognize people, places, and things. Smartphone photo apps, for instance, use similar algorithms to automatically identify photos of skylines or beaches in your collection. The dataset was just one of many used in AI development.

While the pre-print’s shocking findings about Tiny Images were troubling in their own right, the issues the paper highlighted are also indicative of a larger problem facing all AI researchers: the many ways in which bias can creep into the development cycle.

The big data era and bias in AI training data. The internet reached an inflection point in 2008 with the introduction of Apple’s iPhone 3G. This was the first smartphone with viable processing power for general internet use, and, importantly, it included a digital camera that was available to the user at a moment’s notice. Many manufacturers followed Apple’s lead with similar competing devices, bringing the entire world online for the first time. A software innovation that appeared with these smartphones was the ability of an app running on a phone to easily share photos from the camera to privately owned cloud storage.

This was the launch of the era of big data for AI development, and large technology companies had an additional motive beyond creating a good user experience: By concentrating a staggering amount of user-generated content within their own platforms, companies could exploit the vast repositories of data they collected to develop other products. The prevailing wisdom in AI product development has been that if enough data is collected, any problem involving intelligence can be solved. This idea has been extended to everything from face recognition to self-driving cars, and is the dominant strategy for attempting to replicate the competencies of the human brain in a computer.

But using big data for AI development has been problematic in practice.

The datasets used for AI product development now contain millions of images, and nobody knows exactly what is in them. They are too large to examine exhaustively by hand. These datasets can be labeled or unlabeled. If the data is labeled (as was the case with Tiny Images), those labels can be tags taken from the original source, new labels assigned by volunteers or paid annotators, or labels generated automatically by an algorithm trained to label data.

Dataset labels can be naturally bad, reflecting the biases and outright malice of the humans who annotated them, or artificially bad, if the mistakes are made by algorithms. A dataset could even be poisoned by malicious actors intending to create problems for any algorithms that make use of it. Additionally, some datasets contain unlabeled data, but these datasets, used in conjunction with algorithms that are designed to explore a problem on their own, aren’t the antidote for poorly labeled data. As is also the case for labeled datasets, the information contained within unlabeled datasets can be a mismatch with the real world, for instance when certain demographics are under- or over-represented in the data.
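One rough way to check for this kind of mismatch is to compare each group's share of a dataset with its share of a reference population. The sketch below is illustrative only: the function name `representation_ratio`, the group names, and the counts are all hypothetical and do not come from any dataset discussed here.

```python
from collections import Counter


def representation_ratio(dataset_groups, reference_shares):
    """Return each group's dataset share divided by its reference share.

    A ratio above 1 suggests over-representation; below 1, under-representation.
    """
    counts = Counter(dataset_groups)
    total = sum(counts.values())
    return {
        group: (counts.get(group, 0) / total) / share
        for group, share in reference_shares.items()
    }


# Hypothetical group annotations for four images, and a hypothetical
# reference population split evenly between two groups.
dataset_groups = ["group_a", "group_a", "group_a", "group_b"]
reference_shares = {"group_a": 0.5, "group_b": 0.5}

ratios = representation_ratio(dataset_groups, reference_shares)
for group, ratio in ratios.items():
    print(f"{group}: {ratio:.2f}x its reference share")
```

A check like this depends on having trustworthy group annotations and a sensible reference population, and neither can be taken for granted with data scraped from the internet.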

In 2016, Microsoft released a web app called CaptionBot that automatically added captions to images. The app was meant to be a successful demonstration of the company’s computer vision API for third-party developers, but even under normal use, it could make some dubious mistakes. In one notable instance, it mislabeled a photo of the hip hop group Wu-Tang Clan as a group of baseball players. This type of mistake can occur when a bias exists in the dataset for a specific demographic. For example, Black athletes are often overrepresented in datasets assembled from public content on the internet. The prominent Labeled Faces in the Wild dataset for face recognition research contains this form of bias.

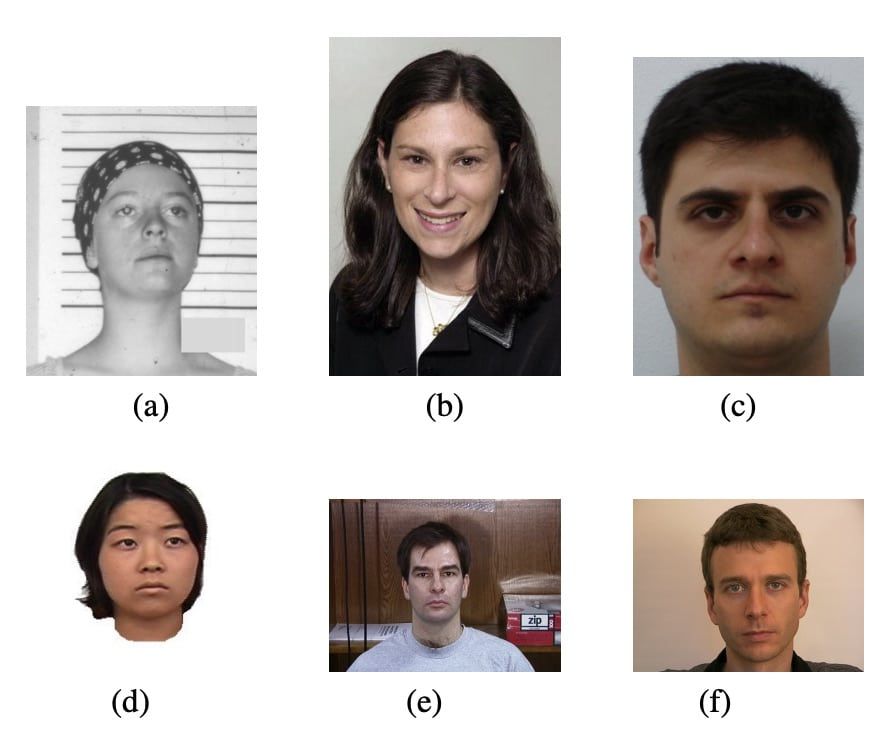

More problematic examples of this phenomenon have surfaced. Joy Buolamwini, a scholar at the MIT Media Lab, and Timnit Gebru, a researcher at Google, have shown that commonly used datasets for face recognition algorithm development are overwhelmingly composed of lighter skinned faces, causing face recognition algorithms to have noticeable disparities in matching accuracy between darker and lighter skin tones. Buolamwini, working with Deborah Raji, a student in her laboratory, has gone on to demonstrate this same problem in Amazon’s Rekognition face analysis platform. These studies have prompted IBM to announce it would exit the facial recognition and analysis market and Amazon and Microsoft to halt sales of facial recognition products to law enforcement agencies, which use the technology to identify suspects.
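Disparities of this kind only become visible when accuracy is reported per group rather than as a single overall number. The following is a minimal sketch of that idea, not the methodology used in the studies above; the function names, group labels, and evaluation records are invented for illustration.

```python
from collections import defaultdict


def accuracy_by_group(records):
    """records: iterable of (group, predicted, actual) tuples.

    Returns a dict mapping each group to its fraction of correct predictions.
    """
    correct = defaultdict(int)
    total = defaultdict(int)
    for group, predicted, actual in records:
        total[group] += 1
        if predicted == actual:
            correct[group] += 1
    return {group: correct[group] / total[group] for group in total}


def accuracy_gap(per_group):
    """Difference between the best- and worst-served groups."""
    return max(per_group.values()) - min(per_group.values())


# Made-up face-matching results: (group, predicted identity, true identity).
records = [
    ("lighter", "id1", "id1"),
    ("lighter", "id2", "id2"),
    ("lighter", "id3", "id3"),
    ("lighter", "id4", "id4"),
    ("darker", "id5", "id5"),
    ("darker", "id9", "id6"),
    ("darker", "id7", "id7"),
    ("darker", "id2", "id8"),
]

per_group = accuracy_by_group(records)
print(per_group)
print("gap:", accuracy_gap(per_group))
```

In this toy data the overall accuracy is 75 percent, which hides the fact that one group is matched correctly every time and the other only half the time.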

The manifestation of bias often begins with the choice of an application, and further crops up in the design and implementation of an algorithm — well before any training data is provided to it. For instance, while it’s not possible to develop an algorithm to predict criminality based on a person’s face, algorithms can seemingly produce accurate results on this task. This is because the datasets they are trained on and evaluated with have obvious biases, such as mugshot photos that contain easily identifiable technical artifacts specific to how booking photos are taken. If a development team believes the impossible to be possible, then the damage is already done before any tainted data makes its way into the system.

There are a lot of questions AI developers should consider before launching a project. Gebru and Emily Denton, also a researcher at Google, astutely point out in a recent tutorial they presented at the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition that interdisciplinary collaboration is a good path forward for the AI development cycle. This includes engagement with marginalized groups in a manner that fosters social justice, and dialogue with experts in fields outside of computer science. Are there unintended consequences of the work being undertaken? Will the proposed application cause harm? Does a non-data driven perspective reveal that what is being attempted is impossible? These are some of the questions that need to be asked by development teams working on AI technologies.

So, was it a good thing for the research team responsible for Tiny Images to issue a complete retraction of the dataset? This is a difficult question. At the time of this writing, the published paper on the dataset has collected over 1,700 citations according to Google Scholar, and the dataset it describes is likely still being used by those who have it in their possession. The retraction of Tiny Images creates a secondary problem for researchers still working with algorithms developed with Tiny Images, many of which are not flawed and are worthy of further study. The ability of researchers to replicate a study is a scientific necessity, and removing the necessary data makes replication impossible. And an outright ban on technologies like face recognition may not be a good idea. While AI algorithms can be anti-social in contexts like predictive policing, they can be socially acceptable in others, such as human-computer interaction.

Perhaps one way to address this is to issue a revision of a dataset that removes any problematic information, notes what has been removed from the previous version, and provides an explanation for why the revision was necessary. This may not completely remove the bias within a dataset, especially if it is very large, but it is a way to address specific instances of bias as they are discovered.
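As a rough illustration of what such a revision could look like in practice, the sketch below filters out samples carrying flagged labels and records what was removed and why. Everything in it is an assumption made for the example: the `Revision` record, the `revise_dataset` function, the sample data, and the placeholder label are hypothetical and do not describe how Tiny Images or any other dataset is actually maintained.

```python
from dataclasses import dataclass


@dataclass
class Revision:
    """A changelog entry describing one revision of a dataset."""
    version: str
    reason: str
    removed_labels: list
    removed_count: int


def revise_dataset(samples, flagged_labels, version, reason):
    """samples: list of (image_id, label) pairs.

    Returns the samples that survive the revision, plus a Revision note
    recording which labels were removed and how many samples they covered.
    """
    flagged = set(flagged_labels)
    kept = [s for s in samples if s[1] not in flagged]
    removed = [s for s in samples if s[1] in flagged]
    note = Revision(
        version=version,
        reason=reason,
        removed_labels=sorted({label for _, label in removed}),
        removed_count=len(removed),
    )
    return kept, note


# Hypothetical samples; "flagged_label" stands in for an offensive category.
samples = [
    ("img1", "beach"),
    ("img2", "flagged_label"),
    ("img3", "skyline"),
]

kept, note = revise_dataset(
    samples,
    flagged_labels=["flagged_label"],
    version="1.1",
    reason="Removed a category labeled with a derogatory term.",
)
print(kept)
print(note)
```

The code is the easy part. Deciding which labels to flag still requires human judgment, and for very large datasets that review remains the bottleneck.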

Bias in AI is not an easy problem to eliminate, and developers need to react to it in a constructive way. With effective mitigation strategies, there is certainly still a role for datasets in this type of work.

Keywords: AI, Amazon, IBM, Microsoft, bias, face recognition, image recognition

Topics: Artificial Intelligence