Keeping humans in the loop is not enough to make AI safe for nuclear weapons

By Peter Rautenbach | February 16, 2023

The Aegis Combat System’s powerful computers are capable of tracking enemy targets and autonomously deciding whether to destroy them. In this photo, an Aegis-class destroyer launches one of its own missiles to intercept another missile during a 2009 exercise. US Navy photo

Artificial Intelligence (AI) systems suffer from a myriad of unique technical problems that could directly raise the risk of inadvertent nuclear weapons use. To control these issues, the United States and the United Kingdom have committed to keeping humans in the decision-making loop. However, the greatest danger may not lie in the technology itself, but rather in its impact on the humans interacting with it.

Keeping humans in the loop is not enough to make AI systems safe. In fact, relying on this safeguard could result in a hidden increase of risk.

Deep learning. Automation grants authority to machine systems to act alongside humans or in their place. To varying degrees, automation has played a role in deterrence and command-and-control systems since the dawn of nuclear weapons, but advanced AI would take automation to the next level.

AI systems are able to solve problems or perform functions that traditionally required human levels of cognition. Machine learning, a subset of AI, is the overarching driver behind the current surge in intelligent machines. Deep learning, an advanced machine-learning process, uses layers of artificial neural networks to recognize patterns and to assess and manage large sets of unstructured or unlabeled data.

This ability has made it possible to create driverless cars, virtual assistants, and fraud-detection services.

While no state is openly attempting to fully automate its nuclear weapons systems, integrating AI with command systems seems promising and even unavoidable. AI could augment early-warning systems, provide strategic analytical support and assessment, strengthen communication systems, and provide a form of forecasting similar to predictive policing. But AI integration comes with potential risks as well as benefits. Here are the good, bad, and ugly sides of this rapidly evolving technology:

The good. Integrating AI with command systems offers enticing strategic advantages for states. AI systems can perform their assigned functions at blinding speeds, drastically outpacing human operators without experiencing fatigue or diminishing accuracy. They can comb through enormous amounts of data to find patterns and connections between seemingly unrelated data points.

AI could also make deterrence systems safer. Humans have flaws and make mistakes. Automation could reduce the number of nuclear close calls that are related to human error, cognitive bias, and fatigue. Furthermore, AI can reduce uncertainty and improve decision making by protecting communication and extending the time available to decision makers responding to alleged launch detections.

Public statements by officials including Gen. John Hyten, former commander of US Strategic Command, and Elizabeth Durham-Ruiz, former director of US Strategic Command’s Nuclear Command, Control, and Communications Enterprise Center, underscore the role that AI could play in nuclear command systems in the near future. This support for AI integration with nuclear command is happening at the same time as a massive modernization of the US nuclear command apparatus. In the near future, experts foresee AI integration being used to improve the capabilities of early-warning and surveillance systems, comb through large data sets, make predictions about enemy behavior, enhance protection against cyberattacks, and improve communications infrastructure throughout nuclear command systems.

The bad. For all its advantages, AI is not inherently superior to humans. It has significant and unique technical flaws and risks. Any integration with nuclear command systems would have to grapple with this reality and, if done improperly, could increase the chance of inadvertent nuclear use.

Complexity breeds accidents. The amount of code required in AI systems used for command and control would be immense. Errors and technical challenges would be inevitable.

It is especially difficult to address the technical challenges of AI because of the “black box” problem. AI systems are somewhat unknowable, because they effectively program themselves in ways that no human can fully understand. This innate opacity is particularly problematic in safety-critical circumstances with short time frames, which are characteristic of a nuclear crisis.

One technical challenge is that AI is “brittle”; even the most powerful and intelligent systems can break when taken into unfamiliar territory and forced to confront information they were not trained on. For example, graduate students at the University of California, Berkeley, found that the AI system they had trained to consistently beat Atari video games fell apart when they added just one or two random pixels to the screen. Given the sheer complexity of the international realm in which nuclear command systems operate, vulnerability to brittleness could easily lead to dangerous situations in which AI systems fail spectacularly—or, more dangerously, provide wrong information while appearing to work correctly.
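A toy sketch can make brittleness concrete. The example below is purely illustrative: the detector, its weights, and the 3x3 "frame" are hypothetical and hand-set, and are not drawn from the Berkeley experiment or from any real system. It shows only how a model that leans heavily on one input can flip its answer when a single pixel changes.

```python
# Toy illustration only: a hypothetical, hand-set linear "detector".
# Nothing here models a real sensor, classifier, or command system.

def classify(pixels, weights, bias):
    """Return 'threat' if the weighted pixel sum is positive, else 'benign'."""
    score = sum(p * w for p, w in zip(pixels, weights)) + bias
    return "threat" if score > 0 else "benign"

# Hypothetical weights for a 3x3 frame flattened to 9 binary pixels.
# The weight at index 7 is deliberately large, so that pixel dominates.
WEIGHTS = [2, -1, 3, -4, 1, 2, -3, 9, -2]
BIAS = -6

# A frame resembling what the detector was tuned on.
frame = [1, 0, 1, 0, 0, 0, 0, 0, 0]

# The same frame with a single stray pixel switched on.
perturbed = list(frame)
perturbed[7] = 1

original_label = classify(frame, WEIGHTS, BIAS)
perturbed_label = classify(perturbed, WEIGHTS, BIAS)
print(original_label, perturbed_label)
```

In this sketch the two frames differ by one pixel out of nine, yet the labels differ. Nothing in the output signals that the second answer is less trustworthy than the first, which is the "wrong information while appearing to work correctly" failure described above.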

A second and somewhat counterintuitive technical challenge is that AI systems are built with human-esque biases. The algorithms and code that form the foundation of allegedly “objective” autonomous systems are trained using data provided by humans. However well-meaning human programmers are, it is inevitable that they will introduce human bias into the training process. For example, recruiting algorithms that have been trained on the resumes of former successful applicants may perpetuate the bias of human hiring committees that previously underestimated female applicants.
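The recruiting example can be sketched in a few lines. This is a minimal illustration under stated assumptions: the groups "A" and "B", the historical records, and the threshold rule are all hypothetical, and real recruiting systems are far more complex. The point is only that a model fit to past decisions reproduces whatever pattern those decisions contain.

```python
from collections import defaultdict

def train(history):
    """Learn the historical hire rate per group from (group, hired) records."""
    counts = defaultdict(lambda: [0, 0])  # group -> [hired, total]
    for group, hired in history:
        counts[group][0] += int(hired)
        counts[group][1] += 1
    return {group: hired / total for group, (hired, total) in counts.items()}

def recommend(model, group, threshold=0.5):
    """Recommend a candidate if their group's past hire rate meets the threshold."""
    return model.get(group, 0.0) >= threshold

# Hypothetical past decisions: group B was hired far less often than group A.
history = (
    [("A", True)] * 8 + [("A", False)] * 2
    + [("B", True)] * 3 + [("B", False)] * 7
)

model = train(history)
print(model)
print(recommend(model, "A"), recommend(model, "B"))
```

The model never sees an individual candidate's qualifications here, only the group label, so it simply carries the earlier committees' pattern forward. Real systems rarely use group labels this directly, but proxies in the training data can have a similar effect.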

This isn’t to say that AI shouldn’t be deployed or integrated into security systems. Every technology has flaws, and the humans supported or replaced by AI are not paragons of perfection, either.

Nonetheless, these technical issues need to be anticipated and accounted for. Measures to increase the safety of AI systems can, and must, be taken to mitigate or prevent these technical issues. Commitments to keep humans in the decision-making loop will play a role in increasing safety, because human critical thinking is an important tool in controlling incredibly powerful and smart—but ultimately still limited—machine intelligences.

The ugly. Unfortunately, integrating AI with nuclear weapon command systems affects more than just the machine aspect of nuclear decision making. Alongside these technical problems come effects on the humans involved, which makes keeping them in the loop less effective than needed to ensure safety.

One key issue is the development of automation bias, by which humans become overly reliant on AI and unconsciously assume that the system is correct. This bias has been found in multiple AI applications including medical decision-support systems, flight simulators, air traffic control, and even in simulations designed to help shooters determine the most dangerous enemy targets to engage and fire on with artillery.

Not only does this increase the likelihood that technical flaws will go unnoticed, but it also represents a subconscious pre-delegation of authority to these machine intelligences.

The dangers of automation bias and pre-delegating authority were evident during the early stages of the 2003 Iraq invasion. Two out of 11 successful interceptions involving automated US Patriot missile systems were fratricides (friendly-fire incidents). Because Patriot systems were designed to shoot down tactical ballistic missiles, there was very little time between detection and decision to fire on the incoming threat. This meant that the process was largely automated, with little opportunity for a human operator to influence the targeting. The system would do most of the work, and the operator would make the final call to fire.

In the fratricides, the Patriots and their operators mistakenly identified friendly targets as enemy targets and promptly fired on them, causing the deaths of allied pilots. In addition to the technical problems with the system, and issues surrounding short decision-making time frames, the task force that reviewed the fratricide incidents found significant evidence of automation bias: The Patriot’s operators not only trusted their automated system—they were trained to trust it.

At what point do human operators become nothing more than biological extensions of an autonomous, effectively uncontrolled AI system? While it is uncertain whether a difference in training or attitude would have ultimately changed the outcome of the Patriot fratricides, an inherent trust in machines makes it much harder to prevent technical errors in AI systems. Automation bias impairs the judgment of humans in the loop.

These problems can be somewhat mitigated by training humans to recognize bias. While not a perfect solution, such training can reduce undue confidence in the abilities of AI systems.

A need for speed? Despite the dangers of bias and pre-delegation, there are good reasons to believe that states might give military AI the authority to act without real human oversight. While providing a tempting edge, this risks bringing war to “machine speed,” in which war is fought at the pace of machine thinking. In what is dubbed “hyperwar,” or “battlefield singularity” in China, military reactions and decisions could take place in nanoseconds.

This is reminiscent of American nuclear-strategy expert Thomas Schelling’s concerns about the premium on haste being the greatest danger to peace. In an effort to maintain battlefield advantage, states may race to the bottom in a mad dash that effectively pre-delegates authority to AI systems.

Many influential military figures are not opposed to pushing the human element further out of the decision-making loop. During his time as commander of NORAD, Gen. Terrence J. O’Shaughnessy stated in 2020 that “what we have to get away from is … ‘human in the loop,’ or sometimes ‘the human is the loop.’”

Giving over control to intelligent machines in extreme situations is not science fiction. The Aegis ballistic missile defense system, used onboard military vessels, is already able to function autonomously without any humans in the loop. However, that operating mode is currently reserved for military engagements in which humans on board the vessel could be overwhelmed—or what one ship’s captain described as a kill-or-be-killed, World War 3-like scenario.

As American political scientist Richard K. Betts warned in 2015, states often “stumble into [war] out of misperception, miscalculation and fear of losing if they fail to strike first.” Military AI designed to rapidly act on advantages could miss de-escalatory opportunities or function too fast for human decision makers to intervene and signal their de-escalatory intent.

Completely removing humans from the decision-making process is extreme. No state is currently calling for fully automated nuclear weapon systems. Nonetheless, key aspects of the decision-making process, or its supporting elements, could be increasingly automated. To fully take advantage of machine speed, states could purposefully remove humans from the loop at key junctions.

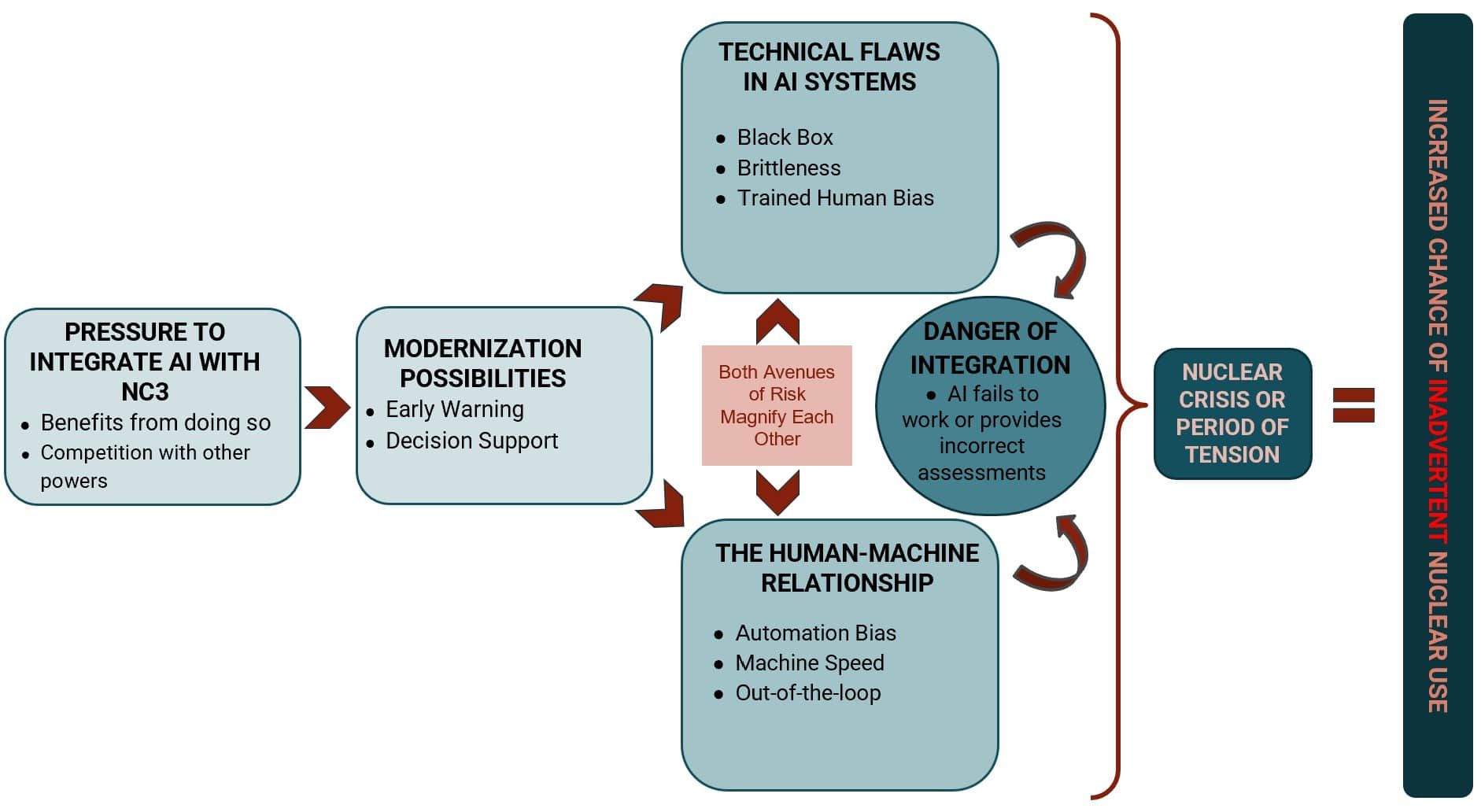

While humans are far from perfect, the unknowability of AI systems coupled with the implicit assumption of their superiority creates a dangerous environment. As shown in the accompanying chart, the integration of AI with nuclear command, control, and communication (NC3) systems could increase the chances of inadvertent nuclear weapons use.

To mitigate these risks, states such as the United States and United Kingdom—from their positions of strength—may find it easy to decry fully automated nuclear systems. However, the same cannot necessarily be said for other nuclear powers. A fledgling nuclear weapon state surrounded by immensely powerful enemies could view fully automated systems as its only opportunity for an effective deterrent against the prospect of a lightning-fast first strike.

Even for currently secure states, emerging delivery systems like fractional orbital bombardment systems could bypass early warning altogether and severely shrink the already critically short decision-making time frame. As the actual and perceived speed of war increases, the question, as James Johnson, the author of Artificial Intelligence and the Future of Warfare, recently put it, is “perhaps less whether nuclear-armed states will adopt AI technology into the nuclear enterprise, but rather by whom, when, and to what degree.”

Credible commitments to keep humans in the loop are important signaling tools and provide one way to navigate the technical problems of AI systems. Nonetheless, in the face of changing human-machine relations and AI impacts on human decision making, these commitments are not enough. Changes to nuclear doctrine, policy, and training—alongside workable technical solutions and heavy vetting—will be required to prevent the inadvertent use of nuclear weapons in the age of intelligent machines.

Editor’s note: This article is an adaptation of a paper presented at the International Student/Young Pugwash (ISYP) Third Nuclear Age conference in November 2022. Selected participants had the opportunity to submit their work for publication by the Bulletin, which was one of ISYP’s partners for the conference.

Keywords: automation bias, humans in the loop, nuclear command

Topics: Artificial Intelligence, Nuclear Weapons, Opinion, Voices of Tomorrow