Formal risk assessments and nuclear arms control: exploring the value of modern methodologies

A model hypersonic craft undergoing tests. (NASA / DOD)


Numerous technological, geostrategic, economic, and demographic changes challenge the stability of the international-security architecture and its arms-control structures. Factors leading to a reexamination of arms-control strategies include the post-post-Cold War environment, an increase in the number and diversity of international actors that have (or could have) nuclear capabilities, and new weapons capabilities. Information warfare, hybrid warfare, cyber operations, dependence on fragile space-based capabilities, hypersonic and stealth delivery systems, speed-of-light technologies, and even the deliberate violation of previously agreed rules as a means of signaling and intimidation are all accelerating the erosion of international-security norms. Hypersonic boost-glide vehicles (HBGVs) constitute one of many such challenges. They make an interesting case study because they can carry either nuclear or non-nuclear payloads and combine the high speed of ballistic missiles with the maneuverability of cruise missiles. Their hybrid performance and multi-mission role complicate missile defense and further illustrate the complexity of the overall security situation.

Comparative risk is always a major consideration in arms control, but formal risk assessments are generally not performed, despite decades of advancement in risk-analysis methods that have been applied successfully in other fields (e.g., nuclear reactors). Most assessments of risk in nuclear arms control rely on intuitive and experiential methods, which can produce highly variable results, especially given that arms-control initiatives are intentionally competitive or even confrontational (and not purely cooperative), and different actors may have widely different perceptions of risk. Here we explore the application of formal risk tools to arms-control issues, looking at how arms-control risk assessments related to HBGVs or other advanced weapons could have a positive impact on future negotiations and treaties, reducing the likelihood and consequences of war, especially nuclear war.

Analyzing risk

Risk is generally understood to encompass both likelihood and harm. An outcome that is guaranteed to occur may not be considered risky even if it is undesirable, while a prospect with uncertain outcomes may not be viewed as risky if all outcomes are favorable (e.g., a lottery in which the only uncertainty is the size of a prize). More specifically, “risk” is often defined (National Research Council, 2010) as a function of threat (potentially adverse event), vulnerability (the chance that the event results in harm), and consequence (the extent or nature of the harm).
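One common way to operationalize this definition, assumed here purely for illustration, is the product R = T × V × C. The Python sketch below scores two hypothetical scenarios with made-up inputs; the class and function names are illustrative, and other functional forms of the threat-vulnerability-consequence relationship are possible.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Scenario:
    """One adverse event scored on the three components in the NRC definition."""
    name: str
    threat: float         # likelihood that the adverse event occurs (e.g., per year)
    vulnerability: float  # probability that the event, if it occurs, causes harm
    consequence: float    # magnitude of harm, in analyst-chosen units


def risk(s: Scenario) -> float:
    """Assumed multiplicative form R = T * V * C (one convention among several)."""
    if not 0.0 <= s.vulnerability <= 1.0:
        raise ValueError("vulnerability must be a probability in [0, 1]")
    return s.threat * s.vulnerability * s.consequence


# Hypothetical placeholder inputs, chosen only to show the arithmetic.
a = Scenario("A", threat=0.01, vulnerability=0.5, consequence=1000.0)
b = Scenario("B", threat=0.1, vulnerability=0.2, consequence=100.0)
print(f"risk(A) = {risk(a):.3g}, risk(B) = {risk(b):.3g}")
```

With these placeholder inputs, scenario A scores higher than scenario B even though its threat likelihood is ten times lower, because its consequence is ten times larger and its vulnerability is higher. A single score hides that difference, which is one reason to keep the three components visible.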

Formal risk assessments are widely used and have advanced significantly over the last several decades. Industries vulnerable to major hazards, such as nuclear power, frequently rely on quantitative risk assessments. Many risk-assessment methodologies exist, each with its own advantages and disadvantages. Modern risk-assessment methods, typically applied quantitatively to engineered systems (e.g., nuclear reactors or aerospace applications), might also be used to support attempts to achieve useful nuclear arms-control agreements.

There are large differences between engineered and non-engineered systems, especially in their levels of complexity and uncertainty. Non-engineered systems involving complex human behaviors often include intangible interactions and feedback mechanisms that may not lend themselves to probabilistic analysis. As a result, risk assessments of non-engineered systems typically rely heavily on intuition. However, this does not necessarily negate the utility of a structured, formal approach to risk assessment in the arms-control arena. Like the intuitive approaches currently used in nuclear arms control, formal methods can also reflect objective conditions, support declared goals, and provide agility in adapting to new information or changed circumstances. Both approaches can in principle address the core issue in all risk-management challenges (namely, to support good decision making in the face of uncertainties), and both can be shaped by the norms and values (and biases) of their users. The strengths and weaknesses of each approach must therefore be considered.

While some believe that quantitative risk assessment is both feasible and useful for nuclear arms control even in its simplest form, others believe that such applications are either impossible or wrong (Hellman and Cerf, 2021). Internet pioneer and Turing Award winner Vinton Cerf argues (along with Hellman) that quantification is not necessary to understand that the consequences of nuclear war are unacceptable, and that urgent actions to reduce the likelihood should therefore be taken without waiting for the results of quantitative analysis. Advocates for intuitive risk assessments point out that living beings evolved their instincts to survive risks, and that this should be recognized and respected.

Critics of such intuitive approaches, however, note that intuition can also lead people astray. Numerous studies in psychology and behavioral economics have demonstrated that intuitions are affected by phenomena such as recency bias, and even by how risks are presented (e.g., verbally or graphically) (Gonzalez and Wallsten, 1992; Solomon, 2022). Likewise, intuitive evaluations of risk tend to overweight the probability of extremely rare events (prospect theory; Kahneman and Tversky, 1979), while the “boiling frog” analogy suggests that the opposite can also occur: slow increases in risk may not create the level of alarm needed to inspire protective action. Finally, in the context of nuclear arms control, if adversaries have differing or even incommensurate intuitive narratives about risk, this can result in protracted or even unresolvable conflicts or stalemates in negotiations.

These challenges have inspired the authors of this paper to explore the possible benefits of probabilistic risk assessment (Bier, 1997; Cox and Bier, 2017) in arms control, given that the use of quantitative methods has helped to identify the most serious causes of risk and the most effective risk-reduction actions in a wide variety of fields. Risk assessments of engineered systems typically involve a set of possible “scenarios,” whose consequences and likelihood can each be analyzed. The value of such methods lies not only in the quantitative or numerical results, but also in the ability of these methods to identify the range of possible scenarios, structure or group those scenarios in a tractable manner, facilitate an understanding of newly identified risks or new technologies, and ensure a reasonable degree of completeness. This systematic approach may therefore also be useful in non-engineered, policy-oriented risk assessment, as in arms-control or other negotiations.

Application of abstract concepts to complex human-related matters, however, doesn’t always prove practical. While early applications of game theory to nuclear deterrence in the late 1960s fed expectations that it could be applied to arms control, Schelling (1966), Harsanyi (1967), and others achieved only limited success in applying game theory, due to human diversity and the complexity of arms-control issues. Risk-analysis results can also be difficult for decision-makers to interpret. Therefore, persuading potential decision makers or arms-control negotiators of the utility of using formal risk-assessment methods would benefit from a compelling “existence proof,” which does not yet appear to exist. We speculate that HBGVs (which are rapidly growing in importance as an issue in nuclear arms control) might provide a useful case study to analyze using formal risk-assessment methods as the basis for such a proof.

HBGVs as an example

Currently, hypersonic boost-glide vehicles are primarily used in regional weapons systems rather than global strategic systems, but some intercontinental systems have already been deployed. Moreover, even regional dynamics can affect global strategy, especially in escalation scenarios (where an initially regional conflict becomes broader). Furthermore, the need to defend against regional HBGVs could result in missile-defense advances that threaten traditional strategic systems. Some HBGV developments could also have qualitative capabilities (e.g., against early warning, defense, and/or communications systems) that could be either advantageous or destabilizing, both regionally and globally.

Perhaps the key feature of HBGVs is that they are more maneuverable than traditional intercontinental ballistic missiles. Russia, China, North Korea, the United States, and other nations have different priorities for HBGVs, but together they encompass nuclear, conventional, and unarmed payloads on intercontinental, intermediate, and short-range delivery systems. As of early 2024, the only nuclear arms-control treaty that would limit any of these systems is New START, but that applies only if a nuclear warhead is placed on the systems that are already considered accountable under the treaty, or that would be accountable if treated as treaty-defined ballistic or cruise missiles. Some intercontinental delivery systems would not be considered treaty-accountable (for example, if they did not follow a ballistic trajectory over at least half their range, or were not self-propelled and aerodynamic over at least half their range). Views differ on how these hypersonic systems might impact nuclear stability and whether they need to be addressed in arms control. In the short term, intercontinental hypersonic weapons would be limited in number, but intermediate and shorter-range conventional (non-nuclear) systems may see wider deployment in regional conflicts.

Scenario structuring in risk assessment

Any risk assessment is likely to be scenario-based, so the first step in the HBGV case involves delineating the specific scenarios to be studied. The level of detail of the scenarios can be tailored to support the decisions to be made based on an analysis. Recognizing the need to address both uncertainty and consequences (e.g., in a nuclear exchange or in a negotiation), risk assessment generally focuses on three key questions (Kaplan and Garrick, 1981):

- What can go wrong?

- What is the likelihood of each possible scenario?

- What are the consequences?
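Kaplan and Garrick’s answer to these questions can be written as a set of triplets (scenario, likelihood, consequence), which can then be summarized as a risk curve giving the probability that consequences reach or exceed each level. A minimal Python sketch, assuming mutually exclusive scenarios and purely hypothetical numbers:

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Triplet:
    """One answer to the three questions for a single scenario."""
    scenario: str       # What can go wrong?
    probability: float  # How likely is it? (probability over a fixed period)
    consequence: float  # What are the consequences? (arbitrary harm units)


def exceedance_curve(triplets):
    """Return (x, P[consequence >= x]) for each distinct consequence level x.

    Assumes the scenarios are mutually exclusive, so probabilities can be summed.
    """
    levels = sorted({t.consequence for t in triplets})
    return [
        (x, sum(t.probability for t in triplets if t.consequence >= x))
        for x in levels
    ]


# Hypothetical scenarios and numbers, for illustration only.
risk_set = [
    Triplet("scenario 1", probability=0.010, consequence=1.0),
    Triplet("scenario 2", probability=0.002, consequence=10.0),
    Triplet("scenario 3", probability=0.001, consequence=100.0),
]
for x, p in exceedance_curve(risk_set):
    print(f"P(consequence >= {x:g}) = {p:.3f}")
```

The curve can only fall as the consequence threshold rises; comparing two analysts’ curves shows at which severity levels their assessments diverge.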

Tools are available to help in scenario structuring. These include hazard and operability studies (Kletz, 1999; see also International Electrotechnical Commission, 2016); hierarchical holographic modeling (Haimes, 1981); and the theory of scenario structuring (Kaplan et al., 2001). While any risk-assessment methodology has limitations and disadvantages, scenario structuring provides greater clarity than many other assessment methods. For example, even without quantifying the probabilities of the events in a fault tree or event tree, the effort of constructing the tree can highlight events that could otherwise be overlooked or underestimated. The thought process can help identify relationships among events (e.g., how the occurrence of one event or condition can influence the probabilities of subsequent events).

Hypothetical risk assessment of nuclear-armed hypersonic weapons

There is at least one significant advantage of quantitative methods such as those described above: They make explicit the scenarios that can lead to favorable or unfavorable outcomes. This approach is complementary to traditional approaches to arms-control analysis (which tend to be more narrative-based). In other words, the use of quantitative risk assessment is not intended to be a principal basis for decisions, or to replace the knowledge and understanding of key decision makers, negotiators, etc. But traditional approaches may provide little basis for resolution of differences if two groups have different intuitive perceptions of risk (e.g., about the likelihood that nuclear weapons will be used in a given conflict). By contrast, if two scenario-based risk assessments come to different conclusions about risk, that paves the way for a discussion about the reasons. Do they make different assumptions, or rely on different data? Does one omit some scenarios that play a role in the other?

HBGVs are not the only problem that would merit analysis using quantitative scenario-based methods, or the most important such problem, or even uniquely suited to the application of these methods. What we present here merely illustrates how the methods could be applied to a significant problem.

Fault tree for nuclear-armed hypersonic weapons

Some methods of risk assessment are particularly appropriate for analyzing the likelihood of rare events (Bier et al., 1999) for which little, if any, empirical data are available, since the undesired event (e.g., nuclear war) can be decomposed into constituent events whose likelihoods may be easier to estimate. For example, fault trees and event trees are frequently used to model the risks of nuclear power plants. Fault trees use deductive, or “top-down,” logic (beginning with a hypothesized failure or undesired event, and working backward to identify which combinations of events might give rise to that event), while event trees use inductive, or “bottom-up,” logic (beginning by hypothesizing an initiating event, and working forward in time to identify all possible combinations of subsequent events that could lead to an undesirable outcome). A decision tree can be thought of as a variant of an event tree that includes decision options, each of which can lead to one or more possible outcomes, often quantified by probabilities.
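The gate logic of fault trees and the path logic of event trees can be sketched in a few lines of Python. This minimal version assumes that basic events are statistically independent (real analyses must often model dependencies such as common-cause failures), and the probabilities in the example are placeholders rather than estimates.

```python
import math


def and_gate(*probs: float) -> float:
    """AND gate: every input event must occur (exact only for independent inputs)."""
    return math.prod(probs)


def or_gate(*probs: float) -> float:
    """OR gate: at least one input occurs, 1 - prod(1 - p) for independent inputs."""
    return 1.0 - math.prod(1.0 - p for p in probs)


def event_tree_sequence(initiator: float, *branch_probs: float) -> float:
    """Forward logic: probability of the initiating event times the conditional
    probability of each branch taken along one path of an event tree."""
    return initiator * math.prod(branch_probs)


# Placeholder inputs, for illustration only.
technological_error = and_gate(0.1, 0.01)   # false alarm AND failure to detect it
error = or_gate(1e-3, technological_error)  # human error OR technological error
print(technological_error, error)
print(event_tree_sequence(0.5, 0.1, 0.2))
```

The OR result is always at most the sum of its inputs; for small probabilities the two are nearly equal, which is the basis of the common rare-event approximation.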

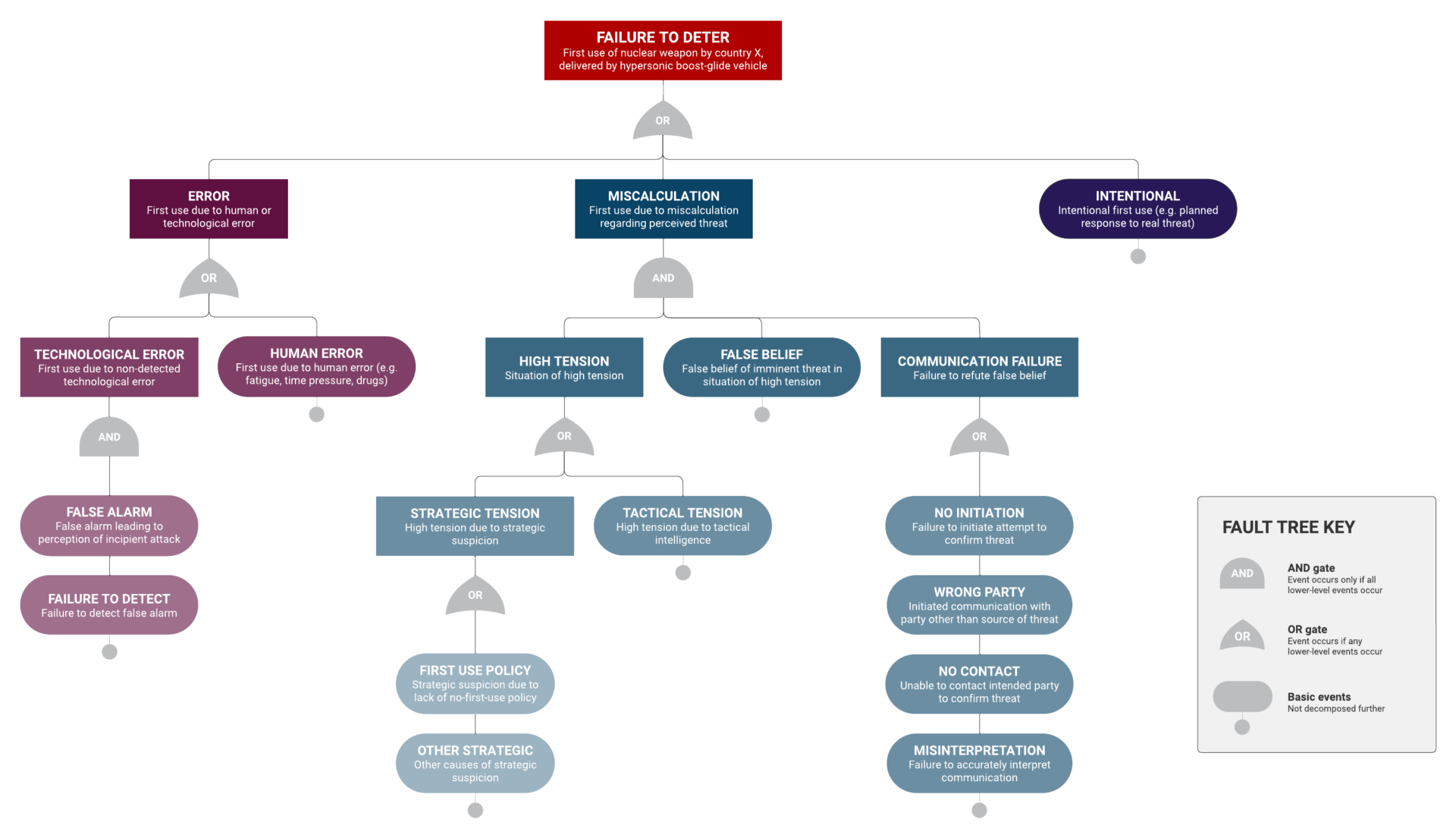

Figure 1 shows a simple hypothetical fault tree for the use of nuclear-armed hypersonic weapons in a situation prior to a nuclear war. (Figure: Authors)

This fault tree is incomplete (a realistic fault tree for this problem would have many more branches), and we do not claim that it is definitive or accurate. (Another team might draw a significantly different tree with other event descriptions.) However, a concrete example makes the advantages and disadvantages of using risk assessment methods clearer and easier to understand.

To illustrate how this tree is read, consider its top level (an OR gate), which postulates three ways in which use of nuclear-armed HBGVs could arise: human or technological error; deliberate use based on a miscalculation of the perceived threat; or intentional use in response to an actual threat. Here, the difference between error and miscalculation is essentially whether the nature of the event would be evident to an informed observer almost immediately (error), versus only in retrospect, with additional background or context (miscalculation). Each of these paths is then further decomposed into its contributing factors. For example, “error” could be either human error or technological error. Technological errors in turn (as modeled using an AND gate) are shown as requiring both a false alarm and failure to detect that the alarm is false. Human error could, if desired, be further subdivided into various possible causes (fatigue, time pressure, drug or alcohol use, mental illness, etc.). However, that level of detail is suppressed here. Similarly, “miscalculation” is here modeled as requiring a situation of high tension (which could arise due to either strategic or tactical considerations), a false belief that there was an imminent threat, and failure to refute that false belief (e.g., due to lack of communication). Finally, intentional use of nuclear-armed HBGVs could in turn be subdivided into various possible reasons for such use, for example, because of pressure to act (“use-it-or-lose-it”) or intentional escalation of a conventional (non-nuclear) conflict.
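Even without numbers, the structure just described can be analyzed mechanically. The Python sketch below encodes the gates as paraphrased in the text (assuming that the two reasons for intentional use are joined by an OR gate; the actual figure may contain additional branches) and derives the minimal cut sets: the smallest combinations of basic events that are sufficient to produce the top event.

```python
from itertools import product

# Gate structure paraphrased from the text's description of Figure 1.
# Assumption: the two reasons for intentional use are joined by an OR gate.
TREE = ("OR", [
    ("OR", [                                   # error
        "human error",
        ("AND", ["false alarm", "false alarm not detected"]),  # technological error
    ]),
    ("AND", [                                  # miscalculation
        ("OR", ["strategic tension", "tactical tension"]),     # high tension
        "false belief in imminent threat",
        "false belief not refuted",
    ]),
    ("OR", [                                   # intentional use
        "pressure to act",
        "intentional escalation",
    ]),
])


def cut_sets(node):
    """Expand a gate into cut sets (frozensets of basic events), top-down."""
    if isinstance(node, str):                  # basic event
        return [frozenset([node])]
    gate, children = node
    expanded = [cut_sets(child) for child in children]
    if gate == "OR":                           # any child's cut set suffices
        return [cs for sets in expanded for cs in sets]
    if gate == "AND":                          # one cut set from every child
        return [frozenset().union(*combo) for combo in product(*expanded)]
    raise ValueError(f"unknown gate {gate!r}")


def minimal_cut_sets(node):
    """Drop any cut set that strictly contains another (Boolean absorption)."""
    sets = set(cut_sets(node))
    minimal = [s for s in sets if not any(other < s for other in sets)]
    return sorted(minimal, key=lambda s: (len(s), sorted(s)))


for mcs in minimal_cut_sets(TREE):
    print(sorted(mcs))
```

Single-event cut sets (here, human error and the two intentional-use paths) are single points of failure, while the miscalculation path requires three conditions to coincide. Making that structure explicit is a qualitative insight that needs no probability estimates.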

Note that the methods used for assigning numerical values to the various events in the fault tree (and the uncertainties about those values) may differ. For example, some could perhaps be estimated based on historical data (such as failure of detection), while others could be estimated only judgmentally (e.g., the likelihood that foreign governments have a false belief). However, using the subjectivist theory of probability, both could be estimated quantitatively (at least in principle).
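Under the subjectivist view, both kinds of estimate can be expressed in the same form, for example as a beta distribution over the unknown probability. The Python sketch below is a minimal illustration of that idea; the counts, the elicited value of 0.05, and the weight of 20 pseudo-observations are hypothetical, not real historical data or expert judgments.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class BetaBelief:
    """Subjective Beta(alpha, beta) distribution over an unknown event probability."""
    alpha: float
    beta: float

    @property
    def mean(self) -> float:
        return self.alpha / (self.alpha + self.beta)

    def update(self, failures: int, trials: int) -> "BetaBelief":
        """Conjugate Bayesian update with binomial evidence (failures out of trials)."""
        if not 0 <= failures <= trials:
            raise ValueError("need 0 <= failures <= trials")
        return BetaBelief(self.alpha + failures, self.beta + trials - failures)


# Data-based event (e.g., failure of detection): uniform Beta(1, 1) prior updated
# with hypothetical counts, NOT real historical data.
detection_failure = BetaBelief(1, 1).update(failures=2, trials=98)

# Judgment-based event (e.g., a foreign government's false belief): a hypothetical
# elicited best guess of 0.05, weighted like 20 pseudo-observations.
false_belief = BetaBelief(alpha=0.05 * 20, beta=0.95 * 20)

print(round(detection_failure.mean, 4), round(false_belief.mean, 4))
```

Encoding both estimates as distributions keeps their uncertainty visible: the data-based estimate becomes tighter as observations accumulate, while the judgmental estimate remains only as strong as the elicitation behind it.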

If the probabilities of some events in the fault tree seem too difficult to estimate accurately, some could perhaps be ranked on a purely ordinal basis—that is to say, rating some as more likely than others. Even this process can make critical assumptions explicit and facilitate discussion and debate if differences of opinion emerge. For example, some analysts might argue that the availability of nuclear-armed hypersonic weapons would improve survivability of a country’s nuclear capability, thereby reducing the probability of preemptive attack in situations of high tension. Conversely, others might argue that the availability of nuclear-armed hypersonic weapons would make nuclear use more likely—for example, because of the greater maneuverability of such weapons (compared to intercontinental ballistic missiles), or if the use of non-nuclear hypersonic weapons on the battlefield reduces the psychological barriers to use of nuclear hypersonic weapons. Although use of risk-analysis models might not provide immediate answers to key questions, even this simple hypothetical fault tree shows that it can highlight those questions, help to differentiate among similar scenarios, encourage analysts to make their assumptions explicit, and help to identify areas of disagreement or need for further information.
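When analysts can only rank events ordinally, their rankings can still be compared systematically. This minimal Python sketch, using two hypothetical rankings of the top-level branches of the fault tree, lists each pair of events that the analysts order differently and summarizes overall agreement with Kendall’s tau (assuming no ties).

```python
from itertools import combinations


def pairwise_disagreements(ranking_a, ranking_b):
    """List event pairs that two analysts order differently.

    Each ranking lists event names, most likely first; both must cover the same events.
    """
    if set(ranking_a) != set(ranking_b):
        raise ValueError("rankings must cover the same events")
    pos_a = {e: i for i, e in enumerate(ranking_a)}
    pos_b = {e: i for i, e in enumerate(ranking_b)}
    return [
        (x, y)
        for x, y in combinations(ranking_a, 2)
        if (pos_a[x] - pos_a[y]) * (pos_b[x] - pos_b[y]) < 0  # opposite order
    ]


def kendall_tau(ranking_a, ranking_b):
    """Rank agreement in [-1, 1]: 1 = identical order, -1 = fully reversed (no ties)."""
    n = len(ranking_a)
    pairs = n * (n - 1) // 2
    discordant = len(pairwise_disagreements(ranking_a, ranking_b))
    return (pairs - 2 * discordant) / pairs


# Hypothetical rankings, most likely first.
analyst_1 = ["miscalculation", "intentional use", "error"]
analyst_2 = ["intentional use", "error", "miscalculation"]
print(pairwise_disagreements(analyst_1, analyst_2))
print(round(kendall_tau(analyst_1, analyst_2), 3))
```

Rather than a single verdict that the analysts disagree, the output names the specific comparisons in dispute (here, where miscalculation falls relative to the other two branches), which is where discussion and further information are most needed.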

Risk assessment: advantages and disadvantages

One advantage of risk assessment is obvious: The mere act of scenario-structuring can provide clarity. For example, even without quantifying the probabilities of the events in a fault tree or an event tree, the effort of constructing the tree can highlight events that had been previously overlooked or underestimated. Likewise, the thought process can help to identify relationships among events (for example, the key role of situations of high tension in miscalculations, in the hypothetical fault tree above). And for some applications, a full and complete risk assessment may not be needed; rather, the use of risk assessment “tools” to perform a partial analysis might be sufficient.

In a wide variety of fields, risk assessment is used as a basis for improved risk-reduction decisions. Even without well-defined probability estimates, scenarios afford the opportunity to rank-order risks. After the most important risks have been identified (based on likelihood, severity, or some combination of both), the risk assessment also supports identifying which aspects or elements of the scenario(s) are most important. After these dominant contributors have been identified, one can then review the various details involved in those scenarios to identify opportunities for risk reduction (for example, methods to improve communication between potential arms-control adversaries, or more reliable ways to detect threats).
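One standard way to identify dominant contributors in probabilistic risk assessment is the Fussell-Vesely importance measure: the fraction of the top-event probability that comes from minimal cut sets containing a given event. The Python sketch below applies it to cut sets worked out by hand from the fault-tree structure described earlier, under the rare-event approximation and an independence assumption; all probabilities are arbitrary placeholders, not estimates.

```python
import math

# Minimal cut sets derived by hand from the text's description of Figure 1.
CUT_SETS = [
    {"human_error"},
    {"false_alarm", "alarm_not_detected"},
    {"strategic_tension", "false_belief", "not_refuted"},
    {"tactical_tension", "false_belief", "not_refuted"},
    {"pressure_to_act"},
    {"intentional_escalation"},
]

# Arbitrary placeholder probabilities, for illustration only (not estimates).
P = {
    "human_error": 1e-4, "false_alarm": 1e-2, "alarm_not_detected": 1e-2,
    "strategic_tension": 0.1, "tactical_tension": 0.1,
    "false_belief": 0.05, "not_refuted": 0.1,
    "pressure_to_act": 1e-4, "intentional_escalation": 1e-4,
}


def cut_set_prob(cs, p):
    """Probability that every event in the cut set occurs (independence assumed)."""
    return math.prod(p[e] for e in cs)


def top_event_prob(cut_sets, p):
    """Rare-event approximation: sum of minimal-cut-set probabilities."""
    return sum(cut_set_prob(cs, p) for cs in cut_sets)


def fussell_vesely(cut_sets, p):
    """Share of top-event probability from cut sets containing each event."""
    total = top_event_prob(cut_sets, p)
    return {
        e: sum(cut_set_prob(cs, p) for cs in cut_sets if e in cs) / total
        for e in p
    }


ranked = sorted(fussell_vesely(CUT_SETS, P).items(), key=lambda kv: kv[1], reverse=True)
for event, fv in ranked:
    print(f"{event:24s} {fv:.2f}")
```

With these arbitrary inputs, the false-belief and failure-to-refute events dominate, which would point toward measures such as better crisis communication. Different inputs could produce an entirely different ranking, which is exactly the kind of disagreement the exercise is meant to surface.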

In cases where risk reductions cannot be achieved unilaterally, risk assessment information would also allow negotiators to focus on mitigating or managing the highest-order risks, while possibly paying less attention to scenarios that (while theoretically possible) may not be important contributors to the overall level of risk. These types of insights, although relying on quantitative analyses, can often be summarized qualitatively, making the results more broadly accessible. In this way, risk assessments provide a relatively reproducible method that can help to define leadership decision pathways, giving negotiators a range of options and illustrating ways to assess their outcomes. In addition to informing arms-control negotiators and their staff about the most important risks, risk assessments can also provide a communication platform for negotiators. For example, risk assessments could help both arms-control negotiators and their staffs to understand how best to represent the threats they perceive, or how particular measures might increase or decrease the likelihood of particular events in the risk assessment.
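To show how a proposed measure might raise or lower risk, a model can be re-evaluated with modified inputs. This Python sketch assumes independent basic events, the gate structure as paraphrased from the description of Figure 1, and arbitrary placeholder probabilities; the “measure” in the example (halving the chance that a false belief goes unrefuted, say through an improved communication channel) is hypothetical.

```python
import math

# Arbitrary placeholder probabilities, for illustration only (not estimates).
BASELINE = {
    "human_error": 1e-4, "false_alarm": 1e-2, "alarm_not_detected": 1e-2,
    "strategic_tension": 0.1, "tactical_tension": 0.1,
    "false_belief": 0.05, "not_refuted": 0.1,
    "pressure_to_act": 1e-4, "intentional_escalation": 1e-4,
}


def p_or(*ps):
    """OR gate for independent events."""
    return 1.0 - math.prod(1.0 - p for p in ps)


def p_and(*ps):
    """AND gate for independent events."""
    return math.prod(ps)


def top_event(p):
    """Top-event probability for the gate structure paraphrased from Figure 1."""
    error = p_or(p["human_error"], p_and(p["false_alarm"], p["alarm_not_detected"]))
    tension = p_or(p["strategic_tension"], p["tactical_tension"])
    miscalculation = p_and(tension, p["false_belief"], p["not_refuted"])
    intentional = p_or(p["pressure_to_act"], p["intentional_escalation"])
    return p_or(error, miscalculation, intentional)


def what_if(p, **changes):
    """Ratio of the top-event probability under hypothetical changes to the baseline."""
    return top_event({**p, **changes}) / top_event(p)


# Hypothetical measure: halve the chance that a false belief goes unrefuted.
print(f"{what_if(BASELINE, not_refuted=0.05):.2f}")
```

A ratio below 1 indicates a risk reduction relative to the baseline and a ratio above 1 an increase. Presenting results this way lets negotiators compare candidate measures on a common baseline, although the ratios still depend on the assumed inputs.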

Alternatively, risk assessments could be used as input to further analysis. For example, risk assessment could estimate the likelihood of particular outcomes or actions in a game-theoretic analysis of competition or cooperation between nations, or could help prioritize the most important uncertainties for future research or intelligence-gathering. To achieve these goals, it is important to keep risk assessments up to date as circumstances change, as so-called “living risk assessments” (Goble and Bier, 2013), “running estimates” in the military context (US Army, 2019), or simply ongoing situation assessment. Risk assessments may need to be updated for reasons ranging from the advent of novel technologies, to a change in leadership in an adversary nation, to transitory changes in circumstances (such as local skirmishes). The importance of maintaining a living risk assessment may be even greater in the context of arms-control negotiations than in other domains, since the parties’ understandings of the risks may change over time, and a once-agreed-upon risk assessment may come to be viewed as obsolete.
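A living risk assessment implies repeatedly revising estimates as new information arrives. One simple way to do that for a single event is Bayes’ rule in odds form, sketched below in Python. The prior of 0.05, the evidence descriptions, and the likelihood ratios are hypothetical, and the sequential updates assume the pieces of evidence are conditionally independent given the hypothesis.

```python
def update(prior: float, likelihood_ratio: float) -> float:
    """Bayes' rule in odds form: posterior odds = prior odds x likelihood ratio.

    likelihood_ratio = P(evidence | hypothesis) / P(evidence | not hypothesis).
    """
    if not 0.0 < prior < 1.0:
        raise ValueError("prior must be strictly between 0 and 1")
    odds = prior / (1.0 - prior) * likelihood_ratio
    return odds / (1.0 + odds)


def running_estimate(prior, evidence):
    """Apply (description, likelihood ratio) updates in order, keeping a log."""
    log = [("prior", prior)]
    p = prior
    for description, lr in evidence:
        p = update(p, lr)
        log.append((description, p))
    return log


# Hypothetical hypothesis: an adversary holds a false belief in an imminent threat.
# Evidence items and likelihood ratios are invented for illustration only.
EVIDENCE = [
    ("change in adversary leadership", 2.0),
    ("local skirmish", 3.0),
    ("skirmish de-escalated", 0.5),
]
for description, p in running_estimate(0.05, EVIDENCE):
    print(f"{description:32s} {p:.3f}")
```

Keeping the log of updates, rather than only the latest number, records why the estimate changed, which matters when parties later dispute whether an assessment has become obsolete.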

There are, of course, also potential disadvantages of risk assessment. First, the logic and assumptions behind an engineering-style analysis may not always be straightforward and easily understood, especially for subject-matter experts who may be knowledgeable about the problem at hand but not familiar with risk methods. For example, in the hypothetical fault tree, assumptions about adversary actions appear in multiple parts of the fault tree (under both intentional use and miscalculation). It is arguable that greater understanding might result from putting actions and behavior by a particular group of nations into a single framework rather than having them scattered throughout the tree. In principle, however, these different types of analysis (e.g., engineering-style risk methods along with more traditional narrative methods) could perhaps be used in tandem, as in so-called “plural analysis” (Brown and Lindley, 1986).

Risk assessment can also lead to mistaken interpretations in the face of well-known decision maker biases. For example, in a phenomenon known as “probability neglect” (Sunstein, 2002), either extremely small probabilities can be overweighted (Kahneman and Tversky, 1979), or such low-probability events can be neglected as essentially impossible. People are also often insensitive to the magnitude of a bad outcome—for example, 1,000 deaths may be viewed as almost as bad as 100,000 deaths (Slovic et al., 2013, on “psychic numbing”). Moreover, risk assessments themselves can have biases. This problem is perhaps more extreme for open systems such as arms control, where most actions involve highly uncertain human behavior, and probabilities cannot be easily estimated based on past failure data or component testing.
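The overweighting of small probabilities can be illustrated with a one-parameter probability weighting function of the form used in cumulative prospect theory. In the Python sketch below, the default parameter 0.61 is a value reported in experimental work for gains and is used only for illustration; the function describes perceived decision weights, not the probabilities themselves.

```python
def decision_weight(p: float, gamma: float = 0.61) -> float:
    """Perceived decision weight for probability p.

    One-parameter weighting function of the form used in cumulative prospect
    theory: w(p) = p^g / (p^g + (1 - p)^g)^(1/g). With g < 1, small
    probabilities are overweighted and large ones underweighted; g = 1 gives w(p) = p.
    """
    if not 0.0 <= p <= 1.0:
        raise ValueError("p must be in [0, 1]")
    num = p ** gamma
    return num / (num + (1.0 - p) ** gamma) ** (1.0 / gamma)


for p in (1e-4, 1e-3, 1e-2, 0.5, 0.9):
    print(f"p = {p:<7g} weight = {decision_weight(p):.4f}")
```

With this parameter, a one-in-a-thousand event receives a decision weight more than ten times its probability, while a 90 percent probability is underweighted. The function cannot represent the opposite failure mode, in which very small probabilities are simply ignored.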

In addition, especially on arms-control issues, the scenario approach to risk assessment can generate enormous numbers of possible pathways. Overly numerous or detailed scenarios can generate detailed insights, but could also distract from recognizing important overarching qualitative aspects of the problem. Such elaborate analyses can also be difficult to use as a basis for decisions in real time (as in a crisis situation, or while actively engaged in arms-control negotiations), suggesting that such analyses are perhaps better done offline, far in advance of when the resulting insights or recommendations are likely to be needed. They can then be boiled down to higher-level and less-complex risk assessments that are easier for decision makers to digest and use.
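The growth in the number of scenarios is easy to quantify: an event tree with n binary branch points has 2^n sequences. A common response in probabilistic risk assessment is to truncate sequences below a probability cutoff while tracking how much probability was discarded. The Python sketch below illustrates this with ten hypothetical, independent branch points; real event trees usually have conditional branch probabilities.

```python
import math
from itertools import product


def enumerate_sequences(initiator, branch_points, cutoff=0.0):
    """Enumerate every path through an event tree with binary branch points.

    branch_points: list of (name, probability that the branch event occurs).
    Simplifying assumption: branch probabilities do not depend on earlier
    branches. Paths with probability below `cutoff` are truncated, and the
    total truncated probability is returned so the omission stays visible.
    """
    kept, truncated = [], 0.0
    for outcome in product((True, False), repeat=len(branch_points)):
        prob = initiator * math.prod(
            p if occurs else 1.0 - p
            for (_, p), occurs in zip(branch_points, outcome)
        )
        if prob >= cutoff:
            path = tuple(
                name if occurs else f"no {name}"
                for (name, _), occurs in zip(branch_points, outcome)
            )
            kept.append((path, prob))
        else:
            truncated += prob
    return kept, truncated


# Ten hypothetical branch points, each with probability 0.1 (placeholders).
BRANCHES = [(f"event {i}", 0.1) for i in range(1, 11)]
kept, truncated = enumerate_sequences(1.0, BRANCHES, cutoff=1e-3)
print(f"{2 ** len(BRANCHES)} sequences, {len(kept)} kept, "
      f"truncated probability {truncated:.3f}")
```

Here 1,024 sequences reduce to 56 above the cutoff, while the remaining 968 together carry about 7 percent of the probability. Reporting that truncated share alongside the retained sequences makes explicit how much a condensed analysis leaves out.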

Another concern is that a decision maker might take the results or insights from a risk assessment more seriously than is merited (for example, if other sources of information and expertise, such as the insights from area studies, are not adequately considered in the risk assessment). It is well-known that risk assessments can cause people to fixate on the parts of the analysis that can be easily quantified (e.g., the probability of false alarms) rather than parts that are difficult to quantify (Brown, 2002), and to focus on the scenarios that are shown in the analysis rather than on those that were inadvertently omitted (Fischhoff et al., 1978). This is problematic in analyzing systems that are highly complex or open-ended, as with “non-engineered” systems where human actions play a key role, in which even a reasonably complete enumeration of important scenarios may be impractical or difficult to achieve (Perrow, 1984). For this reason, in situations of great complexity, Leveson (2012) argues against attempting to apply probabilistic risk assessment, and instead recommends both eliminating risks where possible and reducing complexity (making risks clearer and easier to understand and mitigate).

Care must also be taken if the risk assessment (e.g., fault tree) is shared with the adversary in arms-control negotiations, to ensure that important information or assumptions are not inadvertently revealed. In addition, the use of risk assessment could paradoxically serve to derail negotiation instead of supporting it. For example, disagreements about minor aspects of the risk assessment could distract attention from overarching goals, or could be deliberately exploited to delay negotiations.

Despite these potential shortcomings, including possible oversimplification or even misuse of results, the potential benefits of risk assessment justify attempting to develop and apply it to support the broader objective of advancing international security and, where useful, achieving arms-control agreements. It is also important to recognize that methods exist for mitigating some of the pitfalls just mentioned. For example, formal methods of scenario identification and structuring (e.g., Kaplan and Garrick, 1981; Kaplan et al., 2001) can reduce problems of incompleteness; plural analysis (Brown and Lindley, 1986) can ensure sufficient attention to scenarios that are difficult to quantify; formal methods of expert elicitation can improve the calibration of probability estimates, and reduce the pitfalls of groupthink (Janis, 1982); and precursor analysis can use data on “near misses” or other undesired events to help inform risk assessments. There is literature on how best to communicate risk results (including uncertainties) to decision-makers, potentially mitigating difficulties such as probability neglect (Bier, 2001; Solomon, 2022).
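Calibration can be checked when experts answer questions whose outcomes later become known. A simple measure is the Brier score, the mean squared difference between probability forecasts and 0/1 outcomes. The Python sketch below compares two hypothetical experts on four such questions; the forecasts and outcomes are invented for illustration.

```python
def brier_score(forecasts, outcomes):
    """Mean squared difference between probability forecasts and 0/1 outcomes.

    0 is perfect; always forecasting 0.5 scores 0.25.
    """
    if not forecasts or len(forecasts) != len(outcomes):
        raise ValueError("need equal-length, non-empty sequences")
    return sum((f - o) ** 2 for f, o in zip(forecasts, outcomes)) / len(forecasts)


# Hypothetical calibration questions with known outcomes (1 = occurred).
outcomes = [1, 1, 0, 0]
expert_a = [0.9, 0.8, 0.2, 0.1]      # hedged, in the right direction
expert_b = [0.99, 0.01, 0.99, 0.01]  # confident, wrong on two questions
print(f"A: {brier_score(expert_a, outcomes):.3f}  "
      f"B: {brier_score(expert_b, outcomes):.3f}")
```

Lower is better: expert A scores 0.025, while expert B, confidently wrong on two of four questions, scores about 0.49, worse than the 0.25 obtained by always answering 0.5.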

A path forward could include a risk assessment test case for nuclear arms control

There may be considerable value in undertaking detailed risk assessment of the issues related to nuclear arms control for two key reasons. First, the structural formality of these methods provides a way to make explicit the often-implicit assumptions of interlocutors. Second, risk methods provide a pre-analyzed framework into which new information can be integrated as it becomes available, and that framework can be useful in getting multiple team members (or even adversaries) thinking along consistent lines. Without arguing for replacing qualitative analyses or for basing decisions primarily on risk assessment, we believe that structured, engineering-style risk assessments would complement the qualitative analyses done in the past, yielding ways of viewing the problem that can supplement and inform the more intuitive understanding of decision makers. Under some circumstances, risk assessment could even be used in near real time (for example, to support arms-control negotiators), despite the complexities of a scenario-based approach. Once a detailed analysis has been completed, the results could be simplified or condensed in a manner that would make them useful and usable in such situations.

The hypothetical fault-tree analysis of HBGVs presented here is intended only to illustrate these potential benefits, falling short of the type of compelling “existence proof” that might persuade potential decision-makers or arms-control negotiators of the utility of a formal risk-assessment process. In addition to fault trees and event trees, numerous other methods of risk assessment, both qualitative and quantitative, could be explored (and some have already been applied to problems of nuclear-arms control). Examples are pathway analysis, war gaming, red teaming, game theory, agent-based modeling, exchange analysis, stability analysis, and network analysis. The strengths and weaknesses of many of these are discussed in Risk Analysis Methods for Nuclear War and Nuclear Terrorism (National Academies of Sciences, Engineering, and Medicine, 2023, ch. 6). All are ways of modeling or understanding the relationships between two or more nations or organizations. Causal methods recently applied in the fields of statistics and economics could also be beneficial in arms control, for example by providing automated methods of compliance verification. Chaos theory and other methods of understanding complex systems could help to determine whether a given security or arms-control regime contributes to stability in arms-race dynamics.
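As a concrete instance of stability analysis, the classic Richardson arms-race model describes two parties whose armament levels x and y grow in response to each other (coefficients a and b), decline through fatigue or cost (m and n), and are pushed by standing grievances (g and h). The minimal Python sketch below, with hypothetical coefficients, checks the model’s linear stability condition and confirms it with a crude simulation. The model is linear and cannot exhibit chaos; capturing richer dynamics would require nonlinear extensions.

```python
def richardson_stable(a: float, b: float, m: float, n: float) -> bool:
    """Linear stability of the Richardson model
        dx/dt = a*y - m*x + g,   dy/dt = b*x - n*y + h
    for positive reaction (a, b) and fatigue (m, n) coefficients.
    The Jacobian [[-m, a], [b, -n]] has negative trace, so the equilibrium
    is stable exactly when its determinant m*n - a*b is positive.
    """
    return m * n - a * b > 0


def simulate(a, b, m, n, g, h, x0=0.0, y0=0.0, dt=0.01, steps=5000):
    """Crude forward-Euler integration; returns the final (x, y)."""
    x, y = x0, y0
    for _ in range(steps):
        # Both updates use the values from the previous step.
        x, y = x + dt * (a * y - m * x + g), y + dt * (b * x - n * y + h)
    return x, y


# Hypothetical coefficients: fatigue outweighs reaction, then the reverse.
print(richardson_stable(0.5, 0.5, 1.0, 1.0), simulate(0.5, 0.5, 1.0, 1.0, 1.0, 1.0))
print(richardson_stable(1.2, 1.2, 1.0, 1.0), simulate(1.2, 1.2, 1.0, 1.0, 1.0, 1.0))
```

In the first case the levels settle at the equilibrium x = y = 2; in the second, mutual reaction outweighs fatigue (ab > mn) and both levels grow without bound.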

A desirable step forward would be for one or more organizations (either governmental or non-governmental) to select a specific issue or problem (such as the impact of HBGVs on arms control) and sponsor a project to explore the applicability of risk-assessment methods and develop the sort of risk assessment suggested here. Such an analysis would be resource-intensive and take considerable time to develop, and would perhaps best be done by a dedicated group of analysts, working closely with (or at least informed by) decision makers or past arms-control negotiators. Given recent and ongoing geopolitical changes at a level not seen in many decades, it may be time to apply modern techniques of risk assessment to nuclear arms control to identify new ideas, improve understanding, and provide useful insights, increasing the security of all parties to negotiations. (The advance of “big data” and artificial intelligence/machine learning may also help to support the types of analyses suggested here.) Such a study could serve not only as an “existence proof” of the efficacy of the approach (if successfully demonstrated), but also as a way to ensure that end-users (for example, arms-control policymakers and negotiators) are aware of the types of insights that could be provided by this type of analysis.

The authors would like to gratefully acknowledge Siegfried Hecker, who provided valuable insights into the benefits and communication of risk analyses, and Gorgiana Alonzo, whose editorial intelligence improved the communication of ideas. In addition, William Barletta provided numerous suggestions of professionals to interact with in this field.


References

- Bier, V.M. (1997) “An overview of probabilistic risk analysis for complex engineered systems,” Fundamentals of Risk Analysis and Risk Management (V. Molak, editor), Boca Raton, FL: Lewis Publishers.

- Bier, V. M., Y. Y. Haimes, J. H. Lambert, N. C. Matalas, and R. Zimmerman, (1999) “Assessing and Managing the Risk of Extremes,” Risk Analysis, Vol. 19, pp. 83-94.

- Bier, V. M., (2001) “On the State of the Art: Risk Communication to Decision-Makers,” Reliability Engineering and System Safety, Vol. 71, pp. 151-157.

- Brown, R.V., (2002) “Environmental regulation: Developments in setting requirements and verifying compliance.” Systems Engineering and Management for Sustainable Development (A.P. Sage, editor).

- Brown, R.V., and D.V. Lindley, (1986) “Plural analysis: Multiple approaches to quantitative research,” Theory and Decision, Vol. 20, pp. 133-154.

- Cox, L.A., and V.M. Bier, (2017) “Probabilistic Risk Analysis,” Risk in Extreme Environments: Preparing, Avoiding, Mitigating, and Managing (V. M. Bier, editor), Routledge, pp. 9-32.

- Fischhoff, B., P. Slovic, and S. Lichtenstein, (1978) “Fault Trees: Sensitivity of Estimated Failure Probabilities to Problem Representation,” Journal of Experimental Psychology: Human Perception and Performance, Vol. 4, No. 2, pp. 330-344.

- Goble, R., and V.M. Bier, (2013) “Risk Assessment Can Be a Game-Changing Information Technology—But Too Often It Isn’t,” Risk Analysis, Vol. 33, pp. 1942-1951.

- Gonzalez, C.C., and T.S. Wallsten, (1992) “The effects of communication mode on preference reversal and decision quality,” Journal of Experimental Psychology: Learning, Memory, and Cognition, Vol. 18, pp. 855-864.

- Haimes, Y.Y., (1981) “Hierarchical holographic modeling,” IEEE Transactions on Systems, Man, and Cybernetics 11(9): 606-617.

- Harsanyi, John, (1967). “A Game-theoretical Analysis of Arms Control and Disarmament Problems.” In Development of Utility Theory for Arms Control and Disarmament: Models of Gradual Reduction of Arms. Report to Arms Control and Disarmament Agency. Contract no. ACDA/ST-116. Princeton: Mathematica.

- Hellman, M.E., and V.G. Cerf, (2021) “An existential discussion: What is the probability of nuclear war?” Bulletin of the Atomic Scientists, March 18. Available at: https://thebulletin.org/2021/03/an-existential-discussion-what-is-the-probability-of-nuclear-war/.

- International Electrotechnical Commission (IEC), (2016) Hazard and operability studies (HAZOP studies) - Application guide, IEC 61882.

- Janis, Irving L., (1982) Groupthink: Psychological Studies of Policy Decisions and Fiascoes, Cengage Learning.

- Kahneman, D., and A. Tversky, (1979) “Prospect Theory: An Analysis of Decision under Risk,” Econometrica Vol. 47, No. 2, pp. 263-292.

- Kaplan, S., and B.J. Garrick, (1981) “On the quantitative definition of risk,” Risk Analysis, 1(1): 11-27.

- Kaplan, S., Y.Y. Haimes, and B.J. Garrick, (2001) “Fitting hierarchical holographic modeling into the theory of scenario structuring and a resulting refinement of the quantitative definition of risk,” Risk Analysis, 21(5): 807-815.

- Kletz, T. (1999) Hazop and Hazan. Identifying and Assessing Process Industry Hazards (4th ed.). Rugby: IChemE. ISBN 978-0-85295-506-2.

- Leveson, N., (2012) Engineering a Safer World: Applying Systems Thinking to Safety, MIT Press.

- National Academies of Sciences, Engineering, and Medicine. (2023) Risk Analysis Methods for Nuclear War and Nuclear Terrorism. Washington, DC: The National Academies Press.

- National Research Council. (2010) Review of the Department of Homeland Security's Approach to Risk Analysis. Washington, DC: The National Academies Press.

- Perrow, Charles. (1984) Normal Accidents: Living with High-Risk Technologies. New York: Basic Books.

- Schelling, Thomas C., (1966) Arms and Influence, Yale University Press.

- Slovic, P., D. Zionts, A.K. Woods, R. Goodman, and D. Jinks, (2013) “Psychic Numbing and Mass Atrocity,” In E. Shafir (Ed.), The behavioral foundations of public policy (pp. 126–142). NJ: Princeton University Press.

- Solomon, JD, (2022) Communicating Reliability, Risk & Resiliency to Decision Makers: How To Get Your Boss's Boss To Understand, JD Solomon, Inc.

- Sunstein, C.R. (2002) “Probability neglect: Emotions, worst cases, and law,” Yale Law Journal 112(1), 61-107.

- U.S. Army, (2019) Army Doctrine Publication 3-0, Operations, Army Publishing Directorate.