AI-controlled nuclear weapons, smallpox labs, and nuclear disinformation: The best of 2019 in disruptive tech coverage

By Matt Field | December 27, 2019

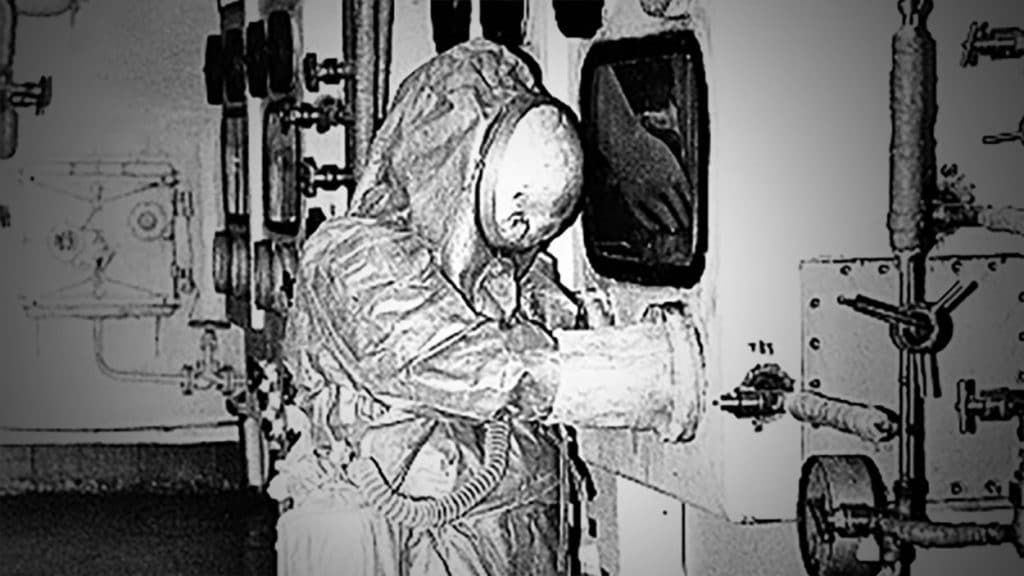

A researcher at a Russian disease research center. Credit: Unknown Soviet photographer. Photo courtesy of Raymond A. Zilinskas.

Since its first issue in 1945, the Bulletin, a publication started by scientists hoping to focus the world’s attention on the dangers of nuclear weapons, has been expanding its scope. These days you’re just as likely to find exclusive climate change coverage as you are to read about new and ill-conceived ventures in the nuclear weapons space. A relatively new focus at the Bulletin involves what we call disruptive technologies. From artificial intelligence (AI) to new gene editing techniques, many technologies under development today promise unquestionably useful applications but also raise thorny ethical and practical questions and the possibility of misuse. Some use cases, in fact, seem only to help spread disinformation, raise the risk of war, or contribute to greater inequality.

There’s been quite a lot to talk about under this umbrella of disruptive technologies, and over the last year, the Bulletin has done just that. From an explosion at a Russian disease research center that houses the smallpox virus to a proposal to allow AI to be used in the command and control system for nuclear weapons, Bulletin authors ranged across the disruptive tech landscape. Here are six stories that are among the best we published in 2019.

What happened after an explosion at a Russian disease research lab called VECTOR?

Biosecurity researcher Filippa Lentzos talked to folks at the World Health Organization and dug deep into archival resources to deliver this story on what happened after an explosion and fire rocked a Russian disease research center that contained a facility housing the smallpox-causing variola virus.

A nuclear detonation in the South China Sea? No, more Twitter conspiracy nonsense

For Hal Turner, a nighttime AM radio host, white supremacist, and federal convict, to push a conspiracy theory that China had detonated a nuclear weapon in the ocean was perhaps not all that surprising. It is noteworthy, however, that a supposed news account on Twitter followed by academics and journalists alike would help amplify Turner’s whopping falsehood and make it go viral. Whether misinformation of this sort on social media could exacerbate nuclear tensions remains, unfortunately, an open question.

Strangelove redux: US experts propose having AI control nuclear weapons

How should the United States respond to potentially destabilizing new weaponry like the hypersonic missile? Two US deterrence experts with experience working in the country’s nuclear weapons enterprise decided the answer to that question is to have … umm … artificial intelligence control the nuclear launch button. Needless to say, the entire Terminator GIF-producing world sent us their favorite animations in response to our tweets about this story.

Hey, let’s fight global pandemics by maybe starting one… Say WHAT?

Bulletin multimedia editor Thomas Gaulkin took a look at the concept of studying potentially pandemic viruses by altering their genetic code so that they could more easily spread among new species, including (gulp) the human species. While at least a pair of elite researchers thought this “gain of function” experimentation sounded like a great idea, the rest of us had only one response: Say WHAT?

The existential threat from cyber-enabled information warfare

One key component of the Bulletin is its Science and Security Board, a group of science and policy experts who each year take a hard look at trends around the world and set the hands of the Doomsday Clock at an appropriate time. They also contribute to the magazine. In this piece, Herb Lin, an expert in cyber policy and security at Stanford University, argues that corruption of the information ecosystem through cyber-enabled information warfare is not just a phenomenon that increases the risk of nuclear war or further hampers efforts to fight climate change. It’s also an existential threat in its own right.

Stories of technological threat—and hope

When it comes time to spitball ideas for our bimonthly magazine, we usually stick to news and analyses of real-world events. With our November issue, however, we decided to try to jolt our readers to attention by having experts craft plausible scenarios from within their research areas about how civilization as we know it might end. While this might seem like more gloom and doom from the organization that brought you the Doomsday Clock, the articles will hopefully spur people to search for a better path. Or, as Bulletin Editor-in-Chief John Mecklin writes, they are not intended to “frighten readers, but, in the tradition of the Clock, to alert them, so they might act in time.”

Keywords: AI, Twitter, VECTOR, bird flu, conspiracy theories, gain of function, smallpox

Topics: Disruptive Technologies