How to protect critical infrastructure from ransomware attacks

By Sejal Jhawer, Gregory Falco | June 25, 2021

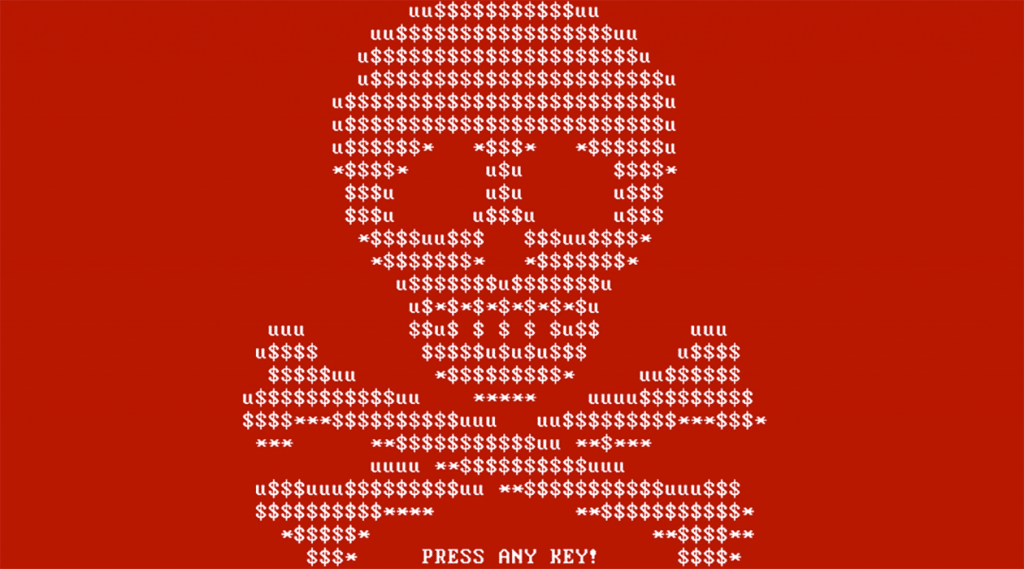

A screenshot of the Petya ransomware that began spreading in 2016. In recent years, ransomware attacks have wrought havoc on important digital infrastructure, like gas pipelines and city governments. Typically, affected users are asked to pay their attackers to regain access to their systems. Image via Wikimedia Commons.

Whether the targets are local governments, hospital systems, or gas pipelines, ransomware attacks, in which hackers lock down a computer network and demand money, are a growing threat to critical infrastructure. The attack on Colonial Pipeline, a major supplier of fuel on the East Coast of the United States, is just one of the latest examples, and there will likely be many more. Yet the federal government has so far failed to protect these organizations from such cyberattacks, and even its actions since May, when Colonial Pipeline was attacked, fall short of what’s necessary.

The pipeline attack crystallized how cyber threats can affect the physical world. Not knowing how deeply the ransomware had infiltrated its computer network last month, Colonial Pipeline halted its operational pipelines for five days before paying a Russian group called DarkSide a ransom of $4.4 million. Meanwhile, East Coasters raced to gas stations in panic as fuel hoarders drained the stations of their supplies. The news was full of alarming scenes of long lines at the pump and even of fuel-laden vehicles catching fire.

In the aftermath of the Colonial Pipeline attack, President Joe Biden issued an executive order that requires federal information technology contractors to provide information about breaches, sets improved security standards for federal networks, and more. While the order deals with federal networks, the hope is that the new practices will affect the private sector as well. Most important, the order outlines the federal government’s intent to move towards a so-called “zero trust” network architecture: a setup where all users are continuously authenticated and validated before receiving access to any application on the network. But rather than merely recommending zero-trust systems, the government needs to actually require them.

The zero-trust model. A zero-trust network assumes that all data and all users are suspect and could compromise the security of the network—even after they are already inside it. User identities are continuously authenticated (confirmed) and validated. Zero-trust security often employs a number of strategies, most commonly including frequent multi-factor authentication (such as two-factor authentication) and micro-segmentation of the network and data (in which users can only access certain security zones and must authenticate their identity at multiple steps). This represents a departure from the previous model of network security known as “castle-and-moat,” in which users are verified once, but granted unrestricted access to resources once inside the network. Though difficult to initially penetrate, such castle-and-moat paradigms—most traditionally, remote data access through corporate virtual private networks (VPNs)—allow an attacker who has breached the network’s security to wreak full havoc once inside with minimal to no further identity verifications. In this sense, a zero-trust model is a step in the right direction towards defensible security.
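To make the contrast with castle-and-moat concrete, here is a minimal Python sketch of a zero-trust access check, under stated assumptions. Every request is evaluated on its own: the user's multi-factor authentication must be recent, and the user must be explicitly allowed into the requested security zone. The user names, zone names, and the 15-minute re-authentication window are hypothetical, chosen only for illustration; real deployments rely on dedicated identity and policy services rather than an in-memory table.

```python
import time
from dataclasses import dataclass
from typing import Optional

# Hypothetical micro-segmentation table: which security zones each user may enter.
ZONE_ACCESS = {
    "alice": {"billing"},
    "bob": {"billing", "pipeline-control"},
}

# Hypothetical policy: multi-factor authentication must be repeated every 15 minutes.
MFA_MAX_AGE_SECONDS = 15 * 60


@dataclass
class Session:
    user: str
    mfa_verified_at: float  # epoch seconds of the last successful MFA check


def authorize(session: Session, zone: str, now: Optional[float] = None) -> bool:
    """Decide a single request; being 'inside' the network grants nothing by itself."""
    now = time.time() if now is None else now
    # Continuous authentication: a stale MFA check means the user must re-verify.
    if now - session.mfa_verified_at > MFA_MAX_AGE_SECONDS:
        return False
    # Micro-segmentation: access is granted per zone, never network-wide.
    return zone in ZONE_ACCESS.get(session.user, set())
```

In a castle-and-moat design, the equivalent check would run once at login and then be skipped for every later request, which is the gap an attacker exploits after getting in.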

However, the federal government says no more than the following on the subject of zero trust: “To facilitate this approach, the migration to cloud technology shall adopt zero-trust architecture, as practicable.” The statement is vague and non-prescriptive. Since zero trust is fundamentally a security principle, it could be developed in a number of ways through a number of different strategies; the phrase “zero trust” does not actually define how such a system is implemented. The federal government must formulate how such networks should be built.

The software-defined network. One method of defining zero-trust implementations is to specify how data should be treated in the network, an approach directly aligned with what’s known as software-defined networks. These networks enable administrators to define, through software, policies (or rules) that control the network and its data flow to enhance security. As opposed to traditional, hardware-based networking paradigms, software-defined networks give network administrators greater control over, and visibility into, the network. Data is sent over networks in small segments called “packets.” Like an envelope containing a letter, network packets also possess labels called “metadata” that convey information about the packet’s content, such as the network protocol used or the originating IP address of the data. Software-defined networks can read a packet’s metadata. This ultimately allows network administrators to create policies that decide, based on incoming packets’ metadata, how different packets should be routed or whether a packet should be removed from the network.
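As a rough illustration of metadata-based policy, the Python sketch below checks a packet's metadata against an ordered list of rules and returns a decision to forward or drop it. It is a simplified model under stated assumptions, not the API of any real software-defined networking controller: the `PacketMetadata` and `Rule` types, the subnets, and the port number are all hypothetical. The default action is to drop, reflecting the zero-trust stance that traffic is refused unless a rule explicitly allows it.

```python
from dataclasses import dataclass
from ipaddress import ip_address, ip_network
from typing import List, Optional


@dataclass(frozen=True)
class PacketMetadata:
    """The 'envelope label' of a packet (hypothetical, simplified fields)."""
    src_ip: str
    dst_ip: str
    protocol: str
    dst_port: int


@dataclass(frozen=True)
class Rule:
    action: str                     # "forward" or "drop"
    src_net: str                    # source subnet in CIDR notation
    dst_net: str                    # destination subnet in CIDR notation
    protocol: Optional[str] = None  # None matches any protocol
    dst_port: Optional[int] = None  # None matches any port

    def matches(self, pkt: PacketMetadata) -> bool:
        if ip_address(pkt.src_ip) not in ip_network(self.src_net):
            return False
        if ip_address(pkt.dst_ip) not in ip_network(self.dst_net):
            return False
        if self.protocol is not None and pkt.protocol != self.protocol:
            return False
        if self.dst_port is not None and pkt.dst_port != self.dst_port:
            return False
        return True


def decide(pkt: PacketMetadata, rules: List[Rule], default: str = "drop") -> str:
    """Return the action of the first matching rule, or the default (drop)."""
    for rule in rules:
        if rule.matches(pkt):
            return rule.action
    return default


# Hypothetical policy: only operator workstations (10.1.0.0/24) may reach the
# control subnet (10.2.0.0/24), and only over TCP port 502.
RULES = [Rule("forward", "10.1.0.0/24", "10.2.0.0/24", protocol="tcp", dst_port=502)]
```

With this policy, `decide(PacketMetadata("10.1.0.7", "10.2.0.20", "tcp", 502), RULES)` returns `"forward"`, while the same request from an unlisted subnet, or to any other port, falls through to the default and is dropped.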

Software-defined networks and their ability to create security policies at the fine-grained, software level can serve as the means to define and enforce zero-trust security policies. Such a zero-trust approach can ensure all users are authenticated and authorized in their requests. If Colonial Pipeline had implemented a software-defined network, it could have prevented the ransomware from propagating throughout its systems, as the network would not have authenticated the malicious software. No such measures were in place.

As with any technology, there are, of course, risks associated with software-defined networks. One need look no further than Russia to see how software policies that actively engage with data on a network could enable authoritarian regimes to control users’ access to content and information. A national-scale network with draconian policies about what can and cannot pass through it would enable the interception of user data. Because software-defined networks provide heightened, fine-grained control over what different users can access, national-scale software-defined networks in the hands of authoritarian governments enable censorship. That is why we propose that these systems be implemented only on US critical infrastructure networks that have a single purpose: to make sure the critical infrastructure operates as intended.

What the government should (and should not) do now. The US government should require critical infrastructure organizations to enforce zero-trust policies by using a software-defined network. It is only with such a prescriptive measure that we can substantially move the needle on improving critical infrastructure cybersecurity.

The Colonial Pipeline incident revealed that cybersecurity for critical infrastructure organizations is largely left to the companies themselves. Pipelines could turn down federal reviews. They weren’t obligated to fix known issues. That needs to change. Requiring the implementation of software-defined networks in accordance with zero-trust security paradigms would be an important step in that direction.

We do not foresee (or recommend) the United States setting up a software-defined network enforcing zero trust at the national level anytime soon. This would require an enormous amount of coordination; at a minimum, it would necessitate private sector companies transferring partial operations to federally operated infrastructure. Such a shift towards national control over all networks would likely upend the public’s trust in private companies, as all companies would become dependent on federally controlled assets. Some companies see their network security as a competitive advantage, and should the government take over networks, their investment would be wiped out. Critical infrastructure, however, certainly would benefit from software-defined networks, even if the government doesn’t mandate them.

DarkSide eventually restored Colonial Pipeline’s access to its network, and the company restarted its operations just days before the hack might have caused serious problems. But the company wasn’t able to entirely restore its data. Though the Justice Department recently seized $2.3 million of the ransom payment from DarkSide, the attack will likely cost the company tens of millions of dollars over the next few months as it repairs its networks. The Colonial Pipeline attack wasn’t as bad as it could have been. Will the same be true next time?

Keywords: critical infrastructure, ransomware, software-defined networks, zero trust

Topics: Cyber Security

Wrong answer. The first rule of process control computers in chemical plants, nuclear plants and pipelines is never connect to the internet. Updates come by secure mail delivery from the manufacturer. Access to process systems is kept in lock boxes. People connect to the internet to save money and because of the belief that it is the modern thing to do. Wrong answer. We want secure, fully traceable custody so that if something goes wrong, people get fired, people go to jail and companies providing software have massive financial losses.

Agreed. Critical infrastructure should be air gapped with associated physical security measures implemented. Zero trust policies and software defined networks should be a given within that disconnected context.