Why policy makers should beware claims of new ‘arms races’

By Haydn Belfield, Christian Ruhl | July 14, 2022

Claims of new “arms races” are everywhere these days. The world has supposedly entered a new space race, with Russian and Chinese anti-satellite weapons posing new threats to space-based infrastructure. The United States and China are also, allegedly, in an AI race, competing to build more powerful artificial intelligence systems. Let’s not forget the cyber arms race. Oh, and the hypersonic weapons race.

Under the specter of renewed great power competition, fears of a new arms race or an adversary’s imminent technological superiority are common. Vigilance is critical; an aggressive Chinese Communist Party and a revisionist Russia are both very real threats to the world—as Uyghurs and Ukrainians alike know painfully well—and the United States must remain prepared against expansionist authoritarian regimes.

Nonetheless, policy makers should examine new claims of a “race” in critical technologies dispassionately and rationally and beware of suboptimal arming in response to claims of adversary capabilities. (In the words of political scientist Charles L. Glaser, suboptimal arming occurs when “the state’s decision to launch a buildup is poorly matched to its security environment.”) History—especially the history of nuclear competition—shows that such fears can be overblown and costly, and policy makers would do well to remember the cognitive and cultural biases that make people see threats where there are none.

Not all “sprints” for new military technologies are mistaken, and not all mistaken sprints are suboptimal or dangerous. There have, however, been mistaken sprints in the past, and American leaders, key scientists, and experts should be careful about mistaken sprints in the future. It is important not to be complacent, but instead to engage in a vigorous accumulation of intelligence about other states’ capabilities and intentions, and a sober assessment of that intelligence—without being swayed by alarmist voices. The consequences of not doing so could be catastrophic.

The missing “missile gap.” In the late 1950s, key US experts were convinced that they “were again in a desperate race with a powerful, totalitarian opponent,” wrote Daniel Ellsberg, a former RAND employee and presidential nuclear advisor, in his 2017 memoir The Doomsday Machine. They believed the Soviets had a lead in intercontinental ballistic missiles (ICBMs)—the “missile gap.” With that supposed advantage, the Soviets could blackmail the world or even launch a successful preemptive strike on the United States. So, these experts advocated for and participated in an ICBM sprint.

However, their fears were mistaken. The 1961 National Intelligence Estimate, as Ellsberg later revealed, calculated that “the Soviets had exactly four ICBMs, soft, liquid-fueled missiles at one site, Plesetsk. Currently we had about forty operational Atlas and Titan ICBMs […] the numbers were ten to one in our favor.” The United States’ sprint for ICBMs was therefore not necessary to deter the Soviet Union from using a supposed advantage to launch a first strike. Instead, the sprint hastened the advent of ICBMs and of the situation we face today, in which a president has only minutes to decide how to respond to a warning of an attack.

Dissenting opinions on the missile gap (for example, from the Army and Navy) were sidelined, and information on the actual state of the Soviet forces was kept secret for years. Better intelligence assessment could have delayed the development and deployment of these immensely destructive weapons. Later, key participants such as Ellsberg described their involvement as the greatest mistake of their lives.

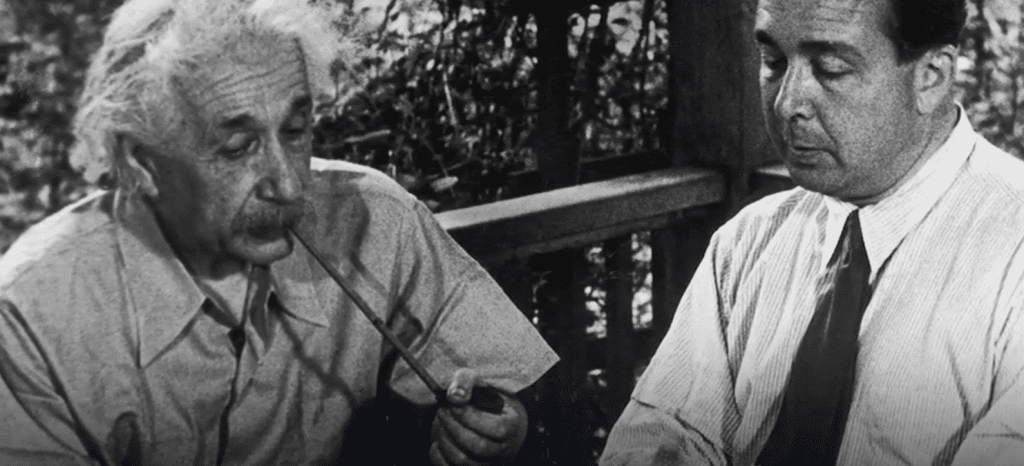

The nonexistent Nazi bomb. Nearly 20 years earlier, the key motivation for most of the scientists—like Albert Einstein, Enrico Fermi, and Leo Szilard—who advocated for and participated in the United States’ sprint for an atomic bomb was concern that the Nazis were themselves developing an atomic bomb. The Nazis could not be allowed a monopoly on such a destructive weapon.

However, these fears were also mistaken. We now know that, in June 1942, Hitler decided against a sprint. Armaments chief Albert Speer and nuclear physicists who worked on Germany’s bomb program thought it would take three to four years to deliver—too late to make a difference to the war. Moreover, the Nazis needed raw materials and manpower elsewhere for armaments production, so they faced real resource constraints.

The Manhattan Project did not need to, and did not in practice, deter Hitler from developing or using a nuclear weapon. Instead, this sprint hastened the development and deployment of nuclear weapons.

With their limited intelligence about the Nazi program, US nuclear scientists were surely right to advocate for and lead the sprint in 1942. But would these scientists have participated so vigorously had they known that no other state (Germany, Japan, or the Soviet Union) had a serious nuclear program during the war? Better intelligence about the Nazi regime in 1942 could have reduced the need for the Manhattan Project, freeing up vast resources (0.4 percent of the US gross domestic product in 1944) for other war production and delaying the advent of these immensely destructive weapons. Without the US sprint, the Soviets would not have been able to steal its secrets and build their own bomb so quickly. Later, key participants such as Einstein and Szilard described their involvement as the greatest mistake of their lives.

The “best and the brightest” of two previous generations mistakenly thought they were in a race, sprinted when they did not need to, and later regretted it. The current generation of experts should not think itself immune to these dangers.

Misperceptions of AI. Unfortunately, some technologies seem more vulnerable to these dynamics than others. Software-based capabilities, for example, may be more difficult to verify than traditional military technologies.

As the AI expert Missy Cummings has shown, for example, misperceptions of technological advances could be especially insidious for artificial intelligence. As Cummings explains, AI advances are relatively easy for an adversary to fake, but “such a pretense could then cause other countries to attempt to emulate potentially unachievable capabilities, at great effort and expense. Thus, the perception of AI prowess may be just as important as having such capabilities.”

We can see this perception, for example, in some of the frothier warnings about an “AI gap” with China that have made the rounds in Washington. In reality, the United States and its allies and partners continue to dominate the semiconductor supply chain, high-impact AI research, and AI talent (more than 85 percent of Chinese PhD students in the United States intend to, and do, stay in the country after graduation).

US experts should not be complacent about this lead, but they do not need to panic. Policy makers should remember the lessons of the past, and approach new claims of technological superiority with care, and perhaps a healthy dose of skepticism.

Keywords: Manhattan Project, arms race, artificial intelligence, missile gap

Topics: Artificial Intelligence, Nuclear Weapons, Opinion, Voices of Tomorrow

Good article. If you state that you are in a “race”, you play the same game as the adversary. It is often a better idea to play a different game. Einstein was wrong when he thought that if the Nazis get the bomb, we must have it. There were at least two better approaches, both of which were used. One was to win the war before the German program could deliver. Another was to sabotage it, which was done with some success against heavy water in Norway, but could have been pursued more broadly. It was very easy to pinpoint…

There are plenty more examples where arms control rather than arms racing might have made the world safer: forward-deployed tactical nuclear weapons, thermonuclear weapons, MIRVs, intermediate-range ballistic missiles, missile defense. No one seems to be able to play chess thinking two moves ahead.

85 percent of Chinese PhD students remaining in the U.S. may not be something to crow about, given that all manner of espionage may exponentially be at play. Consider why the NSA was created to begin with. It’s easier to hack on site than halfway around the world. The world has always been filled with people who truly believed they knew best and that all others were ignorant or misguided. Failure of imagination has doomed individuals, corporations, and nations alike. This article has plenty of food for thought, but never dismiss outright that a genial demeanour is benign or that one’s personal motives…