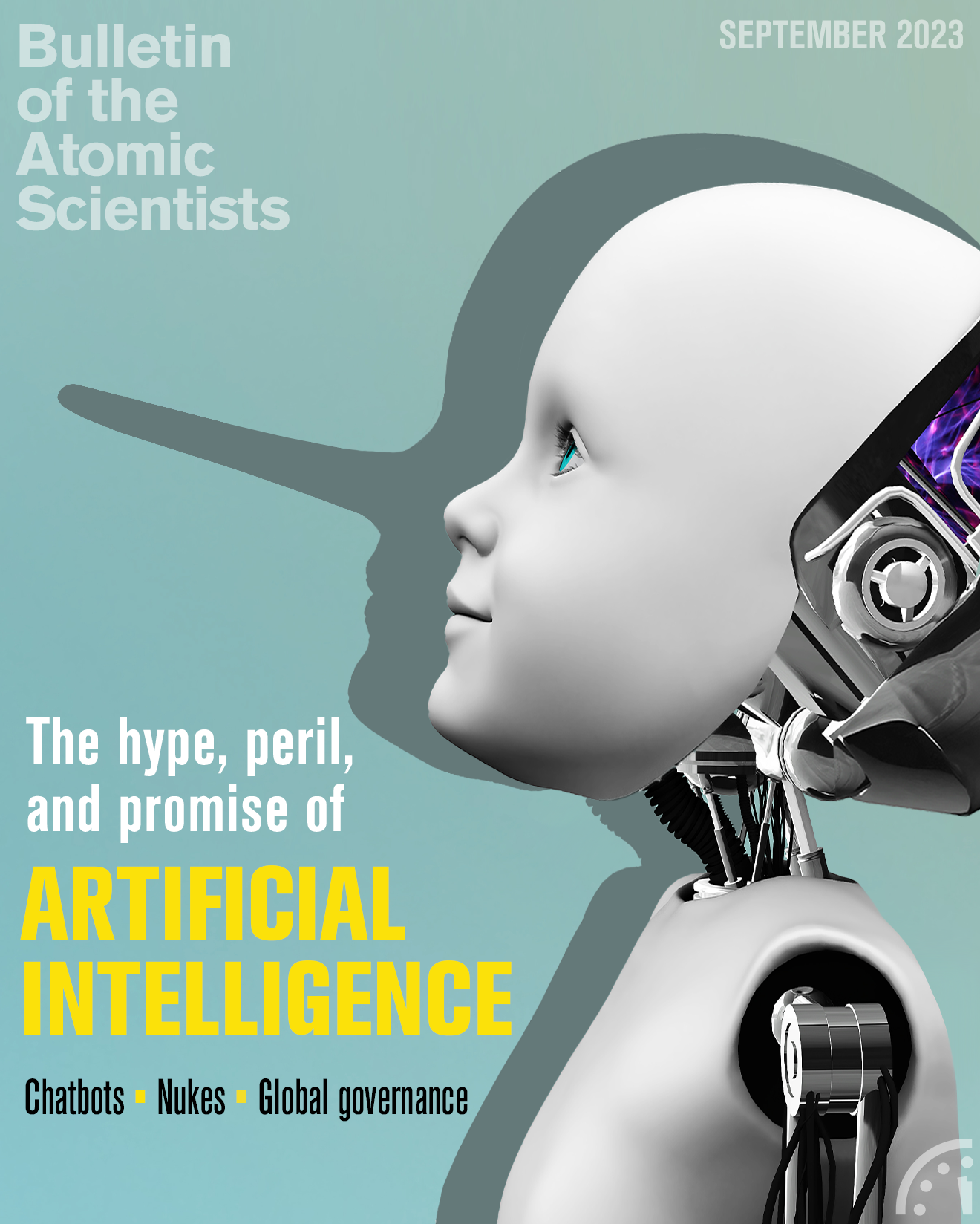

Introduction: The Hype, Peril, and Promise of Artificial Intelligence

By John Mecklin | September 11, 2023

Editor’s note: This introduction was written by ChatGPT-3.5 at my prompting, and edited only very lightly. It’s not as thorough or nuanced as the intro I would’ve written. But time was short, we were past the deadline for submitting copy for this issue, and … ok, I confess. I used invented time pressure and a cliché—watch this AI introduce a magazine about artificial intelligence!—to clumsily illustrate that chatbots will be used, because what they produce is sometimes good enough to be useful. The chief outstanding questions are twofold: How will AI be used? And can it be managed, so the positives of its use far outweigh the negatives?

Dear readers,

In an era marked by the rapid advancement of technology, there is no doubt that artificial intelligence (AI) stands at the vanguard of innovation, captivating the imagination of nations and individuals alike. Its potential to revolutionize nearly every aspect of our lives, from warfare and governance to the very essence of human nature, is both awe-inspiring and daunting. As we navigate through these uncharted waters, the September issue of the Bulletin of the Atomic Scientists sets its gaze firmly on the complex interplay between humanity and AI, exploring the hype, peril, and promise that surround this transformative force.

We kick off the issue with an insightful interview in which editor in chief John Mecklin speaks with Paul Scharre, an expert on emerging military technologies. Scharre brings into sharp focus the intricate dynamics of global power in the age of AI, where technology reshapes the contours of geopolitical influence.

As we marvel at AI’s capabilities, it is vital to remember that humans are the architects of this evolving landscape. In their thought-provoking article, Northwestern University management experts Moran Cerf and Adam Waytz urge us to shift our gaze from AI-induced fears to the potential harm that humans themselves may perpetrate. They compellingly argue that our focus should be on managing the intentions and actions of our own species, as we tread cautiously with the powerful tools AI affords us.

However, not all AI claims hold up to scrutiny. Bulletin disruptive technologies editor Sara Goudarzi takes on the task of deflating the chatbot hype balloon, cutting through the hyperbole to reveal the true potential and limitations of these automated conversational agents. In a complementary piece, Dawn Stover delves into the psychological impact of interacting with chatbots, probing the question of whether these digital entities will ultimately drive us to the brink of madness.

AI’s global impact demands thoughtful and coordinated governance. Existential risk researcher Rumtin Sepasspour, in a thought-provoking exploration, contemplates what a harmonious international governance framework for AI should look like. As we grapple with the challenges of regulating AI across borders, Sepasspour sheds light on potential avenues for global cooperation and responsible AI development.

Moreover, the integration of AI with nuclear material production is an area of significant concern and potential. In their fascinating article, international security researchers Jingjie He and Nikita Degtyarev illuminate the extraordinary ways AI is revolutionizing this critical domain. As the intersection of AI and atoms emerges, we must tread with discernment and responsibility to harness this innovation for the greater good.

Throughout these pages, we confront the multifaceted dimensions of AI with an eye toward understanding its complexities, its impact on society, and its implications for global security. We thank our distinguished authors for their contributions to this vital conversation. We invite you to dive into this compelling collection of articles and, as always, encourage you to engage in the discourse sparked by these pressing matters.

Sincerely,

ChatGPT-3.5, on behalf of John Mecklin, editor-in-chief, Bulletin of the Atomic Scientists