Concerned about facial recognition AI? What about behavior recognition software?

By Matt Field | March 13, 2019

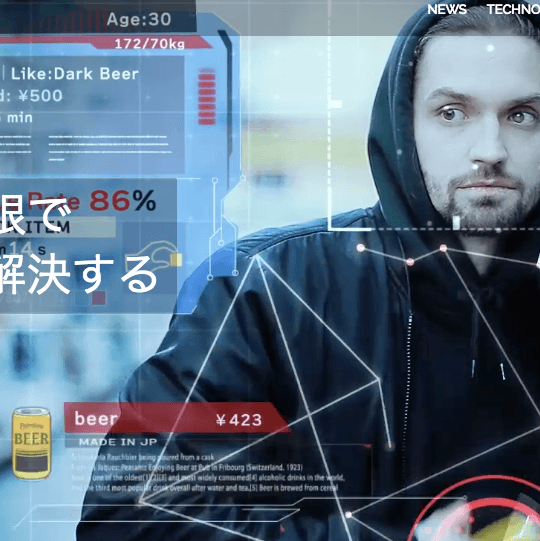

A screenshot from a video on Vaak's website. Credit: Vaak.

A young woman picks up and compares juices in a store aisle. She’s 29. She spends 40 minutes on average shopping and likes orange juice. That’s not all: She usually spends 2,500 yen per visit to the store.

The Japanese startup Vaak’s software knows a lot about the woman in a white shirt.

Most importantly, it knows that there is only a 4 percent chance of her doing something such as shoplifting.

Vaak is one of a growing number of companies across the globe developing AI-powered surveillance technology that analyzes body language to judge whether someone is behaving in a suspicious manner. (The technology also has important applications for autonomous cars; those systems need to know, for instance, what the intent of someone standing on a street corner is.)

But many companies envision the products as a security tool. While nary a month goes by without some press account on a troubling aspect of facial recognition technology, there’s been far less attention paid to the type of artificial intelligence that Vaak is developing.

Vaak’s software works by analyzing in-store security camera footage. In a promotional video, the software not only identifies the 29-year-old juice lover but also zeroes in on a shifty character in a hoodie who may be contemplating shoplifting. A floating tag next to the suspicious man’s face identifies him as a 30-year-old who usually spends only 500 yen (about $5) in the store. A high-tech-looking array of dots and lines shimmers across his frame as he peeks down an aisle; presumably it is measuring the man’s movements and looking for signs of nefarious intent: fidgeting, restlessness, and suspicious body language. After a quick glance to make sure the coast is clear, hoodie guy pockets a can of beer. Vaak clocks him as having an “86-percent” suspicious rate.

According to the Bloomberg article “These cameras can spot shoplifters even before they steal,” Vaak is testing its software in several locations in the Tokyo region. After a real-life theft during a practice run of the technology at a test store in nearby Yokohama, Vaak reportedly helped authorities arrest a shoplifter. The company’s founder, Ryo Tanaka, told Bloomberg about the breakthrough moment for the company: “We took an important step closer to a society where crime can be prevented with AI.”

And Vaak is not the only one pursuing this type of body language profiling approach. Wrnch, a Canadian company, uses “synthetic” humans, similar to those that a videogame designer might create, to train the company’s system to recognize behaviors. In addition to Vaak and wrnch, companies in England and Israel, at least, are also working on similar technology.

At first glance, this approach appears different from the one taken by facial recognition developers, who rely on massive data sets of photos of actual people and whose technology has received a torrent of criticism in recent months. (The American Civil Liberties Union found that Amazon’s Rekognition facial recognition software falsely matched members of the US Congress, disproportionately members of minority groups, with photos in a mug-shot database.)

But behavior recognition software may be no better in this regard. Just as a potentially racially biased facial recognition system could flag a person for police attention, could biased software deem someone moving in a “fidgety” manner as suspicious on dubious grounds?

Kind of all makes one want to go “Vaak.”

Publication Name: Bloomberg

Keywords: Vaak, security cameras, wrnch

Topics: Artificial Intelligence, Disruptive Technologies, What We’re Reading

Members of US Congress seen recognized as shifty! How awful. Surely you jest.

By now everyone knows elected democracy brings out ACTORS. It’s an acting job.