The brain-computer interface is coming

and we are so not ready for it

By PAUL TULLIS

September 15, 2020

If you were the type of geek, growing up, who enjoyed taking apart mechanical things and putting them back together again, who had your own corner of the garage or the basement filled with electronics and parts of electronics that you endlessly reconfigured, who learned to solder before you could ride a bike, your dream job would be at the Intelligent Systems Center of the Applied Physics Laboratory at Johns Hopkins University. Housed in an indistinct, cream-colored building in a part of Maryland where you can still keep a horse in your back yard, the ISC so elevates geekdom that the first thing you see past the receptionist’s desk is a paradise for the kind of person who isn’t just thrilled by gadgets, but who is compelled to understand how they work.

It’s called the Innovation Lab, and it features at least six levels of shelving that hold small unmanned aerial vehicles in various stages of assembly. Six more levels of shelves are devoted to clear plastic boxes of the kind you’d buy at The Container Store, full of nuts and bolts and screws and hinges of unusual configurations. A robot arm is attached to a table, like a lamp with a clamp. The bottom half of a four-wheeled robot the size of a golf cart has rolled up next to someone’s desk. Lying about, should the need arise, are power tools, power supplies, oscilloscopes, gyroscopes, and multimeters. Behind a glass wall you can find laser cutters and 3D printers. The Innovation Lab has its own moveable, heavy-duty gantry crane. You would have sufficient autonomy, here at the Intelligent Systems Center of the Applied Physics Laboratory at Johns Hopkins University, to not only decide that your robot ought to have fingernails, but to drive over to CVS and spend some taxpayer dollars on a kit of Lee Press-On Nails, because, dammit, this is America. All this with the knowledge that in an office around the corner, one of your colleagues is right now literally flying a spacecraft over Pluto.

The Applied Physics Laboratory is the nation’s largest university-affiliated research center. Founded in 1942 as part of the war effort, it now houses 7,200 staff working with a budget of $1.52 billion on some 450 acres in 700 labs that provide 3 million square feet of geeking space. When the US government—in particular, the Pentagon—has an engineering challenge, this lab is one of the places it turns to. The Navy’s surface-to-air missile, the Tomahawk land-attack missile, and a satellite-based navigation system that preceded GPS all originated here.

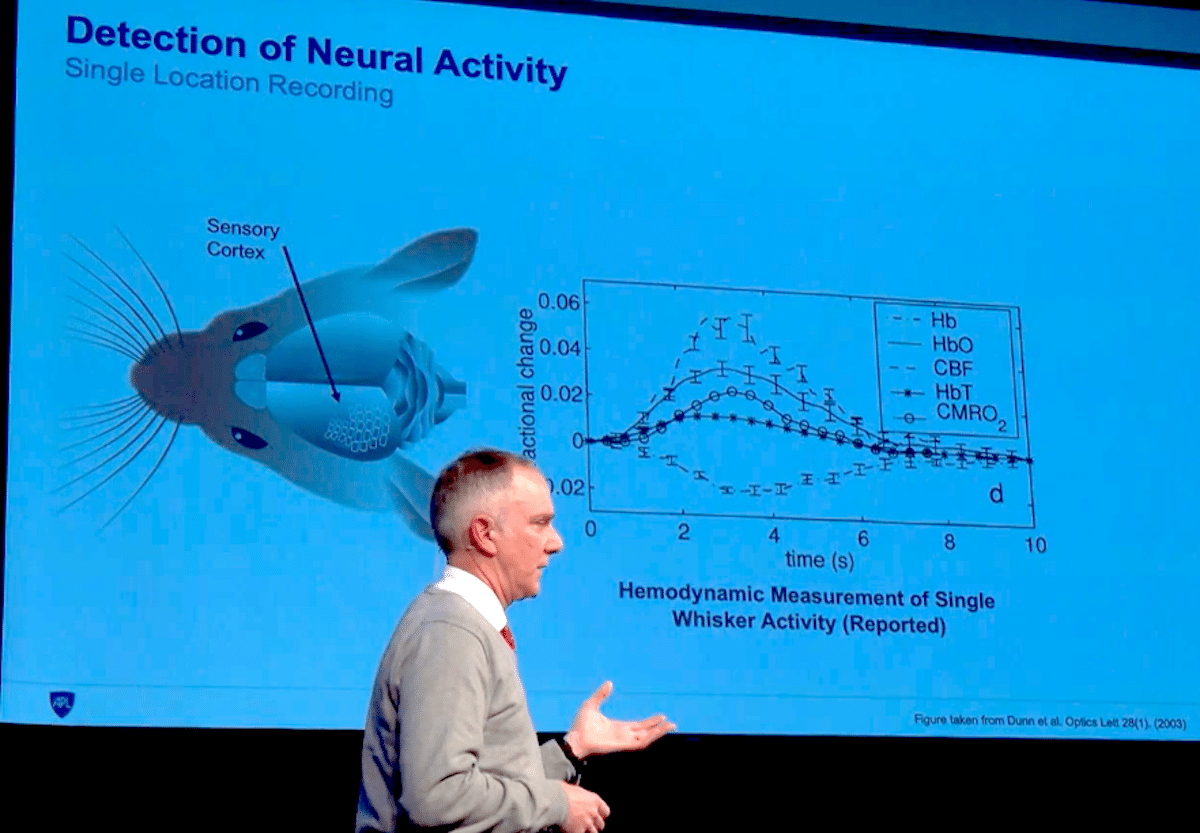

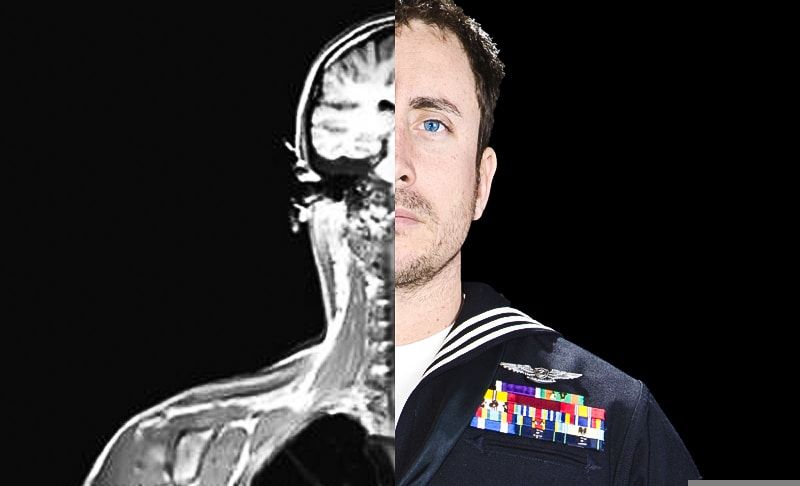

In 2006, the Defense Advanced Research Projects Agency (DARPA), an arm of the Pentagon with a $3.4 billion budget and an instrumental role in developing military technology, wanted to rethink what was possible in the field of prosthetic limbs. Wars in Iraq and Afghanistan were sending home many soldiers with missing arms and legs, partly because better body armor and improved medical care in the field were keeping more of the severely wounded alive. But prosthetics had not advanced much, in terms of capabilities, from what protruded from the forearm of Captain Hook. Some artificial arms and legs took signals from muscles and converted them into motion. Still, Brock Wester, a biomedical engineer who is a project manager in the Intelligent Systems Group at APL, said, “it was pretty limited in what these could do. Maybe just open and close a couple fingers, maybe do a rotation. But it wasn’t able to reproduce all the loss of function from the injury.”

The Applied Physics Laboratory brought together experts in micro-electronics, software, neural systems, and neural processing, and over 13 years, in a project known as Revolutionizing Prosthetics, they narrowed the gaps and tightened the connections between the next generation of prosthetic fingers and the brain. An early effort involved a technique called targeted muscle reinnervation. In a surgical procedure, nerves that once controlled muscle in the lost limb were rerouted to connect to muscle remaining in a residual segment of the limb or to a nearby location on the body, allowing the user to perceive motion of the prosthetic as naturally as he or she had perceived the movement of his or her living limb.

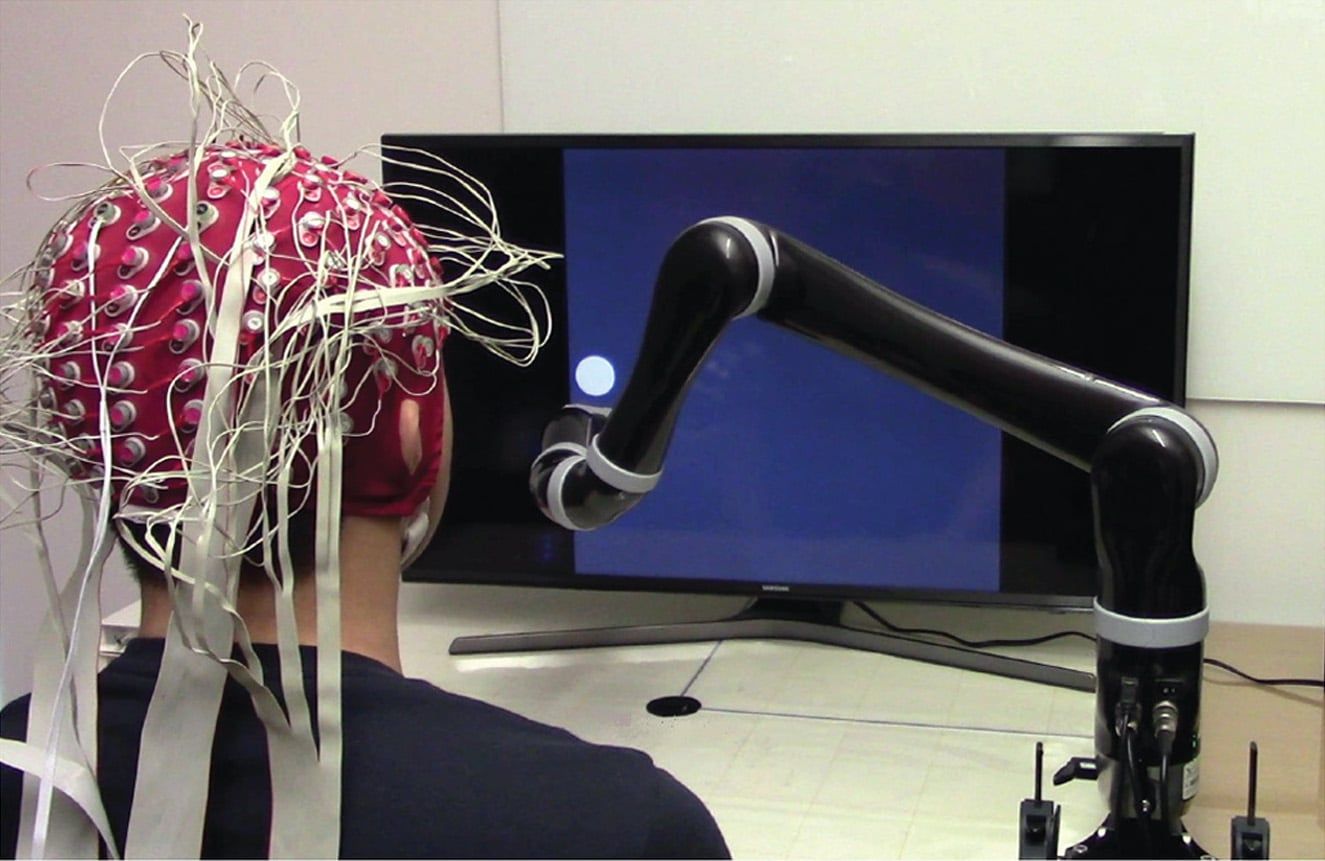

Another side of the Revolutionizing Prosthetics effort was even more ambitious. People who are quadriplegic have had the connection between their brains and the nerves that activate their muscles severed. Similarly, those with the neuromuscular disorder amyotrophic lateral sclerosis cannot send signals from their brains to the extremities and so have difficulty making motions as they would like, particularly refined motions. What if technology could connect the brain to a prosthetic device or other assistive technology? If the lab could devise a way to acquire signals from the brain, filter out the irrelevant chaff, amplify the useful signal, and convert it into a digital format so it could be analyzed using an advanced form of artificial intelligence known as machine learning—if all that were accomplished, a paralyzed person could control a robotic arm by thought.
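The chain of steps described above (acquire a signal, filter out the chaff, amplify what remains, digitize it, and hand it to an algorithm) can be illustrated with a toy simulation. Everything in it is an assumption for illustration, not APL’s implementation: the synthetic trace, the first-order high-pass filter standing in for “filtering out the chaff,” the gain of 1,000, the 12-bit converter, and the mean-squared “band power” feature a decoder might consume. Real recording systems typically amplify in analog hardware before digitizing; here the steps simply follow the order in the paragraph.

```python
import math
import random


def high_pass(samples, alpha=0.95):
    """First-order IIR high-pass filter (illustrative choice of alpha).

    Suppresses slow drift, the 'chaff', while passing faster activity.
    """
    out = []
    prev_x, prev_y = samples[0], 0.0
    for x in samples:
        y = alpha * (prev_y + x - prev_x)
        out.append(y)
        prev_x, prev_y = x, y
    return out


def amplify(samples, gain=1000.0):
    """Scale microvolt-level signals up by an assumed fixed gain."""
    return [gain * s for s in samples]


def digitize(samples, bits=12, full_scale=1.0):
    """Quantize to signed integer codes, clipping at +/- full_scale."""
    levels = 2 ** (bits - 1) - 1
    codes = []
    for s in samples:
        clipped = max(-full_scale, min(full_scale, s))
        codes.append(round(clipped / full_scale * levels))
    return codes


def band_power(codes):
    """Mean squared amplitude: one simple feature a decoder could use."""
    return sum(c * c for c in codes) / len(codes)


def preprocess(raw_volts, gain=1000.0, bits=12):
    """Filter, amplify, then digitize, in the order the text describes."""
    return digitize(amplify(high_pass(raw_volts), gain), bits=bits)


def synthetic_trace(active, n=1000, fs=1000.0, seed=0):
    """Hypothetical 1-second trace: slow drift + noise, plus fast activity if active."""
    rng = random.Random(seed)
    trace = []
    for i in range(n):
        t = i / fs
        drift = 200e-6 * math.sin(2 * math.pi * 1.0 * t)  # 1 Hz, 200 microvolts
        fast = 50e-6 * math.sin(2 * math.pi * 100.0 * t) if active else 0.0
        noise = rng.gauss(0.0, 5e-6)
        trace.append(drift + fast + noise)
    return trace


if __name__ == "__main__":
    quiet = band_power(preprocess(synthetic_trace(active=False)))
    active = band_power(preprocess(synthetic_trace(active=True)))
    print(f"quiet window: {quiet:.0f}   active window: {active:.0f}")
```

Because both traces share the same drift and noise, the difference in band power comes from the fast component that survives the filter, which is the kind of contrast a machine-learning decoder would then learn to exploit.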

Watch the Bulletin's virtual program with Paul Tullis and learn more about the progress and potential pitfalls of the brain computer interface.

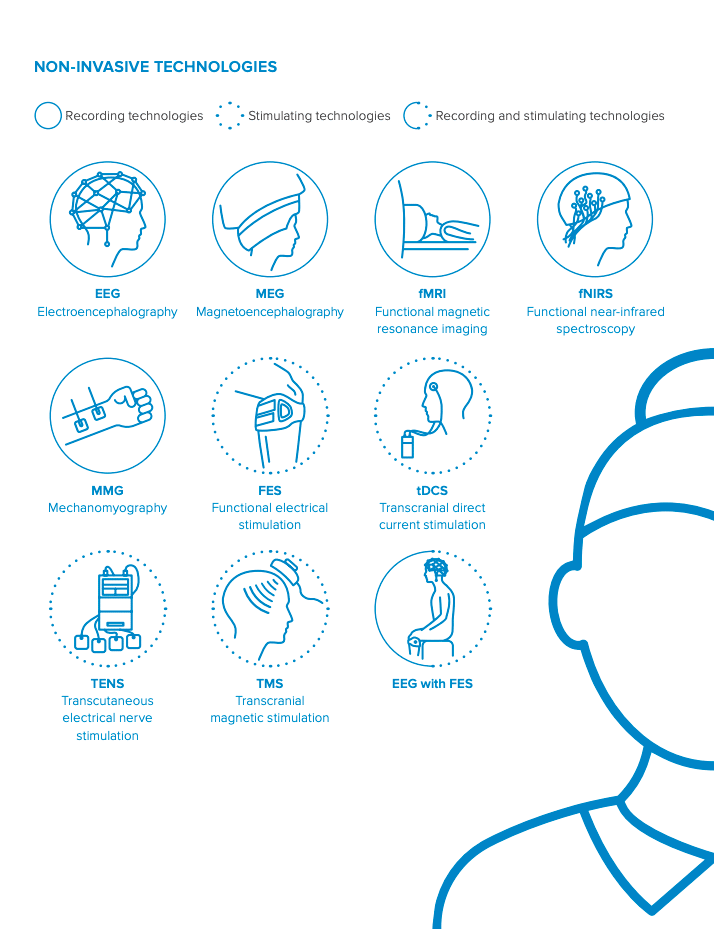

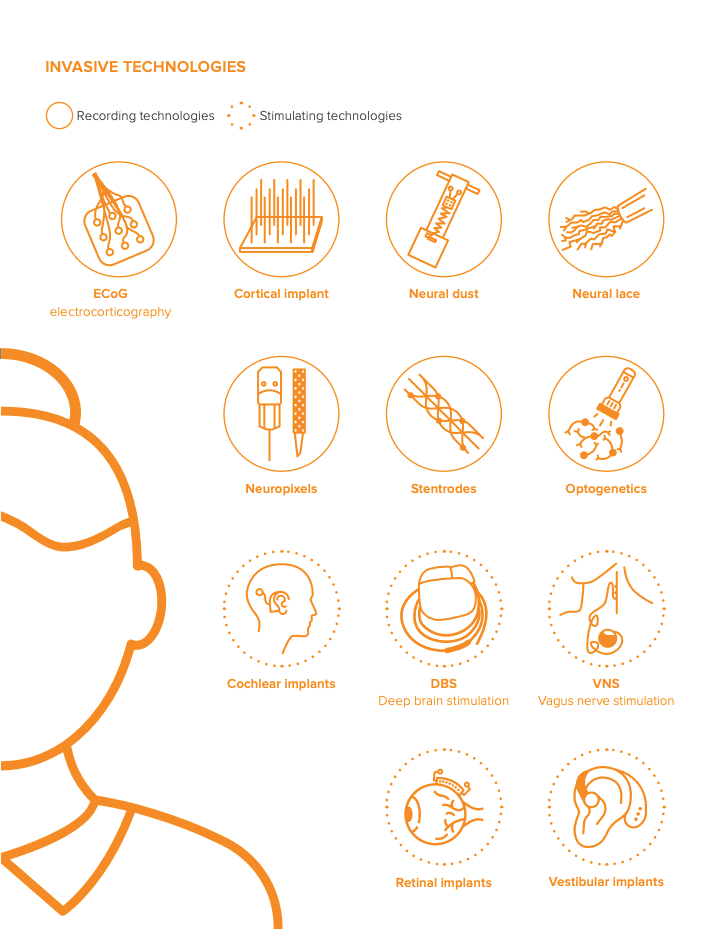

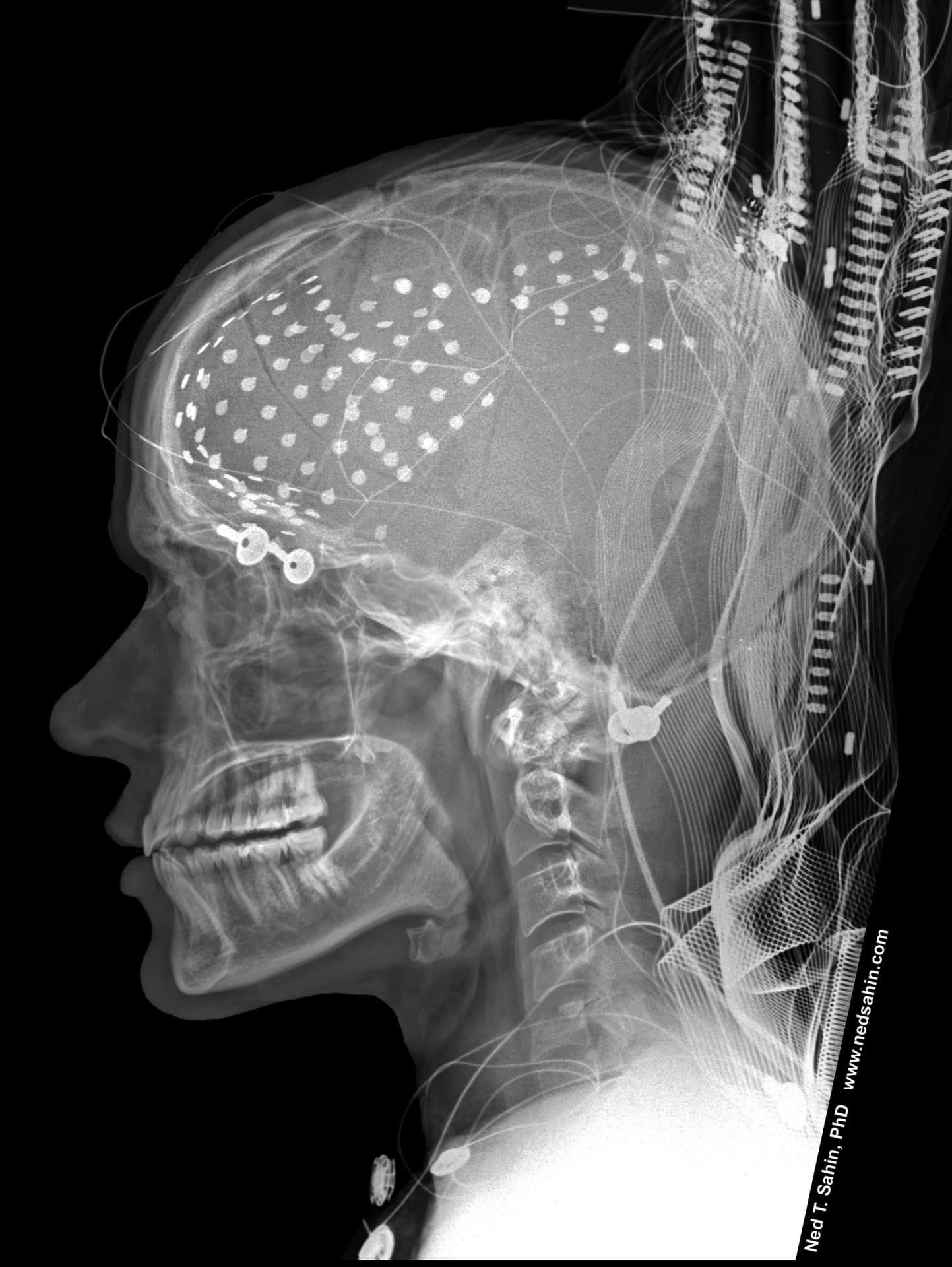

Researchers have tested a variety of so-called “brain-computer interfaces” in the effort to make the thinking connection from human to machine. One method involved electrocorticography (ECoG), a sort of upgrade from the electroencephalography (EEG) used to measure brain electrical activity during attempts to diagnose tumors, seizures, or stroke. But EEG works through sensors arrayed outside the skull; for ECoG, a flat, flexible sheet is placed onto the surface of the brain to collect activity from a large area immediately beneath the sensor sites.

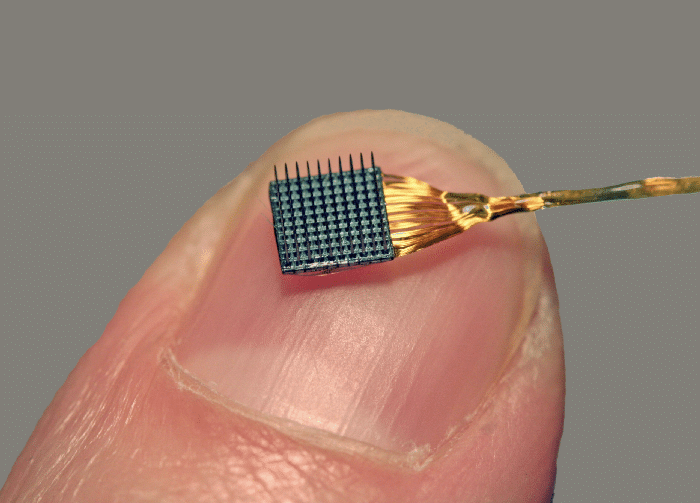

Another method of gathering electrical signals, a micro-electrode array, also works under the skull, but instead of lying on the surface of the brain, it projects into the organ’s tissue. One of the most successful forms of neural prosthetic yet devised involves a device of this type that is known as a Utah array (so named for its place of origin, the Center for Neural Interfaces at the University of Utah). Less than a square centimeter in size, it looks like a tiny bed of nails. Each of the array’s 96 protrusions is an electrode, up to 1.5 millimeters long, that can record signals from nearby neurons and deliver signals to them.

In this 1965 experiment, electrodes placed in a young bull's brain allowed Yale University neurophysiologist José Delgado to control its movement via radio transmitter. (The March of Time / Getty Images)

The Pentagon’s interest in fusing humans with computers dates back more than 50 years. J.C.R. Licklider, a psychologist and a pioneer of early computing, wrote a paper proposing “Man-Computer Symbiosis” in 1960; he later became head of the Information Processing Techniques Office at DARPA (then known as ARPA). Even before that, a Yale professor named José Delgado had demonstrated that by implanting electrodes in the brain, he could control some behaviors in mammals. (He most famously demonstrated this with a charging bull.) After some false starts and disappointments in the 1970s, DARPA’s Defense Sciences Office had, by 1999, embraced the idea of augmenting humans with machines. At that time, it boasted more program managers than any other division at the agency. That year, the Brain Machine Interface program was launched with the aim of enabling service members to “communicate by thought alone.” Since then, at least eight DARPA programs have funded research aimed at restoring memory, treating psychiatric disorders, and more.

Coined by UCLA computer scientist Jacques Vidal in the 1970s, “brain-computer interface” is one of those terms, like “artificial intelligence,” that is used to describe increasingly simple technologies and abilities the more the world of commerce becomes involved with it. (The term is used synonymously with “brain-machine interface.”) Electroencephalography has been used for several years to train patients who have suffered a stroke to regain motor control and more recently for less essential activities, such as playing video games.

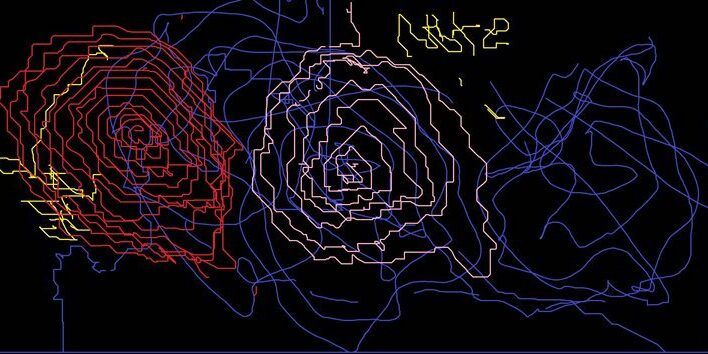

EEG paints only broad strokes of brain activity, like a series of satellite images over a hurricane that show the storm moving but not the formation and disruption of individual clouds. Finer resolution can be achieved with electrocorticography, which has been used to locate the short-circuits that cause frequent seizures in patients with severe epilepsy. Where EEG can show the activity of millions of neurons, and ECoG gives aggregate data from a more targeted set, the Utah array reads the activity of a few (or even individual) neurons, enabling a better understanding of intent, though from a smaller area. Such an array can be implanted in the brain for as long as five years, maybe longer.

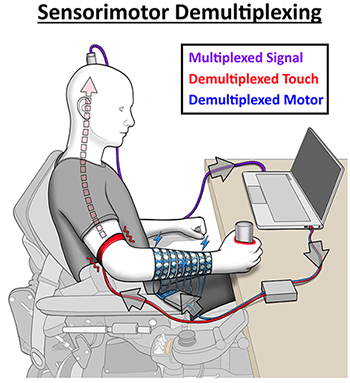

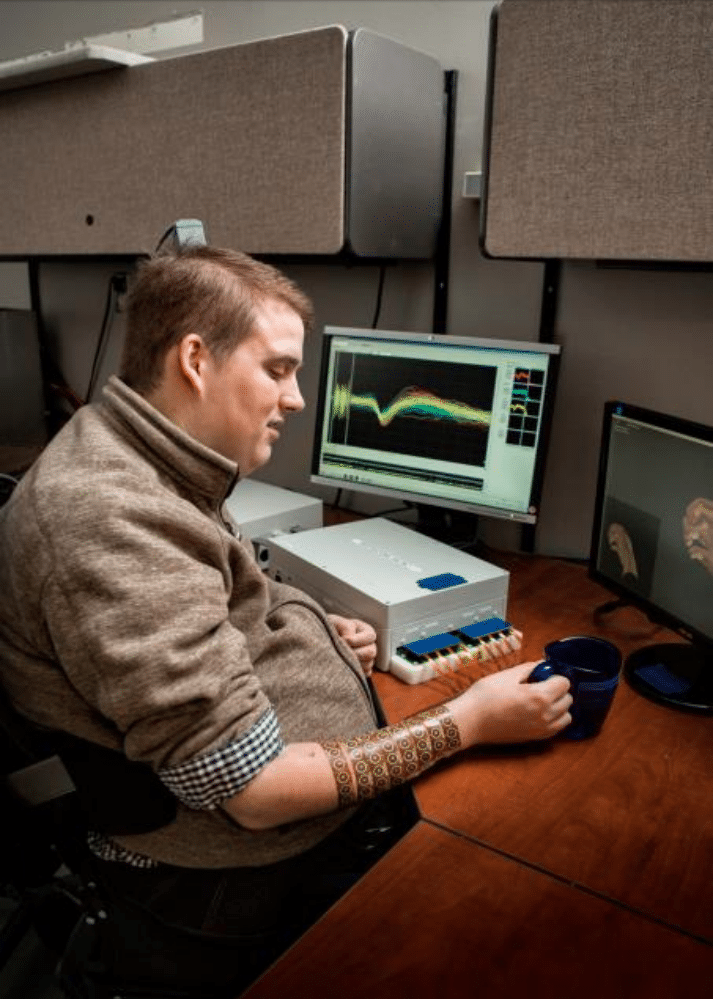

People with severe hypokinetic movement disorders who have had the Utah array—um—installed can move a cursor on a screen or a robotic arm with their thoughts. In August I met Ian Burkhart, one of the first people in the world to regain control of his own muscles through a neural bypass. He was working with scientists on a new kind of brain-computer interface that Battelle, a nonprofit applied science and technology company in Columbus, Ohio, is trying to develop with doctors at Ohio State University’s Wexner Medical Center.

Once you get past the glass-enclosed atrium, the cafeteria, and the conference rooms and enter the domain where the science takes place, Battelle imparts the feeling of a spaceship as depicted in one of the early Star Wars movies: Long, windowless, beige hallways with people moving purposefully through them, and wireless technology required to gain admittance to certain areas. An executive led me to a room where Burkhart was undergoing experiments. In an experiment published in Nature four years ago, after months of training and half an hour or so of setup for each session, he could get the Utah array implanted in his motor cortex to record his intentions for moving his arm and hand. A cable connected to a pedestal sticking out of his skull sends the data to a computer, which decodes it using machine-learning algorithms and sends signals to electrodes in a sleeve on Burkhart’s arm. They activate his muscles to perform the intended motion, such as grasping, lifting, and emptying a bottle or swiping a credit card in a peripheral plugged into his laptop. “The first time I was able to open and close my hand,” Burkhart told me, “I remember distinctly looking back to a couple people in the room, like, ‘Okay, I want to take this home with me.’”
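To make “decodes it using machine-learning algorithms” a little more concrete, here is a deliberately simple sketch of the decoding step. It is not the method used by the Battelle and Ohio State team; it swaps in a nearest-centroid classifier, one of the most basic machine-learning techniques. The movement labels, the simulated firing rates for each of the Utah array’s 96 electrodes, the noise level, and the `SLEEVE_PATTERNS` mapping from decoded intent to sleeve electrode channels are all hypothetical.

```python
import math
import random

N_CHANNELS = 96  # one firing-rate feature per Utah-array electrode

# Hypothetical mapping from decoded intent to sleeve electrode channels.
SLEEVE_PATTERNS = {
    "rest": [],
    "open_hand": [1, 2],
    "close_hand": [5, 6, 7],
}


def centroid(vectors):
    """Average a list of equal-length feature vectors channel by channel."""
    n = len(vectors)
    return [sum(v[i] for v in vectors) / n for i in range(len(vectors[0]))]


def train(trials):
    """trials maps each movement label to a list of firing-rate vectors."""
    return {label: centroid(vecs) for label, vecs in trials.items()}


def decode(model, rates):
    """Return the label whose centroid is nearest (Euclidean) to rates."""
    return min(model, key=lambda label: math.dist(model[label], rates))


def simulate_trials(labels, n_trials=20, noise=3.0, seed=1):
    """Invent a firing-rate 'tuning' per movement, then noisy training trials."""
    rng = random.Random(seed)
    tuning = {lab: [rng.uniform(5.0, 40.0) for _ in range(N_CHANNELS)]
              for lab in labels}
    trials = {
        lab: [[max(0.0, r + rng.gauss(0.0, noise)) for r in tuning[lab]]
              for _ in range(n_trials)]
        for lab in labels
    }
    return tuning, trials


if __name__ == "__main__":
    labels = list(SLEEVE_PATTERNS)
    tuning, trials = simulate_trials(labels)
    model = train(trials)
    # A fresh, noisy trial in which the user intends to close the hand.
    rng = random.Random(42)
    new_trial = [max(0.0, r + rng.gauss(0.0, 3.0)) for r in tuning["close_hand"]]
    intent = decode(model, new_trial)
    print("decoded intent:", intent,
          "-> stimulate sleeve channels", SLEEVE_PATTERNS[intent])
```

Nearest-centroid is chosen here only because it is easy to read; practical decoders must cope with signals that drift from day to day, which is part of why each session required setup and calibration.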

(DARPA)

(US Army)