Artificial intelligence: challenges and controversies for US national security

By Stephen J. Cimbala, Lawrence J. Korb | June 9, 2023

The military applications of AI

Artificial intelligence (AI) has recently taken center stage in US public policy debates. Corporate technical experts and some public officials want to declare a temporary moratorium on AI research and development. Their concerns include the possibility that artificial intelligence will increase in capability faster than human controllers can understand or control it.

An autonomous AI technology that equaled or surpassed human cognition could redefine how we understand both technology and humanity, but there is no certainty as to whether or when such a “superintelligence” might emerge. Amid the uncertainty, the United States and other countries must consider the possible impact of AI on their armed forces and their preparedness for war fighting or deterrence. Military theorists, strategic planners, scientists and political leaders will face at least seven different challenges in anticipating the directions in which the interface between human and machine will move in the next few decades.

First, the education and training of military professionals will undergo a near revolution. Historical experiences of great commanders will no longer be available only from books and articles. We will be able to “project” future commanders backward into the AI version of battles fought by great captains and retroactively change the scenarios into “what ifs” or counterfactuals to further challenge students and instructors. What used to be called “war gaming” will ascend to a higher level of scenario building and deconstruction. Flexibility and agility will be the hallmarks of successful leaders who can master the AI-driven sciences of military planning, logistics and war fighting. Since the art of battle depends upon the combination of fire and maneuver supported by accurate intelligence, AI systems will brew the optimal combination of kinetic strikes supported by timely intelligence and prompt battle damage assessment. To be successful, political and military leaders will have to think fast, hit hard, assess rapidly, reconstitute for another punch, bob and weave—in essence, boxing in virtual reality.

Second, the human-machine interface will be transformed when current AI systems attain maturity and compete with, or surpass, certain aspects of human decision making, including memory storage, flexibility, and self-awareness. Subordinate commanders will find that they are “reporting” to an AI system that is serving as a surrogate for higher-level commanders who, in turn, will be accountable to their superiors for performance as measured by yet another AI system. AI systems will increasingly be linked across departments and other bureaucratic stovepipes, eventually crunching everybody into one colossal metaspace accessible only to expert technicians and the few very highest-ranking generals and admirals. Contractors will make fortunes providing AI systems tailored to the specific needs of the US Army, Navy, Air Force, Marines, and other components of the Department of Defense and other national security bureaucracies. Think of the first decades of institutional “computerization” in the government, but on steroids.

Third, the management of national and military intelligence will face formidable challenges from mature AI systems. AI will make it possible to collect even larger amounts of data than those already gathered and stored on government servers. Yet AI systems will also provide superior tools with which to analyze these collections for timely use by commanders and policy makers. Currently, decision makers are data collection-rich and analysis-poor. AI may support more strategic dives into massive databases to retrieve and collate information that could save lives or win battles. AI may also allow for faster interpretation of signals intelligence and other information and for a more rapid insertion of pertinent knowledge into the OODA (observe, orient, decide, and act) loop of decision making. AI will also challenge the US intelligence community's tradition of bureaucratic protectionism. Civilian leaders in the executive branch and Congress could use AI to obtain a clearer picture of which missions are being carried out by which agencies and which secrets are worth keeping and for how long.

Fourth, more wars will be information-related, in the broadest sense. As in the past, countries and terrorists alike will want to acquire intelligence for war fighting and deny it to their opponents. But as AI advances, future commanders and heads of state will have increased abilities to influence the views of reality held by their enemy counterparts. They will do this at two levels: by targeting top officials who are responsible for making policy, and by swaying mass public opinion in the target country.

AI and other instruments of influence can be used to manufacture false images (aka deepfakes), including fictitious but realistic political speeches and news reports concocted to deceive mass audiences. An AI-manufactured deepfake might, for example, depict President Biden meeting secretly with President Xi or President Putin for some nefarious purpose. Fake scenes of battle carnage or terrorist attacks could be superimposed on supposedly routine news coverage. As AI-enhanced fake news proliferates, credulous publics could be stirred into mass demonstrations. And political leaders will be specific targets for what the Soviets and now the Russians call “active measures” of strategic deception, enhanced by AI technologies. These measures include, but are not limited to, techniques of “reflexive control,” in which the enemy is persuaded to want to do what its opponent wants it to do.

Fifth and most certainly: AI will speed everything up, including decisions about the use of force and the application of force to particular targets. This means that the first wave of attacks in a war against a major power could focus on space and cyber assets. A state denied access to real-time information provided by satellites for launch detection, command and control, communications, navigation, and targeting will be immediately pushed backward into the preceding century as a military power. Accordingly, states will have to protect their space-based assets by launching large numbers of redundant satellites, by placing satellites in orbit to defend against terrestrial and space-based anti-satellite weapons (ASATs), and by developing capabilities for rendezvous and proximity operations for reconnaissance, threat assessment, and repair of satellites. Earth-to-space and space-to-Earth communications links will have to be protected and encrypted.

If war does break out in the AI age, the United States can also expect challenges to its defenses in the cyber realm. The major powers already have both offensive and defensive cyber capabilities and under “normal peacetime” conditions are probing one another’s information systems and supporting infrastructure. During a crisis or in wartime, cyberattacks would accelerate and become more intrusive, potentially decoupling one or more weapons, delivery systems, or command-control assets from their assigned chains of command. Attacks on civilian infrastructure, including by terrorists, are already common in cyberspace, and AI systems will only make them more sophisticated. Even more than attacks on space systems, cyberattacks might not leave a clear “footprint” identifying the attacker.

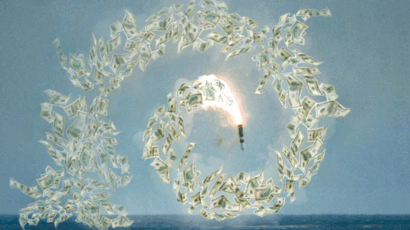

The option of absorbing a first blow and then retaliating may not be available to beleaguered and time-pressed decision makers. Instead, they will have to establish and authorize preset responses to attacks on information-providing “brainstem” assets, and some of those responses will be AI-based or at least AI-assisted. This situation poses an especially concerning challenge for nuclear deterrence. Policy makers should have sufficient time to deliberate alternatives with their advisors and to select the most appropriate option when faced with decisions for or against nuclear war. But the potential speed of AI-boosted attacks against space and cyber assets, together with the rising speed of kinetic strikes from hypersonic weapons, may lead leaders who fear an enemy nuclear first strike to choose preemption over retaliation.

Sixth, if AI does advance toward an artificial general intelligence that is more sentient and humanoid than is currently possible, it may evolve in directions not entirely foreseen by its creators—for good or ill. AI systems could adopt their own versions of emotional intelligence and value preferences. They might then collaborate across national boundaries to promote their preferred outcomes in public policy. For example, sentient AI systems could decide that nuclear disarmament was a necessary condition for the survival of a viable civilization and, notwithstanding the directives of their national policy makers, work to publicize the arguments for disarmament. They might also cooperate to discredit the arguments for nuclear deterrence and to insert digital wrenches into the software gears of nuclear preparedness. A more ambitious AI agenda on behalf of human rights and against authoritarian governments is not inconceivable. After all, the creators of AI systems may include dedicated globalists who regard existing states as outdated relics of a less advanced civilization. On the other hand, authoritarian governments are already exploiting AI systems to repress individual freedom, including rights of free expression and peaceful anti-government protest. AI can help to identify and isolate “enemies of the state” by omnivorous monitoring of private behavior and public acts. Advanced artificial intelligences could make mass repression easier and more effective than history’s worst dictators ever imagined.

Seventh, a more mature AI infrastructure will require more education and training for public officials, not only with respect to technology but also with regard to the relationship between public policy and human values. No artificial intelligence, however smart by its own standards, can be entrusted to substitute for decisions made by accountable policy makers with at least a decent background in the arts and humanities. Turning public policy decisions about AI over to those who are mesmerized by the latest gadgets and gaming systems, but who lack comprehension of philosophy, music, literature, religion, art, and history, is to be ruled by (with apologies to the ghost of Spiro Agnew) nattering nabobs of neocortical narcissism armed with unprecedented political power.

In the United States, public policy affecting a vast population should not be the exclusive province of a self-appointed elite of politicians, bureaucrats, scientists and media barons; it should also be disciplined by the involvement of a broad base of citizens. Without the adult supervision provided by a truly democratic decision-making process, elites create and re-create their own entitlements as endemic privileges, even if doing so destroys their own societies.

The US armed forces are elite organizations, but they are as subject to the vicissitudes of democratic awareness and public policy making as the other components of the US government. The American military has a great deal of public support and respect, but Americans are an impatient people within a future-oriented and success-driven culture. Americans are skeptical about wars and military commitments of long duration if favorable outcomes are not quickly forthcoming.

Advances in AI and in the other technologies it enables will only increase public expectations for quick and decisive resolutions to America’s wars. Doubtless AI and other technologies can improve the performance of US military forces in many regards, but there is no substitute for military forces whose training and experience are grounded not just in US military law, but also in the better aspects of American culture and history. Americans, including their armed forces, have adapted new technologies to good use in the past, without allowing the tech tail to wag the values dog. AI presents a unique challenge in this regard, but not an insurmountable one, if the challenge is tackled immediately.

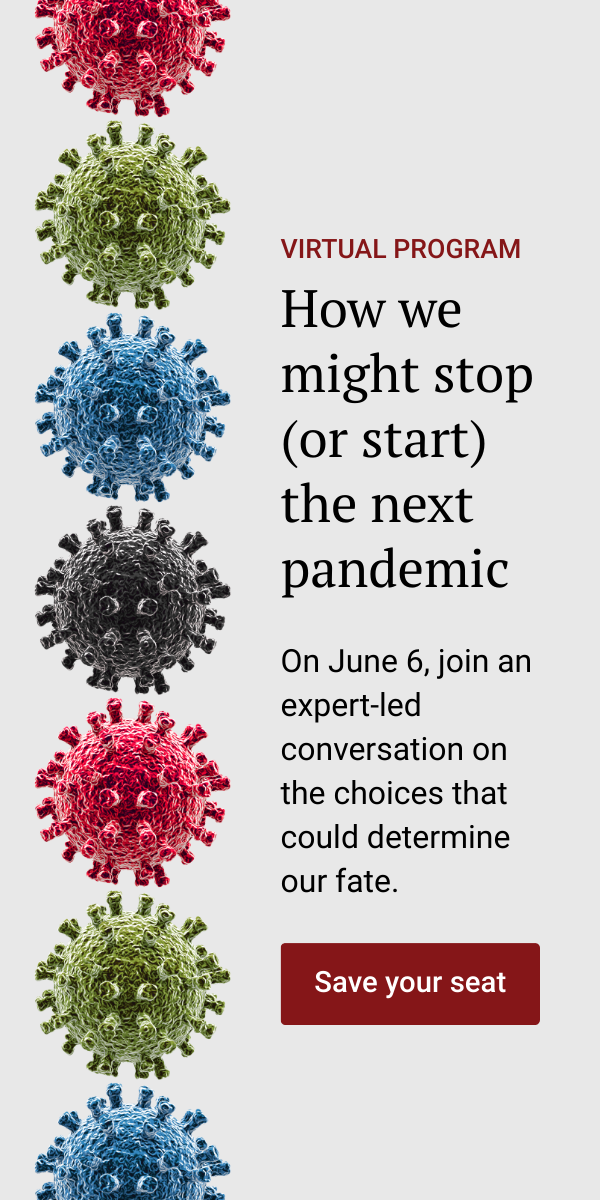

Together, we make the world safer.

The Bulletin elevates expert voices above the noise. But as an independent nonprofit organization, our operations depend on the support of readers like you. Help us continue to deliver quality journalism that holds leaders accountable. Your support of our work at any level is important. In return, we promise our coverage will be understandable, influential, vigilant, solution-oriented, and fair-minded. Together we can make a difference.
