AI and the future of warfare

US military officers can approve the use of AI-enhanced military technologies that they don't trust. That's a serious problem.

November 29, 2023

Many experts expect future warfare to be characterized by the use of technologies enhanced with artificial intelligence (AI), especially fully autonomous weapons systems. These capabilities, such as the US Air Force’s “Loyal Wingman” unmanned aerial vehicle or drone, can identify, track, and prosecute targets without human oversight. The recent use of lethal autonomous weapons systems in conflicts, including in Gaza, Libya, Nagorno-Karabakh, and Ukraine, poses important legal, ethical, and moral questions.

Despite their use in recent conflicts, it is still unclear how AI-enhanced military technologies may shift the nature and dynamics of warfare. Those most concerned about the military use of AI foresee a dystopian future, or “AI apocalypse,” in which machines mature enough to dominate the world. One policy analyst even predicts that lethal autonomous weapons systems “will lead to a seismic change in the world order far greater than that which occurred with the introduction of nuclear weapons.” Other observers question whether AI systems could realistically supplant humans, given the complexity of modelling biological intelligence through algorithms. Even if such an extension of AI were possible, militaries that rely on it would be encumbered by data and judgment costs that arguably “make the human element in war even more important, not less.”

While useful for discussing the potential effects of AI on global politics, these perspectives do not explain how AI may actually alter the conduct of war, or what soldiers think about it. To tackle this problem, I recently investigated how AI-enhanced military technologies, integrated at various decision-making levels and with various types of oversight, shape US military officers’ trust in these systems, which in turn informs their understanding of the trajectory of war. In the field of AI, trust is defined as the belief that an autonomous technology will reliably perform as expected in pursuit of shared goals.

To measure military trust in lethal autonomous weapons systems, I studied the attitudes of officers attending the US Army War College in Carlisle, Pennsylvania, and the US Naval War College in Newport, Rhode Island. These officers, from whose ranks the military will draw its future generals and admirals, will be responsible for managing the integration and use of emerging capabilities in future conflict. Their attitudes are therefore important to understanding the extent to which AI may shape a new age of war fought by “warbot” armies.

My research yields three key findings. First, officers trust AI-enhanced military technologies differently depending on the decision-making level at which they are integrated and the type of oversight applied to them. Second, officers can approve or support the adoption of AI-enhanced military technologies without trusting them, a misalignment of attitudes that has implications for military modernization. Third, officers’ attitudes toward AI-enabled capabilities can also be shaped by other factors, including their moral beliefs, concerns about an AI arms race, and level of education. Together, these findings provide the first experimental evidence of military attitudes toward AI in war, with implications for military modernization, policy oversight of autonomous weapons, and professional military education, including for nuclear command and control.

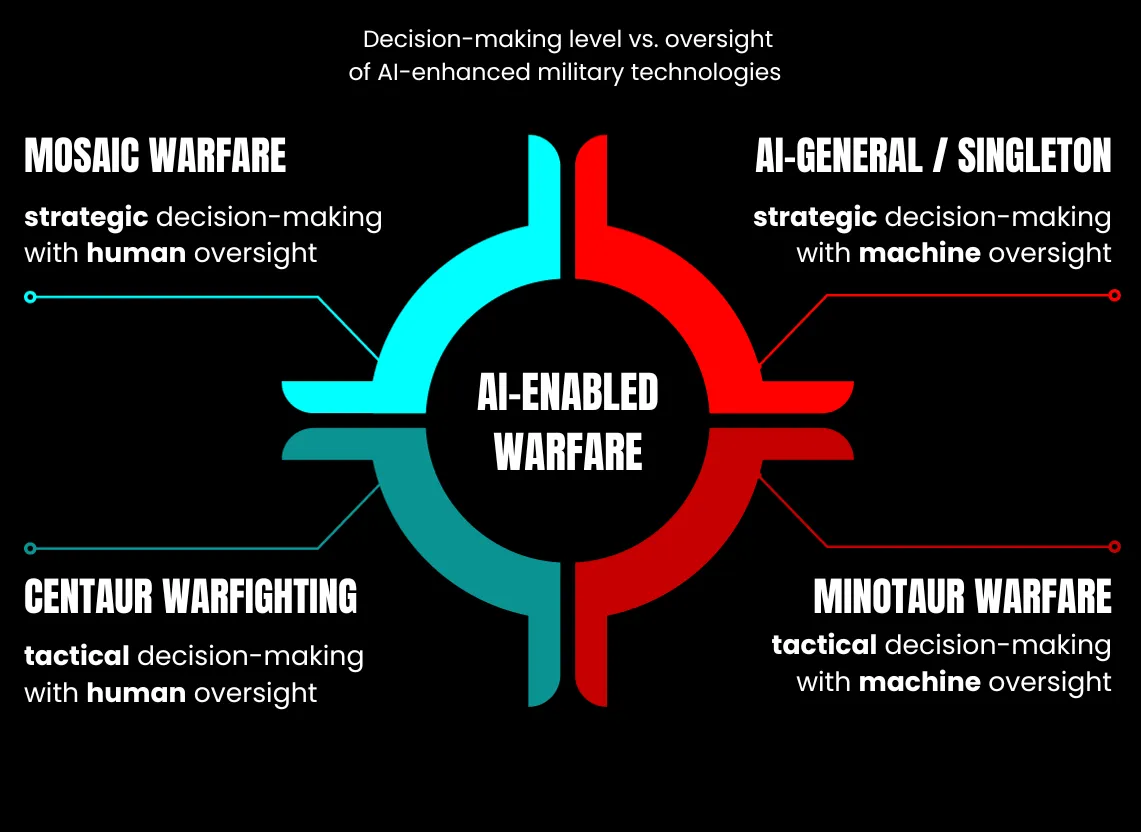

Four types of AI-enabled warfare

The adoption of AI-enhanced military technologies can vary across countries in terms of the level of decision-making (tactical or strategic) and the type of oversight (human or machine). Countries can optimize algorithms to perform tactical operations on the battlefield or to conduct strategic deliberations in support of overall war aims. Tactically, such technologies can enhance field commanders’ lethality by rapidly analyzing large quantities of data drawn from sensors distributed across the battlefield to generate targeting options faster than adversaries can. As cybersecurity expert Jon Lindsay puts it, “combat might be modeled as a game that is won by destroying more enemies while preserving more friendlies.” This advantage is achieved by significantly shortening the “sensor-to-shooter” timeline, the interval between acquiring a target and prosecuting it. The US Defense Department’s Task Force Lima and Project Maven are both examples of such AI applications.

Strategically, AI-enhanced military technologies can also help political and military leaders synchronize key objectives (ends) with a combination of warfighting approaches (ways) and finite resources (means), including materiel and personnel. New capabilities could even emerge and replace humans in future military operations, including for crafting strategic direction and national-level strategies. As one expert argues, AI has already demonstrated the potential “to engage in complex analyses and strategizing comparable to that required to wage war.”

At the same time, countries can also calibrate the type of oversight or control delegated to AI-enhanced military technologies. These technologies can be designed to allow greater human oversight, giving humans more agency over decisions. Such systems are often called semi-autonomous, meaning they remain under human control. This pattern of oversight characterizes how most AI-enhanced weapons systems, such as the General Atomics MQ-9 Reaper drone, currently operate. While the Reaper can fly on autopilot, adjusting its altitude and speed to account for changes in topography and weather, humans still make the targeting decisions.

Countries can also design AI-enhanced military technologies with less human oversight. These systems are often referred to as “killer robots” because the human is off the loop: humans exercise limited, if any, oversight, even for targeting decisions. Crossing the two decision-making levels with the two types of oversight yields four types of warfare that could emerge globally as countries adopt AI-enhanced military technologies.

First, countries could use AI-enhanced military technologies for tactical decision-making with human oversight. This defines what Paul Scharre calls “centaur warfighting,” named after a creature from Greek mythology with the upper body of a human and the lower body and legs of a horse. Centaur warfare emphasizes human control of machines for battlefield purposes, such as the destruction of a target like an enemy’s arms cache.

Second, countries could use AI-enhanced military technologies for tactical decision-making with machine oversight. This flips centaur warfare on its head, literally, evoking another mythical creature from ancient Greece—the minotaur, with the head and tail of a bull and the body of a man. “Minotaur warfare” is characterized by machine control of humans during combat and across domains, which can range from patrols of soldiers on the ground to constellations of warships on the ocean to formations of fighter jets in the air.

Third, strategic decision-making coupled with machine oversight frames an “AI-general” or “singleton” type of warfare. This approach gives AI-enhanced military technologies extraordinary latitude to shape the trajectory of a country’s warfighting, which may have serious implications for the offense-defense balance between countries during conflict. An AI-general type of warfighting could allow a country to gain and maintain advantages over adversaries in time and space that shape the overall outcome of war.

Finally, “mosaic warfare” retains human oversight of AI-enhanced military technologies but uses algorithms to optimize strategic decision-making in order to impose and exploit vulnerabilities on a peer adversary. The intent of this warfighting model, which US Marine Corps Gen. (Retired) John Allen calls “hyperwar” and scholars often refer to as algorithmic decision-support systems, is to retain overall human supervision while using algorithms to perform critical enabling tasks. These include predicting possible enemy courses of action through “real-time threat forecasting” (the mission of the Defense Department’s new Machine-Assisted Analytic Rapid-Repository System, or MARS); identifying the most feasible, acceptable, and suitable strategy (which companies such as Palantir and Scale AI are studying how to do); and tailoring key warfighting functions, such as logistics, to help militaries gain and maintain the initiative in contested operating environments characterized by extended supply lines, such as the Indo-Pacific.
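The four types above form a simple two-by-two grid: two decision-making levels crossed with two types of oversight. As a minimal sketch, that grid can be written as a lookup table keyed on the two attributes. The names `DecisionLevel`, `Oversight`, `WARFARE_TYPES`, and `warfare_type` are hypothetical, chosen only for illustration.

```python
from enum import Enum


class DecisionLevel(Enum):
    TACTICAL = "tactical"
    STRATEGIC = "strategic"


class Oversight(Enum):
    HUMAN = "human"
    MACHINE = "machine"


# Hypothetical lookup table: each (decision level, oversight) pair maps to
# one of the four types of AI-enabled warfare named in the text.
WARFARE_TYPES = {
    (DecisionLevel.TACTICAL, Oversight.HUMAN): "centaur",
    (DecisionLevel.TACTICAL, Oversight.MACHINE): "minotaur",
    (DecisionLevel.STRATEGIC, Oversight.MACHINE): "singleton",
    (DecisionLevel.STRATEGIC, Oversight.HUMAN): "mosaic",
}


def warfare_type(level: DecisionLevel, oversight: Oversight) -> str:
    """Return the warfare type for one combination of the two attributes."""
    return WARFARE_TYPES[(level, oversight)]


if __name__ == "__main__":
    # Print every cell of the 2x2 grid.
    for level in DecisionLevel:
        for oversight in Oversight:
            print(f"{level.value} + {oversight.value} oversight -> "
                  f"{warfare_type(level, oversight)}")
```

Writing the typology as a table makes the design explicit: every combination of the two attributes has exactly one label, and no type sits outside the grid.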

US officers’ attitudes toward AI-enabled warfare

To examine how military officers’ trust in AI-enhanced military technologies varies with the technologies’ decision-making level and type of oversight, I conducted a survey in October 2023 among officers assigned to the war colleges in Carlisle and Newport. The survey involved four experimental groups that varied the use of an AI-enhanced military technology in terms of decision-making (tactical or strategic) and oversight (human or machine), as well as one baseline group in which these attributes were not manipulated. After reading their randomly assigned scenarios, respondents rated their trust in and support for the capability on a scale of one (low) to five (high). I then analyzed the data using statistical methods.
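The design described above consists of one baseline group plus four treatment groups, with each respondent rating trust and support on a one-to-five scale. It can be sketched as follows. This is a minimal illustration under stated assumptions, not the study's actual procedure. The article does not say how randomization was implemented, so the sketch assumes simple independent randomization. The names `CONDITIONS`, `assign_conditions`, and `check_rating` are hypothetical.

```python
import random

# Hypothetical condition labels: one baseline group plus the four
# experimental groups from the 2x2 design (decision level x oversight).
CONDITIONS = ("baseline", "centaur", "minotaur", "singleton", "mosaic")


def assign_conditions(respondent_ids, seed=None):
    """Assign each respondent to one scenario by simple randomization.

    Assumption: each respondent independently has an equal chance of
    landing in any condition; the article does not describe the method.
    """
    rng = random.Random(seed)
    return {rid: rng.choice(CONDITIONS) for rid in respondent_ids}


def check_rating(value):
    """Validate a trust or support rating on the 1 (low) to 5 (high) scale."""
    if isinstance(value, bool) or not isinstance(value, int) or not 1 <= value <= 5:
        raise ValueError(f"rating must be an integer from 1 to 5, got {value!r}")
    return value


if __name__ == "__main__":
    groups = assign_conditions(range(10), seed=42)
    for rid, condition in groups.items():
        print(rid, condition)
```

Simple randomization can leave groups of unequal size in small samples. A real survey platform might instead use blocked or balanced assignment, which this sketch does not attempt.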

My sample is what political scientists call a convenience sample: it is not representative of the US military or of its branches, such as the US Army and Navy. Even so, it offers rare insight into how servicemembers may trust AI-enhanced military technologies and how this trust may affect the character of war.

This sample is also a hard test of my expectations about possible shifts in the future of war given the emergence of AI, since I oversampled field-grade officers: majors/lieutenant commanders, lieutenant colonels/commanders, and colonels/captains. They have years of training, are experts in targeting, and many have deployed to combat and made decisions about drones. They are also emerging senior leaders entrusted to appraise the implications of new technologies for future conflict. These characteristics imply that officers in my sample may be primed to distrust AI-enhanced military technologies more than other segments of the military do, especially junior officers, who are often referred to as “digital natives.”

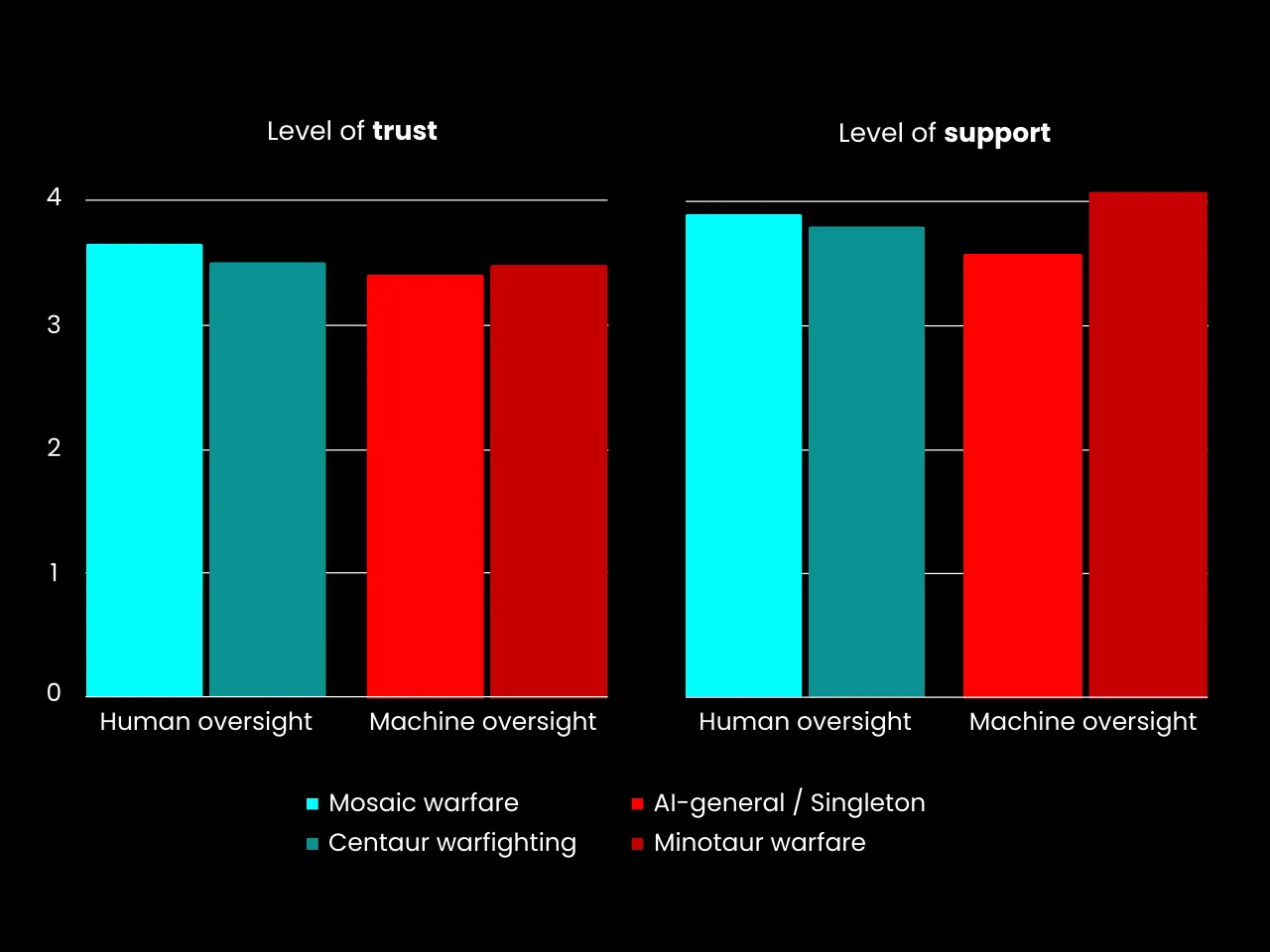

The survey reveals several key findings. First, officers’ trust in AI-enhanced military technologies varies with the decision-making level and type of oversight of these new capabilities. While officers are generally distrustful of different types of AI-enhanced weapons, they are least trusting of capabilities used for singleton warfare (strategic decision-making with machine oversight). By contrast, they show more trust in mosaic warfare (human oversight of AI-optimized strategic decision-making). This suggests that officers prefer human control of AI, whether it is used to identify nuanced patterns in enemy activity, generate military options that present an adversary with multiple dilemmas, or help sustain warfighting readiness during protracted conflict.

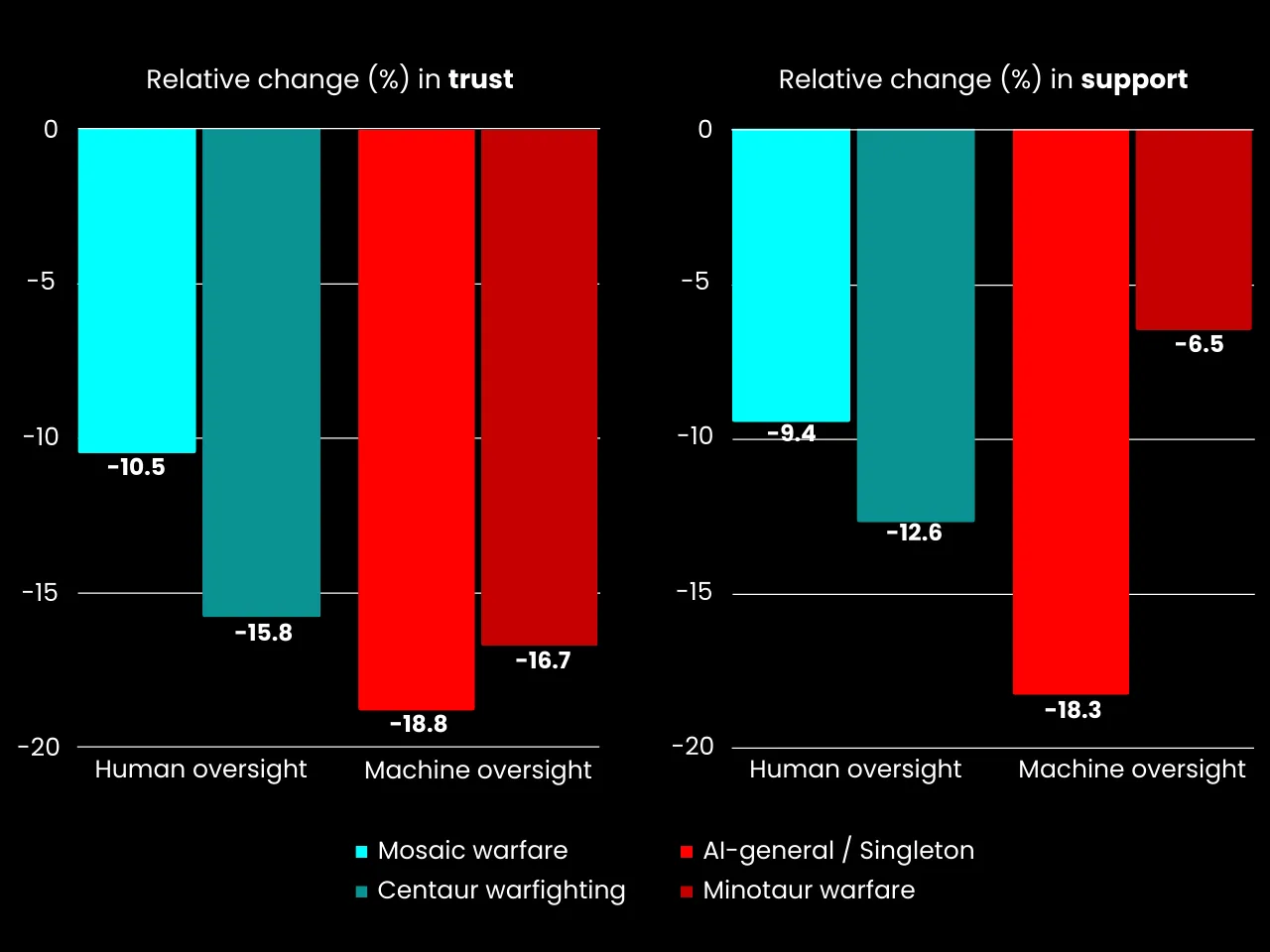

Relative to the baseline group, officers’ trust in AI-enabled military technologies declines more for singleton warfare (18.8 percent) than for mosaic warfare (10.5 percent); see Figure 1. While the differences in officers’ mean levels of trust relative to the baseline group are statistically significant for both types of AI-enhanced warfare, they are more pronounced for singleton warfare than for mosaic warfare. However, the average marginal effect of AI-enhanced military technologies on officers’ trust (that is, the average change in predicted probability for officers’ trust) is statistically significant only for singleton warfare. Overall, these results suggest that officers are less distrustful of AI-enhanced military technologies used with human oversight to aid decision-making at higher echelons.
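The article reports declines relative to the baseline group but does not spell out the formula behind the percentages. One common way to express such a figure is the percent change in a group's mean rating relative to the baseline mean. The sketch below assumes that definition, and both the function name `percent_change_vs_baseline` and the ratings in the example are hypothetical. The sketch does not reproduce the average marginal effects, which would come from a regression model the article does not describe.

```python
from statistics import mean


def percent_change_vs_baseline(treatment, baseline):
    """Percent change in a treatment group's mean rating relative to the
    baseline group's mean rating (negative values indicate a decline)."""
    base = mean(baseline)
    if base == 0:
        raise ValueError("baseline mean must be nonzero")
    return 100.0 * (mean(treatment) - base) / base


if __name__ == "__main__":
    # Hypothetical 1-5 trust ratings invented for illustration only;
    # these are NOT the survey's data.
    baseline = [3, 4, 3, 4, 3]
    treatment = [3, 3, 2, 3, 3]
    print(round(percent_change_vs_baseline(treatment, baseline), 1))  # -17.6
```

Under this definition, a reported decline of 18.8 percent would mean the treatment group's mean rating was about four-fifths of the baseline mean. Whether the study's figures were computed this way is an assumption.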

Officers’ support largely mirrors their trust. Officers show less support for AI-enhanced military technologies used for singleton warfare than the baseline group does, by virtually the same margin (18.3 percent) and with the same degree of statistical significance. Relative to the baseline group, however, officers support minotaur warfare more than other patterns of AI-enhanced warfare, with a change in support of around 6.5 percent. This suggests that while officers may be less distrustful of AI-enhanced military technologies incorporated at higher levels of decision-making and under human control, they are more supportive of AI-enhanced military technologies used for tactical-level decision-making under machine supervision. In sum, officers’ attitudes seem to reflect King’s College London professor Kenneth Payne’s argument that “warbots will make incredible combatants, but limited strategists.”

Officers’ relatively higher support for tactical-level use of AI-enhanced military technologies reveals a second key finding: their support for these technologies can be more pronounced than their trust in them. This reflects what some scholars call a “trust paradox.” Officers appear to support the adoption of novel battlefield technologies enhanced with AI, even if they do not necessarily trust them. This phenomenon relates mostly to minotaur warfare (the use of AI for tactical-level decision-making with machine supervision). It suggests that officers expect AI-enhanced military technologies to collapse an adversary’s time and space for maneuver while expanding the US military’s own, by shortening the “sensor-to-shooter” timeline that senior military leaders believe is the linchpin of defeating near-peer adversaries in future conflict.

For AI-enhanced military technologies used for tactical-level decision-making with machine oversight, shifts in officers’ support are larger than shifts in their trust (Figure 2). In addition, the difference between officers’ trust and support is statistically significant: officers support AI-enhanced military technologies used for minotaur warfare more than they trust them. The average change in the probability that officers will support AI-enhanced military technologies used for minotaur warfare is also higher than for the other three types of AI-enhanced warfare.

Combined, these results indicate a misalignment between US officers’ support for and trust in AI-enhanced military technologies. Although officers support adopting such technologies to optimize decision-making at various levels and with various degrees of oversight, they do not trust the types of warfare these emerging AI-enabled capabilities may produce. This result suggests that US officers may feel obliged to embrace projected forms of warfare that go against their own preferences and attitudes, especially the minotaur warfare that underpins emerging US Army and Navy warfighting concepts.

Other factors further explain variation in officers’ trust in AI-enhanced military technologies. Controlling for the level of decision-making and type of oversight, I find that officers’ attitudes toward these technologies can also be shaped by underlying moral, instrumental, and educational considerations.

Officers who believe that the United States has a moral obligation to use AI-enhanced military technologies abroad show greater trust in these new battlefield capabilities, as well as greater support for them. This suggests that officers’ moral beliefs about the potential benefits of AI-enhanced military technologies used abroad, such as during humanitarian assistance and disaster relief operations, may help overcome their inherent distrust of these capabilities.

In addition, officers who attach an instrumental value to AI-enhanced military technologies and experience a “fear of missing out” toward them also tend to have greater trust in these emerging capabilities. Such officers believe that other countries’ adoption of these technologies compels the United States to adopt them too, lest it be disadvantaged in a potential AI arms race. Education, by contrast, works in the opposite direction: higher education is associated with lower trust in AI-enhanced military technologies, implying that greater or more specialized knowledge raises questions about the merits and limits of AI in future war. Finally, at the intersection of these normative and instrumental considerations, I find that officers who believe that military force is necessary to maintain global order are also more supportive of AI-enhanced military technologies. Together, these results reinforce earlier research showing that officers’ worldviews shape their attitudes toward battlefield technologies and that officers can integrate different logics when assessing their trust and support for the use of force abroad.

How to better prepare officers for AI-enabled warfare

This first evidence about US military officers’ attitudes toward AI paints a more complicated picture of how emerging technologies are changing the character of war than some analysts allow. These attitudes also have implications for warfighting modernization and policy, and for officers’ professional military education, including on the governance of nuclear weapons.

First, although some US military leaders claim that “we are witnessing a seismic change in the character of war, largely driven again by technology,” the emergence of AI-enhanced military technologies in conflict may be more an evolution than a revolution. While the wars in Gaza and Ukraine suggest important changes in the way militaries fight, they also reflect key continuities. Militaries have traditionally sought to capitalize on new technologies to enhance their intelligence, protect their forces, and extend the range of their tactical and operational fire, which combine to produce a “radical asymmetry” on the battlefield. Most recently, research has shown that how countries use and constrain drones also shapes public perceptions of the legitimate (or illegitimate) use of force, a result consistent with research on emerging fully autonomous military technologies.

However, the implications of these and other capabilities for strategic outcomes in war are doubtful at best. Strategic success in war is still a function of countries’ will to sacrifice soldiers’ lives and taxpayer dollars to achieve political and military objectives that support vital national interests. Officers in my study did support AI-enhanced military technologies used for minotaur warfare more than the other types. But study participants still showed far less trust and support for new battlefield technologies overall than the hype (if not hyperbole and fear) surrounding military innovation in AI would lead one to expect. These results suggest that military leaders should temper their expectations about the paradigmatic implications of AI for future conflict. In other words, we should “prepare to be disappointed by AI.” Without such a clear-eyed perspective, US Army Lieutenant Colonel Michael Ferguson warns, “fashionable theories that transform war into a kabuki of euphemisms” can emerge and obscure the harsh realities of combat. War is a clash of wills, intensely human, and conditioned by political objectives.

Second, officers’ trust in AI-enhanced military technologies is more complex than my study can capture. As one former US Air Force colonel, now an analyst with the Joint Staff J-8 directorate, notes, it is “difficult for operators to predict with a high degree of probability how a system might actually perform against an adaptive adversary, potentially eroding trust in the system.” In another ongoing study, I find that officers’ trust in AI-enhanced military technologies can be shaped by a complex set of considerations. These include technical specifications, namely a non-lethal purpose, heightened precision, and human oversight; perceived effectiveness in terms of civilian protection, force protection, and mission accomplishment; and oversight, including domestic and especially international regulation. Indeed, one officer in this study noted that trust in AI-enhanced military technologies was based on “compliance to international laws rather than US domestic law.”

These results suggest the need for more testing of, and experimentation with, novel capabilities to align their use with servicemembers’ expectations. Policymakers and military leaders must also clarify the warfighting concepts within which the development of AI-enhanced military technologies should be encouraged; the doctrine guiding their integration in different domains, at different echelons, and for different purposes; and the policies governing their use. For this latter task, officials must explain how US policy coincides with, or diverges from, international law, as well as what norms condition the use of AI-enhanced military technologies, considering how officers, at least in the field-grade ranks, expect these capabilities to be used. To help fill this gap, the White House recently announced a US policy on the responsible military use of AI and autonomous functions and systems, the Defense Department adopted a directive governing the development and use of autonomous weapons in the US military, and the Pentagon created the Chief Digital and Artificial Intelligence Office to help enforce this directive, though this office is reportedly plagued by budgetary and personnel challenges.

Finally, military leaders should revamp professional military education to instruct officers on the merits and limits of AI, including its application in other strategic contexts such as nuclear command and control. Many initiatives across the US military already reflect this need, especially given officers’ hesitancy to partner with AI-enabled capabilities.

Operationally, “Project Ridgeway,” led by the US Army’s 18th Airborne Corps, is designed to integrate AI into the targeting process. This is matched by “Amelia” and “Loyal Wingman,” Navy and Air Force programs, respectively, designed to optimize staff processes and warfighting. Institutionally, in addition to preexisting certification courses, some analysts encourage the integration of data literacy evaluations into talent-based assessment programs, such as the US Army’s Commander Assessment Program. Educationally, the service academies and war colleges have faculty, research centers, and electives dedicated to studying the implications of AI for future war. The US Army War College recently hired a professor of data science, the US Naval Academy maintains a “Weapons, Robotics, and Control Engineering” research cluster, and the US Naval War College offers an “AI for Strategic Leaders” elective.

At the same time, wargames conducted at the US Naval War College and elsewhere suggest that cyber capabilities can encourage the automation and pre-delegation of nuclear command and control to tactical levels of command and incentivize aggressive counterforce strategies. My results point to a puzzling outcome that deserves far more testing. Taken at face value, and setting aside whether officers’ attitudes would hold for the use of nuclear weapons in war, the results raise a troubling question: Would officers actually support automating and pre-delegating nuclear command and control to tactical-level AI even if they do not trust it, while neither trusting nor supporting the use of AI to govern counterforce strategies, as my results suggest?

While this possibility may seem outlandish, contradicting a body of research on the nuclear “taboo,” crisis escalation, and sole presidential authority for the use of these weapons, Russia’s threats to use nuclear weapons in Ukraine have encouraged the US military to revisit the prospect of limited nuclear use during great power war. Despite, or because of, the frightening potential of this “back to the future” scenario, which echoes the proliferation of tactical nuclear weapons during the Cold War, US war colleges have reinvigorated education on operational readiness for a tactical nuclear exchange between countries engaged in large-scale conflict.

The extent to which these and other initiatives are effectively educating officers on AI is unclear, however. Part of the problem is that the initiatives pit competing pedagogical approaches against each other. Some programs survey data literacy and AI in a “mile wide, inch deep” approach that integrates a single lesson into one course of a broader curriculum. Others take a “narrower and deeper” approach that offers greater development opportunities, in which a handful of officers voluntarily select electives that ride on top of a broader curriculum. Still others, like the one at the US Army War College, attempt a “golden thread” approach, which embeds data literacy and AI across the courses that frame a broader instructional plan. However, this last approach forces administrators to make important tradeoffs in content and time, and it assumes in-depth faculty expertise.

Going forward, the Joint Staff J-7, the directorate responsible for coordinating training and education across the US joint force, should conceptualize professional military education as a continuum of sustained and additive enrichment in data literacy and AI instruction over time. Pre-commissioning students attending the service academies or participating in the Reserve Officers’ Training Corps should be exposed to foundational concepts about AI. Junior and mid-grade officers should build on these insights during training, deployments, and Intermediate Level Education, such as the US Army’s Command and General Staff College. Upon selection to the war colleges, officers should wrestle with the conceptual, normative, and instrumental considerations governing the use of AI in combat, which my study suggests can shape military attitudes toward novel technologies.

This end-to-end educational approach will, of course, take time and money to adopt. It is also subject to the prerogatives of different stakeholders, service cultures, and inter-service rivalries. By aligning training and education with clear and feasible learning outcomes, however, this holistic instructional model would capitalize on existing opportunities to ensure that the US military is ready and willing to adopt AI-enhanced military technologies during peacetime and future wars in ways that align with international laws and norms governing their legitimate use.

Paul Lushenko is a lieutenant colonel in the US Army, director of special operations, and a faculty instructor at the US Army War College. He is the co-editor of Drones and Global Order: Implications of Remote Warfare for International Society (Routledge, 2022) and co-author of The Legitimacy of Drone Warfare: Evaluating Public Perceptions (Routledge, 2024). He received his PhD in international relations from Cornell University.
