The Silicon Valley Way: Move fast and break…aviation safety?

By David Woods, Mike Rayo, Shawn Pruchnicki | May 29, 2025

A seemingly unending stream of incidents, close calls, and fatal accidents has challenged confidence in aviation safety. Image: ErsErg via Adobe Stock


Aviation safety has been in the news lately for all the wrong reasons, and everyone from potential passengers (which is most of us) to high-ranking government officials is looking for answers. For decades, the commercial aviation system has effectively and quietly built a safety record equaling or surpassing all others. (According to a report by the International Air Transport Association, there was one accident for every 880,000 flights last year.) This ultra-safe record required hard work and continual learning from experience to keep improving safety, even as accidents nearly disappeared.

But now a seemingly unending stream of incidents, close calls, and fatal accidents has challenged confidence in aviation safety. Chronic shortages of air traffic controllers, combined with unreliable equipment, have prompted slowdowns and even stoppages of arrivals and departures at airports. Most recently, Newark Liberty International Airport has had three communication failures in two weeks. The equipment breakdowns left controllers unable to track or communicate with aircraft for tens of seconds at a critical stage of flight. Similarly, on May 12 the Denver Air Route Traffic Control Center lost communications with aircraft when its primary and backup frequencies failed, forcing controllers to scramble as contact with at least 20 aircraft was lost for several minutes.

Before that, on January 29, a midair collision between a commercial airliner and an Army helicopter in the congested airspace around Ronald Reagan Washington National Airport killed all 67 people aboard both aircraft. Checks of aviation incident reporting systems revealed that the collision had been preceded by many close calls, none of which had produced changes to improve safety.

Even after the fatal accident, near misses have continued to occur as helicopter traffic disrupts commercial traffic attempting to land, forcing go-arounds. And before that, a door plug blew out mid-flight on an Alaska Airlines flight, startling passengers with a four-foot hole in the fuselage of their Boeing aircraft. Only lady luck prevented any fatalities.

The magnitude and frequency of these and other breakdowns in aviation safety have stimulated a search for answers ranging from small fixes to extreme makeovers. The industry and the Federal Aviation Administration (FAA) have always worked to improve infrastructure and use new technology, but the breakdowns highlight that progress has not been sufficient. The FAA has now announced plans to invest billions to overhaul the air traffic system and infrastructure over the next several years, and officials look to Silicon Valley successes and methods “to plug in to help upgrade our aviation system.”

It seems fair to suggest that aviation’s current troubles stem from the slow adoption of new technological capabilities. It would then seem obvious to apply Silicon Valley’s methods for rapid change to aviation, using software and AI algorithms to replace legacy technologies, automation, and people. After all, Big Tech has successfully developed new technologies and innovations that have dramatically changed virtually every area of society.

The Silicon Valley Way is captured in the mantra “move fast and break things.” However, rapid change and increasingly sophisticated technologies can create more or different risks within the layers of interconnected systems and people, which must work together seamlessly under varying conditions to make flying safe for the millions who board planes every day. At its core, the move-fast-and-break-things strategy asserts that its mistakes are justifiable no matter how big they are.

For Big Tech, mistakes produce financial losses that are more than offset by the gains that come from being first to introduce new capabilities, like enhanced smartphones or virtual reality headsets for gaming enthusiasts. But this strategy is at odds with safety-critical, complex worlds like aviation.

Aviation became ultra-safe through the methods of proactive safety in systems engineering. The mantra of proactive safety is “create foresight about the changing shape of risk before anyone is harmed.” The contrast with the Silicon Valley Way is stark. Moving fast emphasizes building and deploying quickly. Safety and systems engineering, by contrast, urges checking assumptions before changes go into effect and catching and addressing mistakes before their consequences become devastating. Systems engineering monitors for shifting or missed vulnerabilities and changes course before those vulnerabilities become costly accidents.

The proactive safety perspective places aviation’s current trouble in a different light: Have all parties in the aviation industry taken its hard-won record of safety excellence for granted? Are short-term economic pressures, which lead to symptoms such as unreliable equipment and shortages of air traffic controllers, undermining advances in proactive safety?

Proactive safety in systems engineering and operations is a thorough, tried-and-tested method in aviation, but one that appears to be moving too slowly or to have been degraded by short-term economic factors. The Silicon Valley Way moves fast but incurs high-consequence risks. Are we trapped? Advances in how to build resilient systems indicate that it is not a choice of one or the other. There is a path that can accomplish both.

A trade-off trap we’ve seen before. Until recently, Boeing had a stellar record of deploying highly reliable systems. Institutional leaders believed that their designs were safe and that they had risks under control. However, engineers within the organization were operating under intense pressure to move faster to meet schedules, cut costs, and introduce new capabilities to maximize revenue. Then twin accidents happened. In late 2018 and early 2019, two Boeing 737 MAX aircraft crashed shortly after takeoff, killing 346 people and costing the company more than $30 billion. An investigation of the crashes by the House Transportation and Infrastructure Committee documented a “disturbing pattern of technical miscalculations and troubling management misjudgments made by Boeing. It also illuminates numerous oversight lapses and accountability gaps by the FAA that played a significant role in the 737 MAX crashes.”

Post-accident independent investigations revealed that warning signs of impending trouble were discounted and ignored because of competitive pressures to move fast and avoid additional costs or delays in the design and certification process. Boeing had to add new automation to compensate for the effects of more powerful and efficient engines but assumed the automation did not affect safety. The company did not test effectively for new failure modes, such as how a sensor failure could lead to automated, faulty control of the aircraft that flight crews would find difficult to correct.

This pattern of discounting warning signs under production or schedule pressure is not unique to Boeing. It also appeared in the buildup to both the Challenger and Columbia space shuttle accidents. Evidence showed the systems were encountering situations beyond the accepted engineering and performance limits of normal, reliable, and robust operations. But instead of stepping back and investigating, the organizations discounted evidence of threats to safety and pressed on under schedule pressure. When the only choice seemed to be thoroughness or speed, they chose speed.

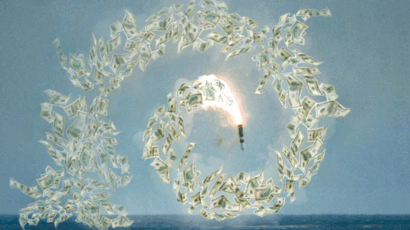

A tale of two mantras, in two acts. The recent spate of SpaceX explosions provides a unique look at how that company handles the choice between speed and thoroughness. SpaceX’s Starship program is an ambitious attempt to build a large, reusable launch vehicle that can carry heavy payloads into space, thereby lowering costs. Consistent with the move-fast mantra, SpaceX management views explosions as symptoms of success: valuable, even required, to sustain a fast pace of innovation. SpaceX operates on a philosophy of “successful failures” that are necessary for learning. The point is to make mistakes and then learn from them. The philosophy stands in stark contrast to a comment attributed to Otto von Bismarck, who united various states into the modern country of Germany in the late 19th century. When asked about learning from his mistakes, he is reputed to have replied, “I prefer to learn from others’ mistakes.”

Act I: prologue and burnt metal. On April 20, 2023, during a SpaceX Starship launch, the energy released at liftoff tore apart the launchpad and damaged the rocket, requiring its destruction. The launchpad explosion scattered a large debris field that reached a nearby town outside the evacuation zone and started a fire that burned 3.5 acres of a state park. The rocket was destroyed before it reached its planned altitude, an outcome SpaceX referred to as a “rapid unscheduled disassembly.” Ironically, those in charge at SpaceX learned little about safe launches that wasn’t already known. They already understood the safeguards required to deflect uncontrolled energy release but dismissed them because of schedule pressure and the slow pace of meeting new testing requirements. The cost of the accident was large but justifiable, measured in the metal, advanced technologies, and natural ecosystem that were destroyed.

Act II: loud explosions, quiet adaptation. On March 6, 2025, another SpaceX rocket exploded minutes after launching from Texas. In addition to the damage the explosion created in and around the launchpad, the large debris field in the commercial airspace around the launch site created other disruptions. Air traffic controllers had to recognize the new risk and quickly move air traffic away from possible debris in both SpaceX rocket explosions. Again, the damage and cost were measured in burnt metal and advanced technologies and not in human terms, but only because of the actions of an adaptable air traffic control system.

In both cases, the rocket program attempted to do new things and push boundaries: larger systems, higher forces to tame, aspirations for reusable systems. Doing things differently produces unexpected effects, so a program that does so must be prepared to discover unanticipated interactions and risks. In both cases, SpaceX’s systems were unable to cope. In both cases, the air traffic controllers, who have no explicit training in dealing with debris from rocket explosions, managed the resulting challenges with minimal impact on pilots, passengers, and the public. Because they were expert professionals, the air traffic controllers quietly prevented any further repercussions.

Experienced people are a critical ingredient of meaningful proactive safety. They are vital to navigating uncertainty in degraded or exceptional air traffic management operations, well beyond what automation via any family of algorithms can accomplish. However, their quiet effectiveness at keeping systems calm is often used as a justification for removing them. They, too, are a current target of the move-fast-and-break-things camp.

Earlier this year, the administration fired 400 Federal Aviation Administration employees. “All of these people are part of the safety net,” said David Spero, president of the Professional Aviation Safety Specialists union, as reported by the Associated Press. “The more of them that are not there, the more difficult it becomes to do the actual safety oversight.”

Charting a route to escape the trade-off trap. The true way forward in technology development (in this case, an air traffic control system that moves airplanes on schedule and keeps people safe) is not either-or but both-and. It is not a relentless march toward speed of development but the ability to determine effectively when to favor thoroughness and when to favor speed. Fortunately, there are systems architectures that can be both fast and thorough, each at the right time. The field of resilience engineering is working out how to design these more adaptive systems for high-risk worlds. Its methods prioritize the capability to monitor for signals of trouble ahead as development and operations proceed, so that those designing a system know when to shift the emphasis between speed and thoroughness. These systems also invest in the ability to shift fluidly when signs indicate a course change is needed.

The escape route is illustrated practically in a development at NASA in the aftermath of the Columbia space shuttle accident. NASA developed a new cross-cutting organization called the NASA Engineering and Safety Center (NESC). The NESC carries out a learning function as it tackles problems that arise at the intersection of normal organizational groups. It is able to learn about emergent systems issues that cut across the usual engineering and operational areas. The learning role tends to be proactive, diagnosing issues before serious incidents arise. The NESC is able to facilitate substantive improvements that exceed the scope of any one group within the organization. Also noteworthy, the NESC’s activities tend to produce results that enhance both safety and effectiveness at the same time.

Implementing resilience engineering practices requires organizations to recognize basic facts about complexity and adaptation. Adaptive organizations develop the ability to shift priorities, recognizing that surprises will inevitably occur and that responding late to signals of surprise incurs costs at least an order of magnitude higher than those weighed in normal economic cost/benefit decisions. Organizational adaptability requires sustained investment in groups like NASA’s NESC that facilitate learning and action across organizational boundaries, along with a willingness at the leadership level to resist the inevitable pressure to value speed and short-term economics over thoroughness.

In looking to the future of aviation safety in the face of the pressure to move fast, those in charge of the national air transport system should focus on the following four-point plan:

First, they need to understand that moving fast and breaking things is a risk-seeking methodology; strategically, it is willing to accept large financial losses and, if applied to worlds like aviation, harm to people. But experts can find a balance, sacrificing some speed to reduce serious risks.

Second, the industry needs to heed the alarm bells that have already gone off and recognize that safety must take precedence over short-term economic factors, reinvigorating past advances in proactive safety and developing new ones. This requires coordination across multiple jurisdictions and national leadership.

Third, all layers of management need to invest in monitoring systems that tell them when it is safe to go faster and when uncertainty and surprise are looming. They should also emphasize thoroughness. These monitoring systems will cost money to build in the near-term but will pay dividends in the long-term.

Finally, a coordinated set of evaluation methods needs to be adopted, beginning early and continuing through the development, deployment, and post-deployment phases of monitoring systems. These methods need to assess how new technology expands the ability of experienced people to handle disruptions, anomalies, and surprises that inevitably occur in complex real-world systems. Maximizing deployment of the latest automation technology or satellite communications alone is insufficient. Investing in the joint performance of human operators and technology (and testing for effective joint performance) will result in the continuation and expansion of the robust and resilient air transportation system we have come to expect.

Reinvigorating aviation safety should be a high priority for all stakeholders. The recent incidents highlight that past progress based on techniques for proactive safety has stalled. But if the response to this lack of safety progress is adopting the Silicon Valley Way—to move fast and break things—while upgrading aviation infrastructure technology, the first thing to break may be the proactive safety approach that helped to make aviation ultra-safe in the first place.


