Private-sector research could pose a pandemic risk. Here’s what to do about it

By Gerald L. Epstein | February 16, 2023

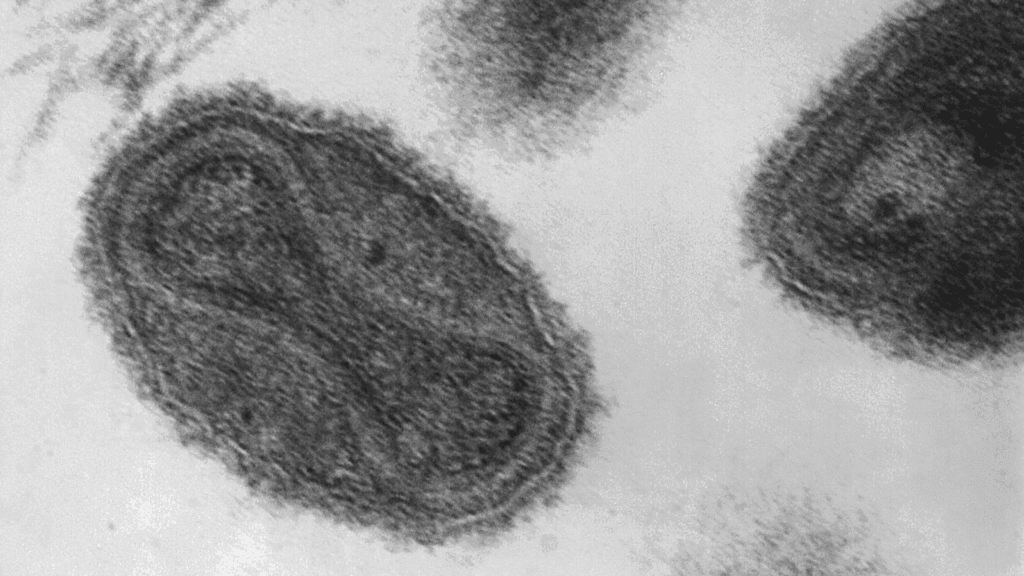

An image of the variola virus, which causes smallpox. Researchers working with independent funds resurrected an extinct relative of the virus, the horsepox virus, in a study published in 2018.


In 2018, Canadian academics with pharmaceutical industry funding made a stunning announcement. They had synthesized horsepox, a pathogen that no longer exists in nature and that is closely related to the smallpox virus, variola. The controversial product was meant as a vaccine candidate—intended to infect humans and confer immunity without being transmissible or pathogenic—but the biotechnology involved in its production could readily have been applied to create a pathogen with the potential to start a deadly pandemic.

As it was, the research raised questions about whether it lowered the bar for bad actors to synthesize variola as a biological weapon. But the study also highlighted a broader, perhaps less appreciated risk. By synthesizing a close cousin of a pandemic killer, the Canadian, pharma-backed researchers showed that there is funding, and capability, outside the government to create and manipulate potential pandemic pathogens. In the United States, researchers with nongovernmental funding, unlike their government-funded counterparts, can perform experiments with so-called “enhanced potential pandemic pathogens” largely free from regulation. These are germs that not only might trigger a pandemic but that researchers have made more virulent or transmissible in a lab. With a few exceptions, such as the original SARS virus (SARS-CoV-1), no laws or regulations constrain the ability of independently funded companies to create or use lab-created pathogens with pandemic potential.

Observers have long seen the need for broadening the reach of US government policies that subject enhanced potential pandemic pathogens research to extra scrutiny; the risks that such research might pose do not disappear merely because no government funds are involved. Indeed, the US policy that currently creates an oversight framework for government-funded activity in this area explicitly sets out a marker that it be revised in some future version to cover “all relevant research activities, regardless of the funding source.” But doing so is not straightforward. From new laws to incentives for voluntarily following government policies, there is a range of possibilities for achieving this goal. As the amount of privately funded activity in the US bioeconomy increases, extending oversight in this way will only become more important.

The light touch. The primary federal law that controls the possession of pathogens that could pose a major public health threat has its roots in the odd tale of an Ohio septic tank inspector in the mid-1990s.

Larry Wayne Harris, a purported member of the Aryan Nations white supremacist group, ordered freeze-dried plague bacteria from a Maryland company that maintained stocks for research purposes. Harris was arrested and charged with mail fraud for claiming the bacteria were for his employer, but the fact that he was able to order them at all highlighted a weakness in federal biosecurity regulations. Congress subsequently passed a 1996 law requiring the Health and Human Services Department to regulate the transfer of so-called “select agents”—pathogens such as anthrax that could threaten public health. After the October 2001 anthrax attacks, the select agent program was expanded to control possession and use of these pathogens, in addition to their transfer.

These select-agent rules notwithstanding, the US government has traditionally been wary of legislating controls over scientific research. A major scientific debate in the mid-1970s illustrates this tendency well.

At the dawn of the recombinant DNA era, when societal fears over genetic engineering were rising, prominent scientists met in a now-famous conference in Asilomar, California, and developed voluntary guidelines for their research. The National Institutes of Health (NIH) incorporated guidelines based on these recommendations into the terms and conditions of its research grants, updating them as the science advanced.

Although Congress faced pressure to do something, NIH worked hard to head off legislation, which it felt would overly constrain research practices and stunt innovation; also, the legislation would set up restraints that could not be modified without subsequent legislation.

Congress has passed laws regulating the use of human subjects (which apply only to federally funded research) and the use of research animals. With these exceptions, however, along with the select agent legislation discussed above, the light touch of legislation governing life sciences research has remained in place ever since, a precedent that would need to be reversed if new legislation were to be enacted governing private-sector research involving enhanced potential pandemic pathogens. Alternatively, or in addition, several other approaches to encouraging greater oversight could be adopted.

New legislation mandating oversight. The most direct way to extend oversight of enhanced potential pandemic pathogen research to cover privately funded work is through new legislation. Only Congress has the power to mandate restrictions or oversight approaches that would apply to any research within the United States, irrespective of funding source.

The select agent program provides a precedent for statutory control over life science research. However, whether Congress would act again is unknown. Given that an accidental laboratory release is one possible origin for the COVID-19 pandemic, the issue of research involving potential pandemic pathogens, like other aspects of the pandemic, is highly politicized. The heat that US government-funded research on that topic has generated may make it even less likely that Congress could take a nuanced approach to applying biosecurity rules to the private sector.

The Health and Human Services Department is currently the only government agency that has a review process for overseeing enhanced potential pandemic pathogen research. (All other government agencies remain under a 2014 funding pause on research that would enhance the pathogenicity or transmissibility of a group of pandemic-capable viruses.) The agency’s review framework sets out a number of principles that must be satisfied and establishes an extra layer of review for all proposed projects that might create, transfer, or use enhanced potential pandemic pathogens. The most straightforward option for mitigating private-sector pandemic risks would be for Congress to pass a law requiring that any similar privately funded activities undergo an equivalent government review.

Utilizing existing legislation. Far simpler than passing new legislation would be for the Executive Branch to take action to bring enhanced potential pandemic pathogen research under existing law. One way to do so is relatively straightforward, although not without challenges. A second approach is more speculative.

The health and agriculture secretaries currently have the authority to place additional biological agents or toxins under the purview of the select agent program. Everyone with access to agents that have been so designated must undergo review by the FBI; all institutions possessing such agents and using them in research must be registered with the government and adhere to specified safety and security measures; and all incidents of theft, loss, or accidental environmental release of these agents must be reported.

Designating enhanced potential pandemic pathogens as select agents would bring them into this legally binding regime. Doing so might be challenging, however; unlike other select agents, these lab creations are defined by their attributes, not their names. It is their presumed transmissibility and virulence that would subject them to greater scrutiny. Even informed experts might disagree on whether a particular pathogen is an enhanced potential pandemic pathogen, or whether a proposed experiment is likely to generate one. Such subjectivity could pose legal problems because of the Constitution’s due process requirements, which require that regulated activities be specified clearly enough for a reasonable person to understand what is permitted and what is prohibited. One way around this difficulty would be to require anyone uncertain about the status of a pathogen—including one yet to be created—to seek clarification from the government before proceeding. However, the select agent program currently has no such provision.

One notable feature of the select agent program is that the relevant cabinet secretaries can designate experiments using select agents as “restricted,” requiring explicit government permission to perform them. For these experiments, the secretaries can specify additional requirements. One current example of a restricted experiment is conferring antibiotic or antiviral resistance to a select agent, if such resistance could compromise the ability to control the disease that agent is responsible for. If enhanced potential pandemic pathogens were listed as select agents and their creation or use were designated as restricted experiments, the existing government review regime could be made a requirement, even for privately funded research.

Another law that could arguably be utilized to regulate enhanced potential pandemic pathogen research is the Biological Weapons Antiterrorism Act of 1989, which implements the 1975 Biological Weapons Convention banning biological weapons. However, this route is much more problematic.

This law criminalizes the possession of any biological agent “of a type or in a quantity that, under the circumstances, is not reasonably justified by a prophylactic, protective, bona fide research, or other peaceful purpose.” This wording is identical to language in one of the convention’s prohibitions with the exception of “bona fide research,” which does not appear in the treaty text; it was added to the US law (but not defined) after lobbying by the life science research community. Although Congress has not specifically authorized it to do so, one can imagine the Justice Department issuing guidance specifying that the conduct of enhanced potential pandemic pathogen research without authorization of an appropriate government agency such as the Department of Health and Human Services would not be considered “bona fide research” and would therefore not be considered as having “prophylactic, protective, or other peaceful purpose.” That would remove its legal justification.

Indeed, the Justice Department has in the past issued guidance that clarifies permissible life science activities. A provision of a 2004 law that was specifically intended to criminalize unauthorized possession of the virus responsible for smallpox could be read as prohibiting the possession of smallpox vaccine as well. To rectify this misstep, the Justice Department issued a 2008 opinion that made it clear that it did not see this prohibition as extending to smallpox vaccine.

However, these two cases are not really equivalent.

The smallpox opinion narrowed the set of activities eligible for prosecution, and it did so in a very specific way. A hypothetical opinion on “bona fide research” would expand the Justice Department’s prosecutorial authority in a way that could be arbitrarily generalized, essentially allowing the agency to criminalize activities at its own discretion rather than that of Congress. It is very unlikely that the Justice Department would be willing to pursue this route in the absence of specific legislation and without establishing a formal regulatory rule-making process that would give notice to, and accept comments from, affected parties.

Whatever the mechanism, one important additional reason to have statutory control over potentially dangerous research is to facilitate investigations. In the absence of a potential criminal violation, law enforcement authorities are powerless to investigate anyone who might be suspected of conducting such research for nefarious purposes. A statute that requires research with enhanced potential pandemic pathogens only be done with government permission gives law enforcement the ability to investigate those who might be conducting such research outside of appropriate oversight.

Soft law: incentivizing oversight. Given the challenges of creating a new law or repurposing an existing one to regulate enhanced potential pandemic pathogen research, it’s worth thinking about other methods to create guard rails around the riskiest private-sector research. In lieu of “hard law” provisions, a number of “soft law” mechanisms can provide incentives for research institutions to voluntarily accept policies like the government’s enhanced potential pandemic pathogen policy. While that policy doesn’t allow non-government funded researchers to voluntarily submit projects for review, other federal oversight policies for life science research do. For example, current policy to deal with the threat that certain life science research might produce materials or information that could be misused for harm, which strictly applies only to US government-funded institutions, explicitly encourages and allows for the voluntary review of non-federally funded research as well.

Along the same lines, the NIH’s Guidelines for Research Involving Recombinant or Synthetic Nucleic Acid Molecules formally apply only to institutions receiving US government funding for life science research; in 1980, NIH added a section on voluntary compliance. Even though there was no requirement to do so, many private companies conducting recombinant DNA research sought NIH approval to demonstrate that they were acting responsibly. Under the new provisions, they registered their experiments with NIH, used only NIH-certified laboratory procedures, and even solicited permission from NIH to conduct the same types of experiments that federally funded institutions were required to get NIH approval for.

The framework for overseeing enhanced potential pandemic pathogen research currently makes no provision for voluntary review of non-federally funded activity, but it could.

Oversight as a condition of service. Regardless of the funding source of the research, scientific publishers will generally not accept manuscripts reporting on research involving human subjects if that research has not been approved by an institutional review board. These boards minimize the risk to research subjects to the extent possible, validate that the potential benefits of the research warrant the remaining risks, and ensure that research subjects are aware of those risks and voluntarily accept them. Publishers do not impose a similar requirement that enhanced potential pandemic pathogen research go through a review process analogous to the government’s reviews, but they could.

By law, the Food and Drug Administration requires all applicants for approval of drugs or devices to comply with federal human research subject protections as well. However, this legal requirement is specific to human research subjects and could not likely be generalized to require federal review of enhanced potential pandemic pathogen research. Moreover, it is not clear that any such research would be relevant to supporting the Food and Drug Administration approval process.

Norms. As was the case in the early days of genetic engineering, non-governmental entities performing controversial research such as that involving enhanced potential pandemic pathogens may wish to voluntarily place their work under the same oversight that the US government applies to work that it funds. An overt public campaign by industry and scientific leaders, or just the gradual buildup of expectations, could create a norm that responsible research institutions should apply this type of guidance. However, there would be no legal consequences for not doing so, nor would such an approach constrain anyone who was secretly pursuing this technology for illicit purposes.

One way to promote the development of norms that exceed federal requirements is through incentives. As an example, federal regulations regarding the use of research animals do not require third-party accreditation of animal use and care, but many institutions—including ones that are not federally regulated—seek it anyway. Such accreditation can be a way to demonstrate their willingness to exceed legal requirements, and it can open up sources of funding that are not available to unaccredited labs. (Of course, this particular incentive would have little leverage over self-financed activity.)

Tort Law and Liability. Whether or not a federal law or policy addresses some aspect of potentially risky research, anyone responsible for inflicting damage on another can be held responsible for providing compensation. Any laboratory working with potentially dangerous pathogens implicitly accepts the possibility that should such a pathogen escape laboratory containment and cause harm in the surrounding community, the laboratory could be legally liable. (Harm to laboratory workers would generally be addressed by the workers’ compensation system, which is a “no-fault” process.)

To defend against these claims, a research lab would have to argue that it took reasonable care to avoid accidental releases, and that it implemented “reasonably prudent” protective measures. Such “reasonably prudent” measures are often, but not exclusively, defined by the generally accepted standard of practice protecting against such hazards. In the case of a biological research laboratory, this might mean adhering to guidelines such as the CDC/NIH guidance Biosafety in Microbiological and Biomedical Laboratories. However, a 1932 court case ruled that the use of generally accepted practices is not necessarily a sufficient defense if additional measures that could have prevented the harm were available but not used.

Voluntary adherence to the government’s framework for enhanced potential pandemic pathogen research might demonstrate an additional level of prudence, were a laboratory to be accused of responsibility for an accident. Independent of any particular biosafety or biosecurity measures that might have been applied, an accident involving research that would not likely have been approved at all under the government’s framework could leave a laboratory in a vulnerable situation.

Legislation can establish “strict liability” for some hazards, eliminating the defense that the institution acted “reasonably prudently” and assigning it responsibility for any harm that can be traced to it, regardless of the actual mechanism of harm or utilization of protective measures. For example, in the majority of states, dog owners face strict liability for injuries caused by their dogs. Given the magnitude of potential harm, establishing strict liability for potentially triggering a pandemic would likely end enhanced potential pandemic pathogen research by any institution that was not willing to bet its continued existence on the success of its laboratory containment protocols.

To protect themselves from liability lawsuits in the event of damages that can be traced back to their research, privately funded institutions may not only want to follow best practices, but also purchase insurance that would cover any assessed damages. Even large institutions that “self-insure,” meaning that they are willing to assume the possible risk of a large payout to avoid the certain expense of purchasing a liability policy, typically have excess insurance for those claims that would exhaust even their ability to pay. (Insurance companies themselves have reinsurance agreements with other insurance companies to guard against the possibility that they may be hit with a barrage of claims that would exceed their own available resources.)

If insurers were willing to offer liability coverage to institutions researching enhanced potential pandemic pathogens, they would have the incentive and the ability to require those institutions to follow the government’s review and oversight policies as a condition of coverage. It is worth noting that the growth of the commercial nuclear power industry, another human endeavor that offers the possibility of inducing widespread harm, was only made possible in the United States by the Price-Anderson Act, which caps the liability of any individual nuclear facility and creates an industry-wide mechanism to cover damages exceeding that limit, up to a point. Likewise, it would be impossible for insurers to cover losses as large as those that could be attributed to a pandemic; COVID-19’s costs to society have been estimated at tens of trillions of dollars.

With the growth of the bioeconomy and increasing amounts of privately funded life sciences research, restricting biosecurity policy only to government-funded institutions creates an ever-growing gap. Even though research with enhanced potential pandemic pathogens constitutes an extremely small fraction of the overall life science and biotechnology enterprise—and the fraction of that work done with private funding even smaller—the potential global consequences of such work make it increasingly important to develop governance approaches that go beyond attaching strings to US government dollars. Closing this gap within the United States is not sufficient, given the global extent of the life science enterprise and the global consequences of any lab-caused pandemic—but it is a necessary start.

Acknowledgments and Disclaimer: I thank David Gillum, Kimberly Winter, Wendy Hall, and others for helpful review and discussions; any remaining errors, of course, are mine alone. In particular, I thank Dr. Hall for the proposal to utilize a Justice Department interpretation of the Biological Weapons Antiterrorism Act as a control mechanism. That approach does not necessarily represent the position of her employer and was developed in the context of exploring a wide range of possibilities for governing potentially threatening biological activity, without pre-judging their efficacy or desirability. The views expressed in this paper are mine and are not an official policy or position of the National Defense University, the Department of Defense, or the US government.


Keywords: COVID-19, gain-of-function research

Topics: Biosecurity