Alex Wellerstein pulls back the curtain on nuclear secrecy

By Susan D’Agostino | April 26, 2021

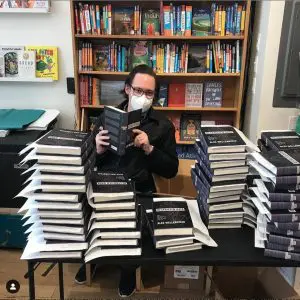

Alex Wellerstein leans against an Mk-17 hydrogen bomb casing at the National Museum of Nuclear Science and History in Albuquerque, New Mexico. Permission: Alex Wellerstein.

Historian Alex Wellerstein has thought about nuclear secrecy for a long time. He began writing his book, Restricted Data: The History of Nuclear Secrecy in the United States, when George W. Bush was president. He continued writing through the Obama and Trump administrations and released it during Biden’s first 100 days. Even so, the result seems current. US nuclear secrecy is a timeless topic, and Restricted Data informs the present as much as the past.

“Restricted data” is a legal term relating to information about nuclear weapons, fissile material, and nuclear energy in the United States. The idea: The existential threat to humanity posed by the bomb is so singular that attempts to keep it secret require a special legal construction. But nuclear secrecy is more than legalese. It is “a bundle of many different ideas, desires, fears, hopes, activities, and institutional relationships that have changed over time, at times dramatically,” Wellerstein writes.

American democracy and secrecy were never natural bedfellows. Neither were secrecy and scientists who value open discussions. The history of US nuclear secrecy is messy and fraught—all of which makes for delicious, if at times disturbing, reading.

I recently caught up with Wellerstein to talk about nuclear espionage, security theater, and even an occasion in the 1950s when the Bulletin of the Atomic Scientists kept a nuclear secret. What follows is an edited and condensed transcript of our conversation.

Susan D’Agostino: You opted not to seek special clearance to research your book. Why did you make that decision?

Alex Wellerstein: Well, God knows they probably wouldn’t have given me a clearance because I talk to too many people. But I wouldn’t have wanted one. Clearances give access to certain types of information but also come with considerable constraints. For me, there’s no inherent value in knowing something without being able to share it.

Susan D’Agostino: Did your lack of a clearance hinder your research?

Alex Wellerstein: You get real used to looking at deleted spots when you do any kind of work around nuclear weapons. And you get really used to your Freedom of Information Act [FOIA] requests being denied. With nukes there’s always going to be stuff deleted. But I was able to get some previously classified information through FOIA. There’s so much documentation out there that the problem was never not having access. I was drowning in paperwork for most of this book.

There are two reasons things might be still secret. One is it contains information that some classification guide out there says shouldn’t be released. And it’s going to be the judgment of whoever’s redacting it not to release it, based on the guide. Occasionally the things I want to know and the things that the censor cares about are the same, and then I have to work around that edge.

The other reason that it might be still classified is because nobody has taken the time to declassify it. This is a backlog problem. Only some small fraction of classified records have been reviewed for release. When I’m filing a FOIA request, I’m not expecting them to give me anything that would still be secret by any definition, but I’m expecting them to give me some things that nobody has bothered to request before and the agencies haven’t processed before. So, I did sometimes find new things this way.

Susan D’Agostino: In the case where the information you requested remained classified, how did you work around that edge?

Alex Wellerstein: Occasionally, they screw up the redaction, which gives you a glimpse behind the curtain. In one case, they sent me two copies of the same classification guide in response to my FOIA request. But two different people reviewed these two identical copies, and they each redacted slightly differently. And if I combined their two versions, I sometimes had the full thing.

You learn that most of what they’re redacting is really boring. It’s not that they’re redacting, “Oh, we did some terrible thing.” It’s that they’re redacting that the size of this nozzle is 0.5 millimeters, and some other guy redacts the thing that says, “the size of this nozzle could also be 0.6 millimeters.” When you combine them together, you get the full story. But it’s usually not all that interesting a story.

I tried to understand how secrecy functions from the perspective of the person within the system—as opposed to how it looks on the outside, where it can look arbitrary, capricious, and foolish. On the inside, they’re given this impossible task of interpreting guidelines and then marking things up with absolute perfection. Their task is extremely technical. You can see the logic behind that, but it’s easy to say, “I don’t think the sum total of all of these tiny redactions is going to have much of an effect on proliferation one way or the other.”

Susan D’Agostino: Right, this illustrates your point that nuclear secrecy is really a political problem rather than a technical problem. Does the technical side not play a role? For example, you tell the story in your book of Klaus Fuchs, whose espionage challenged the notion that the Manhattan Project was the war’s best kept secret.

Alex Wellerstein: There’s value in some kinds of explicit information of the sort that we call secrets. In the case of Fuchs, [the Soviets] didn’t take his data and say, “great, now we have the blueprint.” But they did use it to coordinate their investigations a bit. They used it as something to check against, and they used it as a goal to reach. It’s not non-use. But it’s not the use that most people assume.

Explicit information—information you can write down—by itself is rarely sufficient for these kinds of technologies. There are technologies where explicit information directly translates into use. For example, the written code of a computer virus is the final technology. There’s no distinction. But with most technologies, you need to translate the information into some sort of lived practice. If I gave you some form of plutonium and told you that you could use chemistry to turn it into this other form of plutonium, you’d need certain skills for this knowledge to be useful. If you did that to me, I’d probably kill myself in the process because I haven’t taken chemistry since high school. With nuclear weapons, you need to be able to marry the technical information with a huge infrastructural material development.

That isn’t saying the secrets are worthless, but it is saying that they’re probably much lower value than our system believes them to be. As a consequence, we might question whether the size, scope, and scale of the nuclear secrecy system we have is really worth that trade off.

Among states that have proliferated, or even the ones that have attempted proliferation, neither their successes nor their failures can usually be attributed to access to or lack of information that can be written down in an explicit form. You can attribute failure to politics, high expense, resource cost, treaties, allies, enemies, and saboteurs. But secrecy doesn’t seem to be the thing that stops people or countries from getting nuclear weapons, or that enables them to.

Susan D’Agostino: So, is the US approach to nuclear secrecy a failure?

Alex Wellerstein: Let’s imagine the most charitable pro-secrecy argument we can have. They would say, “look, maybe the secrecy isn’t going to stop proliferation of some sort, but maybe it will slow things down.” If the lack of information adds any expense or extra time to a nation acquiring a nuclear weapon, and buys more time for diplomacy, then maybe it’s worth it.

I don’t think that’s a crazy argument. It isn’t easy to establish evidence for this argument, but it doesn’t mean that it’s not true. There’re plenty of things that we have a hard time establishing evidence for in the nuclear world, but nonetheless seem to be worth pursuing.

Susan D’Agostino: Still, does US nuclear secrecy need reform?

Alex Wellerstein: People on the outside tend to imagine that if they were suddenly in a position to enact new policy, they would immediately change everything. Imagine somebody comes to you and says, “look, here’s some technical fact that’s currently secret that we could release tomorrow.” What would the benefit be? What would the possible costs be? In most cases, the benefit isn’t going to be some huge, “oh, if we release this fact then we’ll be rolling in wealth and happiness.” It’s going to be, “well, it might improve some work on solid state physics that’s being done.” That could be interesting, but who knows what the value would be?

But the potential worst-case scenario costs could be quite high. You could say, “well, this might help some awful state or terrorist out there eventually get nuclear capability.” And so that’s why it’s easy to say that we can afford to wait a couple years since the benefits are really modest. That isn’t bad logic.

It’s very hard to argue that releasing any small fact—the diffraction of a wavelength through a certain material, for example—will somehow improve democracy or democratic discourse. The problem arises when you end up with this incredibly swollen collection of secrets, most of which are probably not that important. The aggregate of the system produces an effect where nuclear policy and knowledge live in their own world. That has had a very silencing effect on many topics related to nuclear weapons.

Susan D’Agostino: Does that silence also slow down an engaged public discussion? Do you think it has contributed to the massive US stockpile of nuclear weapons?

Alex Wellerstein: Oh, for sure. Most people were not aware of exactly how ridiculous the stockpile got, especially in the Cold War, but even today. You can look up this information now in such fine periodicals as the Bulletin of the Atomic Scientists, but most people are still pretty ignorant. The secrecy fosters that ignorance, even if the information is out there. Nuclear weapons are perceived as the most secret of the secrets. Whenever I talk to people online about nuclear weapons effects or what we know about deployments of warhead designs, they joke, “I’m on a [government watch] list now.” It’s a joke, but it also reflects this sensibility that proper people don’t talk about this.

Even presidential control of nuclear use authority is one of those topics where people—even scholars—will say there’s just too much that’s secret for us to even know what we’re talking about. It turns out that there is a lot of information out there, but there’s this perception that it’s not even worth trying to have real democratic deliberation. People essentially kick it up to a layer of policy that is itself within the classification sphere. That’s had long-term negative effects on the size of the stockpile, the types of weapons deployed, and the strategies. A very small number of people are making decisions on a matter that affects at least hundreds of millions, if not more.

Susan D’Agostino: It’s disturbing, yet you’ve also noted that some find comfort in nuclear secrecy. What role does “security theater” play in making people feel safe?

Alex Wellerstein: There’s a ritualistic aspect that’s not unique to nuclear secrecy. We see this not only in government secrecy, but in fraternities, secret societies, lodges, Masons, whatever. These group rituals aren’t only about preserving the secret but also about making one feel like part of a select group and as if one has more control over this little domain than one actually does. The danger here is that people on the outside can see all of this and think that everything on the inside must be well run, which is not necessarily the case.

On the flip side, they could also suspect it isn’t well run. In the ’70s you had an inversion where a significant number of people saw secrecy and thought, “ah ha, that’s where corruption is!” I call this the politics of anti-secrecy, which is the same impulse that produces its own degradation. They’re both worrisome.

One of my favorite areas of tension in the Cold War is when the people inside the secrecy system discovered that things that they didn’t think were known outside of secrecy were known. It turns out secrets are very easy to find if you go looking for them, but very few people who are inside the regime of secrecy actually have experience in looking at what is known outside. They’re very poor at saying what’s a real secret and what’s classified as secret but is, in fact, on Wikipedia. These systems can be seductive and dangerous for those on the outside and the inside, as well.

Susan D’Agostino: Even the most anti-secrecy advocate is usually willing to say that some things need to be kept secret. So, who should determine what’s classified as secret?

Alex Wellerstein: It’s a really hard question to answer. When I get documents that they won’t release, like on a weapons system that the United States never built because it didn’t really work, I frequently think: How important can this be for the present world? Who are we thinking is going to build this? At the same time, I can also ask, “what’s the benefit of release?” It could help us understand the history, but the government’s going to rate that a lot lower than the potential downsides.

Right now, there are guides that say what is secret. Those are usually made by a small committee of people who are exactly the kind of people you’d want. For example, the guidebook for what could be released about laser fusion was put together by a bunch of physicists. Some are university researchers. Some are at national labs and knew more about specific weapons applications. They hashed out a policy that would both respect the need for the science to develop in a free and open way, but also respect that they saw some weapons implications that they didn’t want circulating in the wider world.

You could imagine a system in which people on the outside were able to get better explanations as to why things were secret. One of the reasons we reject secrecy decisions we don’t like is that they never come with an explanation. They come with at most a FOIA exemption number. If somebody could say to you, “you see, if I told you, that would actually give a scientist in North Korea an insight on how to make their weapons more powerful with less fuel,” then the reasonable person would say, “oh, that makes so much sense to me.” But of course, for them to explain all this, they’d have to tell me the secret. They’re not going to do that for obvious reasons. And so, there’s a way in which this is unsolvable.

In the ’90s, when they were trying to open things up, they brought those classification officials into a much wider conversation. They didn’t just have them talk with university scientists but with people who lived around production plants that might be polluting the environment or people downwind of nuclear testing. They had them talk with historians who do research on these topics and struggle to tell certain stories. If you could widen the perspective of the people within the classification system, it wouldn’t produce radical change overnight, but there might be more options. They could give slightly more information without giving away any secrets. They could say, “we really believe this could impact our nuclear proliferation or nuclear terrorism priorities.” People on the outside of these systems might not buy that, but they might feel like it was less arbitrary. It feels incredibly arbitrary when all you can get is, “no—national security.”

Susan D’Agostino: In Restricted Data, you note that even the “staunchly anti-secrecy Bulletin of the Atomic Scientists” self-censored on the hydrogen bomb in the 1950s. What sense did you make of that?

Alex Wellerstein: When I point that out, I don’t mean that they were hypocrites. The 1950s Bulletin had core political goals. But in some of the areas—especially the H-bomb—it’s not clear that pursuing the goal of openness was going to fulfill the goal of not encouraging the arms race. They erred on the side of the one they felt was safer.

Susan D’Agostino: What role do you think nuclear secrecy should play in journalism today?

Alex Wellerstein: [Secrecy] is a real issue that journalists face when dealing with topics on the edges of classification. In the United States, there needs to be a presumption of openness in journalism. If you get rid of that presumption, you start publishing whatever a government tells you to and then you very, very quickly are no longer being responsible to the broader public.

That said, if an investigative journalist finds some core information that the government wants to keep secret, the government will talk with those journalists, and say, “we’re not in control of you, but here’s the danger if you publish X.” That kind of engagement is actually quite productive. Journalists need to be skeptical and independent—and occasionally do things that people in the government aren’t going to like. If your goal is to inform the public—not to get the most clicks or to sell the most magazines—then some sort of moderation could be found. When a journalist totally ignores those considerations, gets a leak, and runs with it, that can be just as dangerous as acquiescing to a government demand not to release something.

The number one way that journalists end up with secrets is not because they’ve discovered some secret archive or because somebody lost a document on a train. It’s because someone in the government leaked it to them. And that’s usually a very incomplete version of a discussion that’s happening inside the government. It’s not usually whistleblowing. It’s usually, “I want to kill this program,” or “I want to make this person’s political fortunes more limited.”

Journalists have, at times, allowed themselves to be pawns. In the nuclear area today—not the Bulletin of the Atomic Scientists—you do see some of the coverage of Iran in, say, the New York Times, where they’re clearly getting selective leaks to paint a picture that works with a political position that whoever is leaking to them—our government, somebody else’s government, or some third party—would like. That’s the kind of area where the allure of the secret could be to the detriment of good journalistic practices. But the Bulletin’s great. Don’t change a thing.

Susan D’Agostino: We have no plans to change.

Alex Wellerstein: The difficult thing here is none of us know the future. There’s a line from Lewis Strauss—the [former] head of the Atomic Energy Commission who took down [J. Robert] Oppenheimer. He’s awful. He’s so odious at every turn. I try not to have enemies but, my god, he’s really unpleasant. But he said something along the lines of, “a secret once released is released forever.” There’s a truth to that, and that produces a conservatism in releasing things. The Bulletin didn’t publish on the H-bomb because they knew if they did, that information would be out there indefinitely. But they gave themselves the option of publishing later.

Susan D’Agostino: Was there anything in your research for the book that surprised you?

Alex Wellerstein: Once you peel back the layer of secrecy—even in the Eisenhower years—you don’t find a bunch of angry malcontented bureaucrats on the other side. You find rich discussions about what should and shouldn’t be released. You find differences of opinion, not a monolithic view. You don’t find a total adherence to secrecy or even a total faith in it. But you find fairly reasonable people who cannot predict the future, who cannot determine necessarily what the right choice between several difficult choices is.

I was also surprised that so many aspects of the system that we’ve come to take for granted are really determined by a tiny number of people—maybe six or seven people. Even the restricted data clause was probably written, drafted, and proofread by about three people. The entirety of the classification system was set up on the guidelines of about seven people. Looking at this is important now and in the future. We don’t have to feel like this was set up in the most perfect way in the most perfect system by the most perfect people and thus can never be changed. These are human systems set up by other human beings. They bear all of the mistakes and fallibilities that any of us would have today. We should feel authorized to rethink decisions made 60 or 70 years ago that we’re still living with.
