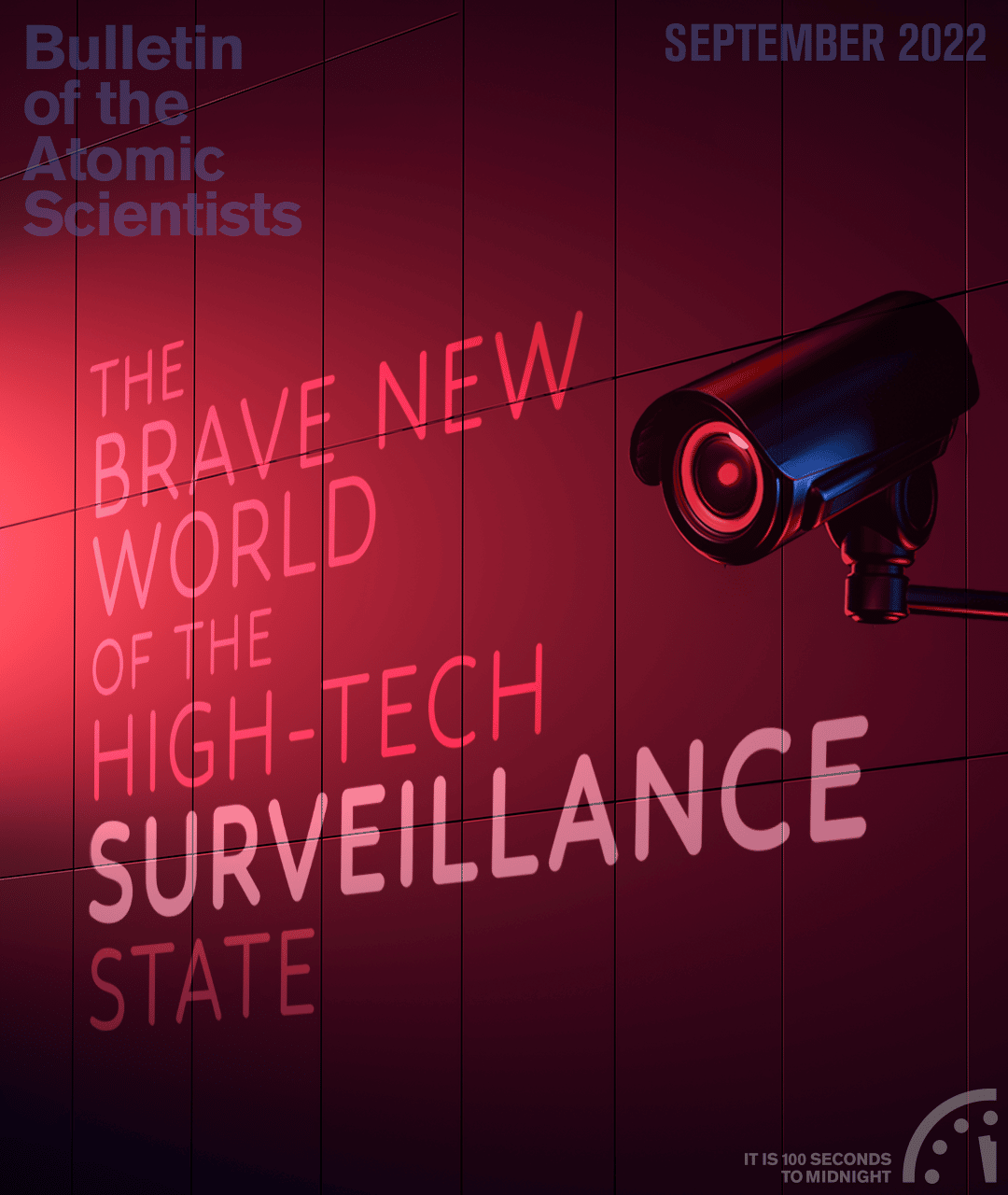

The high-tech surveillance state is not restricted to China: Interview with Maya Wang of Human Rights Watch

By Dan Drollette Jr | September 8, 2022

As readers may know from media exposés, the Chinese government has already implemented an authoritarian surveillance state in its province of Xinjiang, where the Uyghur Muslim minority is constantly subject to cameras, facial recognition algorithms, biometrics, abundant checkpoints, big data, and constant screening—making them live inside what some have called a “virtual cage.” Some of this technology has spread to other parts of the country, in one form or another. But what may have gotten lost in the shuffle is that there is no reason for the misuse of these technologies to be confined to communist China.

One of the first researchers to become aware of the size and extent of this surveillance apparatus was Maya Wang, whose ground-breaking research on China’s use of technology for mass surveillance has helped to galvanize international attention on these developments. In this interview with the Bulletin’s Dan Drollette Jr., Wang explains how she learned of what was going on, what technologies are being used, and the thinking behind its implementation on the part of the Chinese government—and how this techno-authoritarianism could be a taste of what is to come.

(Editor’s note: This interview has been condensed and edited for brevity and clarity.)

Dan Drollette Jr.: I understand you’ve been covering this area for a while.

Maya Wang: I don’t think it’s an exaggeration to say that I’m probably the first person to discover the use of mass surveillance systems in China. That’s not to boast or anything, just stating a fact.

Drollette: How did you find out about China’s use of surveillance technology?

Wang: We at Human Rights Watch have been writing about—and documenting—the rise of the authoritarian surveillance technology state in China since 2017. As to how we found out about its existence—several different things happened at once around that time.

Our organization has been working with people on the ground in China for decades. And one of the people in China that I have worked with for many years, who himself is a veteran activist, told me that I should look at this experimental new smart card system being set up by Beijing on a trial basis.[1] And he said: “I’m really worried. Once it is fully enacted, it could have a really big impact on how we carry on activism.”

So that was when I started looking into these systems.

It was very confusing at first. And the reason why I said I’m probably the first person to document these monster surveillance systems was that there was zero literature on this wherever I looked at that time. There was nothing at all in English—and when you are researching in Chinese, it’s an entirely different universe. But eventually I began discovering more and more documents in Chinese, written by the marketing departments of the surveillance companies that sell these systems to the government—or sometimes written by the police. But they were all written in a way that was pretty glowing.

Drollette: What do you mean?

Wang: The writing was all about how this technology could better manage society and make everything more efficient and controllable.

I thought: “Wait a minute, this isn’t happening.” What you’re building is a surveillance state, but what you’re describing is much more positive.

And at the time, arbitrary detention technology wasn’t something that I knew about; I didn’t have a lot of background in subjects like facial recognition.

Meanwhile, I was researching what was happening in Xinjiang province, such as the political education camps being erected there.

So basically, I was researching this smart card system and looking at policing literature at the same time that I was hearing about what was happening in Xinjiang—which uses surveillance to control the local native Uyghur population—and these three things essentially clicked together. What I was discovering was that the Chinese government was building this massive surveillance state … and nobody had actually realized what was happening.

And of course, when I read that, I realized that there were similar things that had been going on that stretched way back in the past, but nobody had connected the dots. The individual pieces had been noticed by researchers but not put together.

A really useful seminal report that I found was written by a researcher named Greg Walton. In the year 2000, he wrote about China’s construction of something called the Golden Shield projects—precursors to what you could see today. And the reason they’re all coming to fruition now is that China has devoted a massive amount of resources to refining these forms of social control, and has been doing it for the past 20 years.

So it all essentially escalated into the amount of social control we see now in China, coming to a head just over the last five years.

Drollette: I’ve seen the use of this system in Xinjiang described in the New York Times as a “virtual cage,”[2] but I’m still trying to get a sense of what that means for the everyday person. If I understand correctly from something you said previously for the Project on Government Oversight,[3] the system is so intrusive that if you go to the gas station too many times a day, the system will pick up on that as something abnormal, flag it, and the police will send someone to investigate.

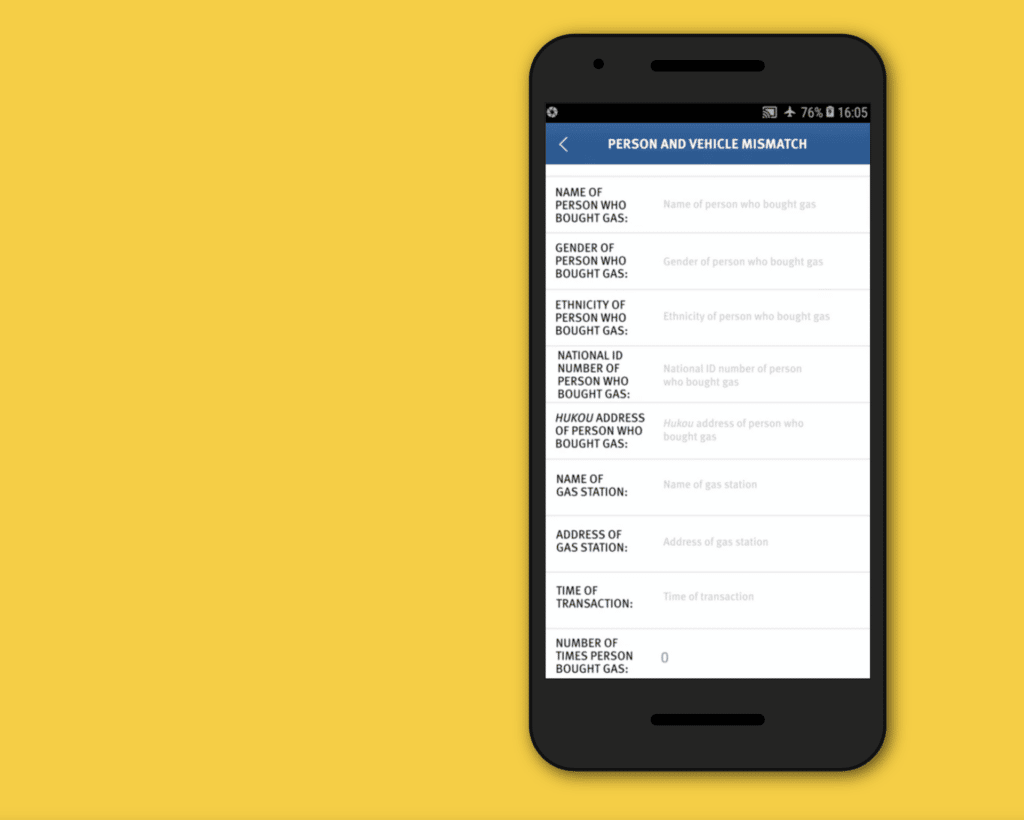

Wang: Yes, but that example actually involves the integration of more than one source of data. There are multiple companies, using multiple systems, and the Chinese authorities are trying to standardize and integrate them all. So, in Xinjiang, we documented the use of this system called the Integrated Joint Operations Platform that is connected to a sensor system around the region for gas stations. That means that when you pay at the gas station, you have to use your ID card and pass the license plate recognition system—and if you go to the gas station too many times, then police officers will come and investigate. And some of the people who are investigated can be sent to political education camps.

And the whole interconnected network of surveillance systems also looks for other things that are deemed suspicious—red flags such as growing a beard or listening to tapes from Muslim countries. Although sometimes we don’t know how much of this comes from the use of automated systems versus how much is through informants.

In Xinjiang province—that specific part of China—the Chinese government has long been more heavy-handed, because the people there are very visually, linguistically, and culturally different from Han Chinese. And they’re Muslims. So after September 11, any Uyghur exercising their separate identity and cultural distinctiveness suddenly became a subject of suspicion in the war on terror, in the eyes of the Chinese government.

Now, that’s not to say that there have not been violent incidents in Xinjiang that were caused by terrorists. There are some legitimate security concerns. But nonetheless, the response has been completely disproportionate. So this is how, under the banner of counterterrorism, the Chinese government essentially dialed up their mass surveillance systems in Xinjiang.

The thinking behind it is that this system can catch any kind of abnormal behavior.

Drollette: It sounds problematic.

Wang: Any law enforcement official will tell you that it’s really hard to predict acts of terrorism, because those have many different factors.

And the things that trigger the system to flag you are so many and varied: If people use too much electricity, if they use WhatsApp, if they circulate video and audio messages that are in Arabic, if they go into their home from the back door instead of the front door—these are all part of the range of suspicious activities that could lead to triggering the system and your interrogation. And the list goes on.

Some of these activities are not illegal under Chinese law. For example, if your phone hasn’t been switched on for two days, then the system picks you up. Now that is obviously not actually illegal—and yet the system would send a signal for police to interrogate you.

At the same time, there are also these data doors they have to pass through, which look like the airport security machines that you pass through but are in fact gathering the identification numbers of your connected devices—such as your MAC address or your phone’s IMEI [International Mobile Equipment Identity] number[4].

And the Integrated Joint Operations Platform acts kind of as a big brain to identify anything deemed abnormal.

Drollette: And it is starting to be used elsewhere in the country as well?

Wang: Yes, in varying incarnations and to varying degrees. For instance, the authorities are also blanketing the country with these things called WiFi sniffers, which are a bit like what I was describing with the data doors that the Uyghurs have to go through at checkpoints.

In the rest of China, people don’t go through checkpoints. Instead, in public spaces, these WiFi devices essentially sniff out your phone’s identifying information—which can then be used to find out your movements and relationships.

I think a lot of people in China simply do not understand that these systems are being put in place and are running in the background.

Drollette: I imagine that the Chinese government would say: “Well, what’s so bad about this? We’re just trying to make for a safer society, where there’s less crime and we can better deal with disasters.” How do you respond to that critique?

Wang: From a human rights perspective, governments can collect people’s data, but that collection has to be necessary, proportionate, and lawful, and meet the standards of legality.

And now, in China, none of these conditions are met.

So, I would say that the collection of data by the police falls completely outside of these parameters. There’s no rule of law; the party controls the law. It has such wide powers that there’s very little restraint on their collection of data. Which is why it is so bad: These kinds of collection systems don’t meet international human rights standards.

And it’s open to abuse. There can be government officials just filling a quota: “We need to gather so many people for this detention center, because I’ve got to meet my quota.”

Now, the government claims that these are crime-fighting tools. But if you look at where they’re being used, how they’re being used, who they’re targeting, and what these systems were designed for, then what they are actually being used for is social control.

For example, in Xinjiang, they claim that all these surveillance capabilities are being used to, quote, “precisely target terrorists” unquote.

Everything about those three words is false.

There’s nothing precise about these systems, and there’s no real targeting, because all they’re essentially doing is terrorizing the entire population. And these are not terrorists: People will sometimes use too much electricity, or sometimes go on WhatsApp.

It takes several leaps of imagination to go from someone using too much electricity to someone being a terrorist.

Now, admittedly, if you incarcerate millions of people, one of them is bound to be a terrorist. But that doesn’t mean it’s an effective system. And you destroy everyone’s human rights.

Drollette: At the end of the day, is the whole surveillance system really about simply ensuring that the Chinese Communist Party can stay in power indefinitely?

Wang: Yes. I think it’s clearly the case that in China, the Chinese Communist Party designed these systems with the explicit intention of maintaining their grip on power—though I think it’s also very important to raise the work of my fellow researcher Samantha Hoffman, who pointed out that the Chinese Communist Party’s use of this kind of techno-enhanced authoritarianism is also aimed at achieving a certain level of efficiency in the delivery of government services.

The idea is that, outside of some of the systems that I do not like—such as police surveillance—these systems are also about developing especially smart cities, which are meant to be more efficient in delivering resources, cutting down pollution, making people happier and healthier.

Drollette: What can we do to prevent this kind of thing from gaining traction here?

Wang: I think it already is here to some extent, if in a different form.

I have the impression that people think about China as this center of techno-authoritarianism and that the United States is somehow a techno-democracy. But what is actually happening is that the United States also inspires and leads in many areas that involve mass surveillance and mass collection of data—just think of the revelations surrounding the National Security Agency. A number of US tech companies are pioneers in what is termed by professor Shoshana Zuboff as “surveillance capitalism.”[5] A number of Western companies are selling surveillance systems to authoritarian governments around the world, and a number of Chinese companies are selling these systems to the West.

So essentially, what we’re seeing is not so much a case of techno-authoritarianism versus techno-democracy, but more of a kind of digital race to the bottom. That said, people in democracies are better protected, because there are laws and institutions in place to protect them and fight for their rights.

Drollette: What first drew you to this whole topic? Did you or any of your family have any connection to Tiananmen Square?

Wang: When I started work at Human Rights Watch in early 2000, China was in the early stages of forming a thriving civil society. It was small, but it was blossoming. And that pretty much got eradicated.

Now, I think that happened not just because of the use of surveillance systems. But I do think that if you look at the history of societies, the powerless and the powerful are always engaged in a struggle. And believe it or not, humans have generally moved towards greater equality over time.

Now, however, these surveillance systems and these powerful internal security apparatuses—especially in China—have essentially distorted that trend, so that ordinary citizens become more powerless and the state becomes more powerful. And that means that it could come to a point where dissent becomes irrelevant, and the government no longer even worries about dissent, because they could always crush it.

And for me, that would be kind of like the end of what we value: human equality, dignity, and the freedom to choose to live the way we want. If you eradicate that freedom, you eradicate humanity.

That is why I think the use of mass surveillance is particularly gripping as a problem for humanity.

Endnotes

[1] Known as the “social credit system,” these government-issued cards assign each person points based on their behavior. Lose enough points—by having an unpaid fine, for example, or driving through a red light—and you can be banned from riding on a train or plane, buying property, or taking out a loan. See “The Complicated Truth about China’s social credit system” https://www.wired.co.uk/article/china-social-credit-system-explained.

[2] See “ ‘An Invisible Cage’: How China is Policing the Future” https://www.nytimes.com/2022/06/25/technology/china-surveillance-police.html.

[3] See “China’s Surveillance State” https://www.pogo.org/podcast/bonus-chinas-surveillance-state.

[4] A phone’s International Mobile Equipment Identity number is the unique 15-digit code that identifies the phone, or any other device that connects to a cellular network. It is tied to the device itself rather than to the SIM card.

[5] See “Shoshana Zuboff: Surveillance capitalism is an assault on human autonomy” https://www.theguardian.com/books/2019/oct/04/shoshana-zuboff-surveillance-capitalism-assault-human-automomy-digital-privacy.
