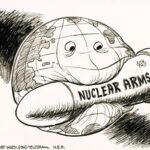

Fukushima and the inevitability of accidents

By Charles Perrow | December 1, 2011

The March 11, 2011, disaster at the Fukushima Daiichi Nuclear Power Station in Japan replicates the bullet points of most recent industrial disasters. It is outstanding in its magnitude, perhaps surpassing Chernobyl in its effects, but in most other respects it simply indicates the risks we run when we allow high concentrations of energy, economic power, and political power to form. Just how commonplace, even prosaic, this disaster was illustrates just how risky the industrial and financial world really is.

Nothing is perfect, no matter how hard people try to make things work, and in the industrial arena there will always be failures of design, components, or procedures. There will always be operator errors and unexpected environmental conditions. Because of the inevitability of these failures, and because there are often economic incentives for business not to try very hard to play it safe, government regulates risky systems in an attempt to make them less so. Formal and informal warning systems constitute another method of dealing with the inherently risky systems of industrial society. And society can always be better prepared to respond when accidents and disasters occur.

But for many reasons, even quality regulation, close attention to warnings, and careful plans for responding to disaster cannot eliminate the possibility of catastrophic industrial accidents. Because that possibility is always there, it is important to ask whether some industrial systems have such huge catastrophic potential that they should not be allowed to exist.

Regulations. Nuclear safety is problematic when nuclear plants are in private hands because private firms have the incentive and, often, the political and economic power to resist effective regulation. That resistance often results in regulators being captured in some way by the industry. In Japan and India, for example, the regulatory function concerned with safety is subservient to the ministry concerned with promoting nuclear power and, therefore, is not independent. The United States had a similar problem that was partially corrected in 1975 by putting nuclear safety into the hands of an independent agency, the Nuclear Regulatory Commission (NRC), and leaving the promotion of nuclear power to the Energy Research and Development Administration, whose functions later passed to the Energy Department. Japan is now considering such a separation. It should make one. Since the accident at Fukushima, many observers have charged that a revolving door between industry and Japan's nuclear regulatory agency (what the New York Times called a "nuclear power village") has compromised the regulatory function.

Of course, even in Europe, where for-profit firms have less power, there are safety problems that have needed more effective oversight. But by and large, European nuclear plants, which are generally part of a state-run industry, appear to be safer than the privately owned, poorly regulated nuclear plants in the United States, Japan, and other countries.

Systemic regulatory failure, as opposed to simple error, is tricky to identify accurately. After an accident in a risky industry, it is always possible to find some failure of a regulatory agency. Everything, after all, is subject to error, in regulatory agencies as well as in chemical or power plants. To say that regulation failed on a system-wide basis, one must have strong evidence of agency incompetence or collusion.

The Union Carbide chemical plant in Institute, West Virginia, is my favorite example of regulatory incompetence; in this case, it was a matter of regulators seeing what they were apparently predisposed to see. Shortly after a Union Carbide pesticide plant in Bhopal, India, leaked methyl isocyanate gas in December 1984, killing thousands, the Occupational Safety and Health Administration (OSHA) inspected the company’s West Virginia plant and gave it a clean bill of health. What happened in Third World India could not happen in the United States, it was said.

Nine months later, an accident quite similar to Bhopal occurred at the plant, though the gas released was not as toxic and the wind was in a favorable direction, so only some 135 people were hospitalized. OSHA looked again and, predictably, found “an accident waiting to happen” and cited the plant for numerous violations, despite its clean bill of health nine months before. There was a trivial fine and a Union Carbide promise to store only the small amounts of the toxic gas actually needed for production.

Union Carbide soon resumed massive storage of methyl isocyanate. Bayer subsequently took over the plant and, in 2008, an explosion killed two workers and threatened to release 4,000 gallons of the deadly gas. Subsequent investigation by the US Chemical Safety Board again found an accident waiting to happen. OSHA appears not to have noticed that its strictures on the amount of storage were violated.

Regulations will always be imperfect. They cannot cover every exigency, and, unfortunately, almost anything can be declared the cause of an accident. One can also make the case that too much regulation interferes with safe practices, as nuclear plant operators have always claimed in the United States. But the overregulation complaint is undermined by the following anecdote: A few years ago, the NRC sharply increased the number of inspections of nuclear power plants following some embarrassing near-misses. A then-powerful US senator, Pete Domenici of New Mexico, a recipient of large campaign donations from the industry, called in top NRC officials and threatened to cut the agency’s budget in half if it did not reduce the number of inspections. The NRC reduced its inspections. I doubt that anything similar could take place in Europe.

Regulatory capture is widespread in many risky US industrial systems and often subtle, but not always. In the Interior Department's Minerals Management Service, for example, representatives of the oil industry and regulators who were supposed to be overseeing oil exploration exchanged sexual favors and drugs. This intramural partying was disclosed just before the BP-leased Deepwater Horizon oil rig blew up in the Gulf of Mexico, resulting in the largest oil spill of its kind, making the regulatory failure especially dramatic.

Charges of regulatory failure were also levied in the 2010 Massey Energy coal mine disaster in West Virginia, which killed 29; the explosion at BP’s Texas City, Texas, refinery in 2005, which killed 15 and injured at least 170; and BP’s massive oil pipeline break in 2006 in Prudhoe Bay, Alaska.

The full contents of this article are available in the November/December issue of the Bulletin of the Atomic Scientists.
