Was a flying killer robot used in Libya? Quite possibly

By Zachary Kallenborn | May 20, 2021

A screenshot from a promotional video advertising the Kargu drone. In the video, the weapon dives toward a target before exploding.

Last year in Libya, a Turkish-made autonomous weapon, the STM Kargu-2 drone, may have “hunted down and remotely engaged” retreating soldiers loyal to Libyan General Khalifa Haftar, according to a recent report by the UN Panel of Experts on Libya. Over the course of the year, the UN-recognized Government of National Accord pushed the general’s forces back from the capital, Tripoli, signaling that it had gained the upper hand in the Libyan conflict. But the Kargu-2 may mark something of even greater global significance: a new chapter in autonomous weapons, one in which they are used to fight and kill human beings based on artificial intelligence.

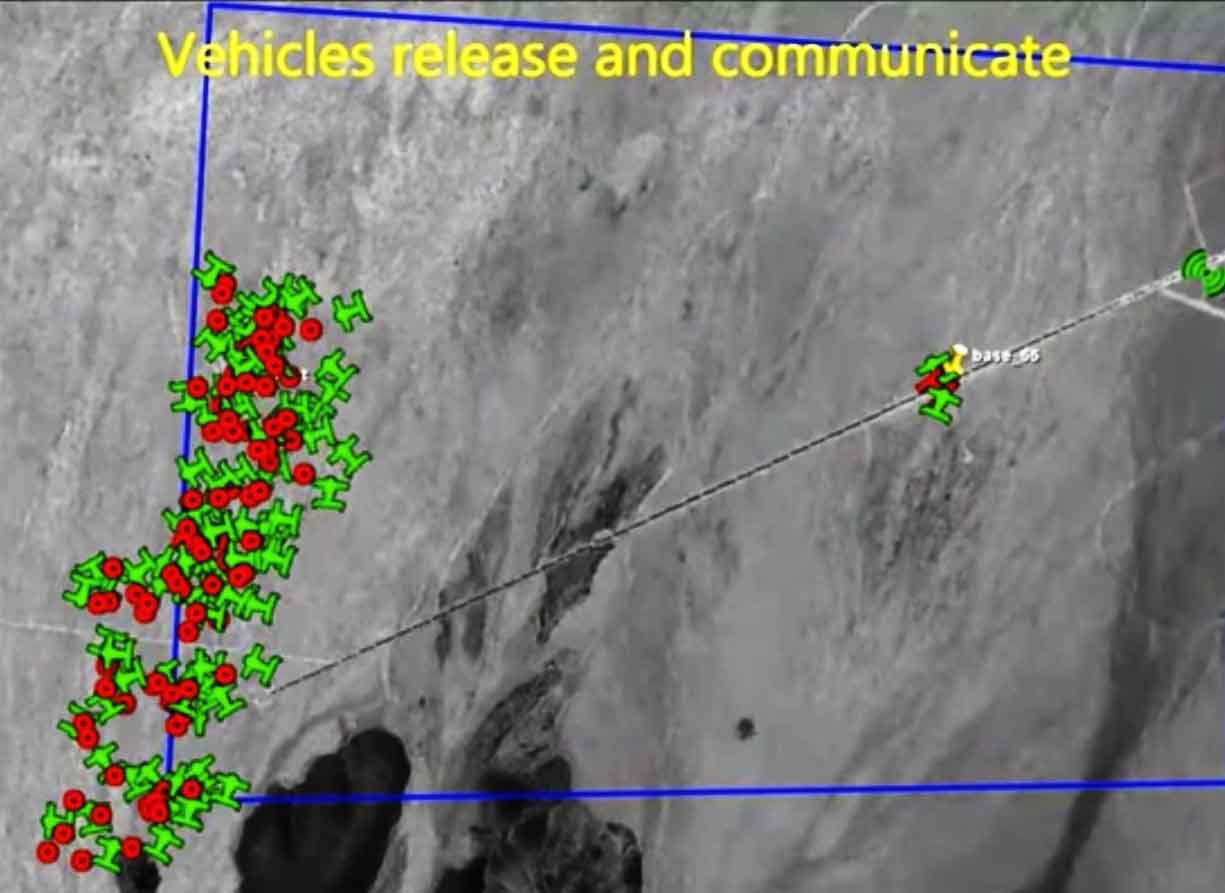

The Kargu is a “loitering” drone that can use machine learning-based object classification to select and engage targets, with swarming capabilities in development to allow 20 drones to work together. The UN report calls the Kargu-2 a lethal autonomous weapon. Its maker, STM, touts the weapon’s “anti-personnel” capabilities in a grim video showing a Kargu model in a steep dive toward a target in the middle of a group of mannequins. (If anyone was killed in an autonomous attack, it would likely represent a historic first: the first known case of artificial intelligence-based autonomous weapons being used to kill. The UN report heavily implies that people were killed, noting that lethal autonomous weapons systems contributed to significant casualties among the crews of the manned Pantsir S-1 surface-to-air missile systems, but it is not explicit on the matter.)

Many people, including Stephen Hawking and Elon Musk, have said they want to ban these sorts of weapons, saying they can’t distinguish between civilians and soldiers, while others say they’ll be critical in countering fast-paced threats like drone swarms and may actually reduce the risk to civilians because they will make fewer mistakes than human-guided weapons systems. Governments at the United Nations are debating whether new restrictions on combat use of autonomous weapons are needed. What the global community hasn’t done adequately, however, is develop a common risk picture. Weighing risk vs. benefit trade-offs will turn on personal, organizational, and national values, but determining where risk lies should be objective.

It’s just a matter of statistics.

At the highest level, risk is the product of the probability of error and the consequence of that error. Any given autonomous weapon has some chance of messing up, but those mistakes could have a wide range of consequences. The highest-risk autonomous weapons are those that have a high probability of error and kill a lot of people when they err. Misfiring a .357 magnum is one thing; accidentally detonating a W88 nuclear warhead is something else.
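
To make the probability-times-consequence framing concrete, here is a minimal Python sketch that treats risk as expected harm: the probability that a use goes wrong multiplied by the deaths that error would cause. The `WeaponProfile` type, the `expected_harm` function, and every number in it are hypothetical placeholders chosen only to illustrate the comparison; none is an estimate for any real weapon.

```python
from dataclasses import dataclass


@dataclass
class WeaponProfile:
    """Hypothetical risk profile; both numeric fields are illustrative assumptions."""
    name: str
    p_error: float          # probability that a given use goes wrong (0.0 to 1.0)
    deaths_if_error: float  # consequence: deaths caused when it does go wrong


def expected_harm(w: WeaponProfile) -> float:
    """Risk as the product of the probability and the consequence of error."""
    if not 0.0 <= w.p_error <= 1.0:
        raise ValueError("p_error must be a probability between 0 and 1")
    return w.p_error * w.deaths_if_error


# Placeholder numbers, not real data: an error-prone handgun vs. a very reliable warhead.
handgun = WeaponProfile("misfiring handgun", p_error=0.05, deaths_if_error=1)
warhead = WeaponProfile("accidental warhead detonation", p_error=0.000001,
                        deaths_if_error=500_000)

for w in (handgun, warhead):
    print(f"{w.name}: expected deaths per use = {expected_harm(w):.3f}")
```

With these made-up inputs, the warhead's chance of error is 50,000 times smaller than the handgun's, yet its expected harm comes out ten times larger, because the consequence term dominates.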

There are at least nine questions that are important to understanding where the risks are when it comes to autonomous weapons.

How does an autonomous weapon decide who to kill? Landmines, in some sense an extremely simple autonomous weapon, use pressure sensors to determine when to explode. The firing threshold can be varied to ensure the landmine does not explode when a child picks it up. Loitering munitions like the Israeli Harpy typically detect and home in on enemy radar signatures. As with landmines, the sensitivity can be adjusted to separate civilian from military radar. And thankfully, children don’t emit high-powered radio waves.

But what has prompted international concern is the inclusion of machine learning-based decision-making as was used in the Kargu-2. These types of weapons operate on software-based algorithms “taught” through large training datasets to, for example, classify various objects. Computer vision programs can be trained to identify school buses, tractors, and tanks. But the datasets they train on may not be sufficiently complex or robust, and an artificial intelligence (AI) may “learn” the wrong lesson. In one case, a company was considering using an AI to make hiring decisions until management determined that the computer system believed the most important qualification for job candidates was being named Jared and playing high school lacrosse. The results wouldn’t be comical at all if an autonomous weapon made similar mistakes. Autonomous weapons developers need to anticipate the complexities that could cause a machine learning system to make the wrong decision. The black box nature of machine learning, in which how the system makes decisions is often opaque, adds extra challenges.

What role do humans have? Humans might be able to watch for something going wrong. In human-on-the-loop configurations, a soldier monitors an autonomous weapon’s activities and, if the situation appears to be headed in a horrific direction, can make a correction. As the Kargu-2’s reported use shows, a human-off-the-loop system simply does its thing without a safeguard. But having a soldier in the loop is no panacea. The soldier may trust the machine and fail to adequately monitor its operation. For example, Missy Cummings, the director of Duke University’s Humans and Autonomy Laboratory, finds that when it comes to autonomous cars, “drivers who think their cars are more capable than they are may be more susceptible to increased states of distractions, and thus at higher risk of crashes.”

Of course, a weapon’s autonomous behavior may not always be on: a human might be in, on, or off the loop depending on the situation. Along the demilitarized zone with North Korea, South Korea has deployed a sentry weapon called the SGR-A1 that reportedly operates this way. The risk changes based on how and when the fully autonomous function is switched on. Autonomous operation by default obviously creates more risk than autonomous operation restricted to narrow circumstances.

What payload does an autonomous weapon have? Accidentally shooting someone is horrible, but vastly less so than accidentally detonating a nuclear warhead. The former might cost an innocent person his or her life, but the latter may kill hundreds of thousands. Recognizing the cost of a mistake, policymakers may focus on the larger weapons, which could reduce the risks of autonomous weapons. However, exactly what payloads autonomous weapons will have is unclear. In theory, autonomous weapons could be armed with guns, bombs, missiles, electronic warfare jammers, lasers, microwave weapons, computers for cyber-attack, chemical weapons agents, biological weapons agents, nuclear weapons, and everything in between.

What is the weapon targeting? Whether an autonomous weapon is shooting a tank, a naval destroyer, or a human matters. Current machine learning-based systems cannot effectively distinguish a farmer from a soldier. Farmers might hold a rifle to defend their land, while soldiers might use a rake to knock over a gun turret. But even classifying a vehicle accurately is difficult, because various factors may inhibit an accurate decision. For example, in one study, obscuring the wheels and half of the front window of a bus caused a machine learning-based system to classify the bus as a bicycle. A tank’s cannon might make it easy to distinguish from a school bus in an open environment, but not if trees or buildings obscure key parts of the tank, like the cannon itself.

How many autonomous weapons are being used? More autonomous weapons means more opportunities for failure. That’s basic probability. And when autonomous weapons communicate and coordinate their actions, such as in a drone swarm, the risk of something going wrong increases further. Communication creates the risk of cascading error, in which an error by one unit is shared with others. Collective decision-making also creates the risk of emergent error, in which individually correct interpretations add up to a collective mistake. To illustrate emergent error, consider the parable of the blind men and the elephant. Three blind men hear that a strange animal, an elephant, has been brought to town. One man feels the trunk and says the elephant is thick like a snake. Another feels a leg and says it’s like a pillar. A third feels the elephant’s side and describes it as a wall. Each one perceives physical reality accurately, if incompletely, but their individual and collective interpretations of that reality are incorrect. Would a drone swarm conclude the elephant is an elephant, a snake, a pillar, a wall, or something else entirely?
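
The “basic probability” point can be sketched in Python under one big simplifying assumption: that each unit errs independently, with the same per-unit error rate p. Under that assumption, the chance that at least one of n units errs is 1 - (1 - p)^n. The function name and the 1 percent error rate below are illustrative assumptions, not figures from the UN report or from STM.

```python
def p_at_least_one_failure(p_error: float, n_units: int) -> float:
    """Chance that at least one of n_units errs, assuming each unit errs
    independently with the same probability p_error (a simplifying assumption)."""
    if not 0.0 <= p_error <= 1.0:
        raise ValueError("p_error must be between 0 and 1")
    if n_units < 0:
        raise ValueError("n_units must be non-negative")
    # Complement rule: 1 - P(no unit errs) = 1 - (1 - p)^n
    return 1.0 - (1.0 - p_error) ** n_units


# Hypothetical 1 percent per-unit error rate; 20 echoes the Kargu swarm size mentioned above.
for n in (1, 20, 100):
    print(n, round(p_at_least_one_failure(0.01, n), 3))
```

With a 1 percent per-unit error rate, the chance of at least one failure rises to roughly 18 percent for 20 units and roughly 63 percent for 100. Communication is exactly what breaks the independence assumption: cascading and emergent errors make failures move together, so many simultaneous failures become far more likely than this simple model suggests.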

Where are autonomous weapons being used? An armed, autonomous ground vehicle wandering a snow-covered Antarctic glacier has almost no chance of killing innocent people. Not much lives there, and the environment is mostly barren, with little to obstruct or confuse the vehicle’s onboard sensors. But the same vehicle wandering the streets of New York City or Tokyo is another matter. In cities, the AI system would face many opportunities for error: trees, signs, cars, buildings, and people may all confound correct target assessment.

Sea-based autonomous weapons might be less prone to error simply because, with fewer obstructions at sea, it may be easier to distinguish a military ship from a civilian one than a school bus from an armored personnel carrier. Even the weather matters. One recent study found that foggy weather reduced the accuracy of an AI system used to detect obstacles on roads to 58 percent, compared to 92 percent in clear weather. Of course, bad weather may also hinder humans in effective target classification, so an important question is how AI classification compares to human classification.
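
The fog figures look even starker when read as error rates rather than accuracy. The short Python sketch below uses the 92 and 58 percent figures from the study cited above and assumes, for illustration, that accuracy is simply the fraction of detections the system gets right, so the error rate is its complement; the study's exact metric may differ, and the function and variable names are hypothetical.

```python
def error_rate(accuracy: float) -> float:
    """Fraction of detections the system gets wrong, assuming accuracy is
    the fraction it gets right (0.0 to 1.0)."""
    return 1.0 - accuracy


clear_accuracy = 0.92  # accuracy in clear weather, per the study cited in the text
fog_accuracy = 0.58    # accuracy in fog, per the same study

clear_errors = error_rate(clear_accuracy)  # about 8 errors per 100 detections
fog_errors = error_rate(fog_accuracy)      # about 42 errors per 100 detections

print(f"errors per 100 detections, clear weather: {clear_errors * 100:.0f}")
print(f"errors per 100 detections, fog: {fog_errors * 100:.0f}")
print(f"error rate multiplier in fog: {fog_errors / clear_errors:.2f}x")
```

Seen this way, a 34-point drop in accuracy means roughly five times as many errors: about 42 per 100 detections instead of about 8.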

How well tested is the weapon? Any professional military would verify and test whether an autonomous weapon works as desired before putting soldiers and broader strategic goals at risk. However, the military may not test for all the complexities that may confound an autonomous weapon, especially if those complexities are unknown. Testing will also be based on anticipated uses and operational environments, which may change as the strategic landscape changes. An autonomous weapon robustly tested in one environment may break down when used in another. Seattle has a lot more foggy days than Riyadh, but far fewer sandstorms.

How have adversaries adapted? In a battle involving autonomous weapons, adversaries will seek to confound operations, which may not be very difficult. OpenAI—a world-leading AI company—developed a system that can classify an apple as a Granny Smith with 85.6 percent confidence. Yet, tape a piece of paper that says “iPod” on the apple, and the machine vision system concludes with 99.7 percent confidence the apple is an iPod. In one case, AI researchers changed a single pixel on an image, causing a machine vision system to classify a stealth bomber as a dog. In war, an opponent could just paint “school bus” on a tank or, more maliciously, “tank” on a school bus and potentially fool an autonomous weapon.

How widely available are autonomous weapons? States and non-state actors will naturally vary in their risk tolerance, based on their strategies, cultures, goals, and overall sensitivity to moral trade-offs. The easier it is to acquire and use autonomous weapons, the more the international community can expect the weapons to be used by apocalyptic terrorist groups, nefarious regimes, and groups that are just plain insensitive to the error risk. As Stuart Russell, a professor of computer science at the University of California, Berkeley, likes to note: “[W]ith three good grad students and possibly the help of a couple of my robotics colleagues, it will be a term project to build a weapon that could come into the United Nations building and find the Russian ambassador and deliver a package to him.” Fortunately, technical acumen, organization, infrastructure, and resource availability will limit how sophisticated autonomous weapons are. No lone wolf terrorist will ever build an autonomous F-35 in his garage.

Autonomous weapon risk is complicated, variable, and multi-dimensional: the what, where, when, why, and how of use all matter. On the high-risk end of the spectrum are autonomous nuclear weapons and the use of collaborative, autonomous swarms in heavily urban environments to kill enemy infantry; on the low end are autonomy-optional weapons used defensively in unpopulated areas, and only when death is imminent. Where states draw the line depends on how their militaries and societies balance risk of error against military necessity. But to draw a line at all requires a shared understanding of where risk lies.
