Whistleblower: Facebook undermines kids and democracy. Will Congress do something?

By Matt Field | October 5, 2021

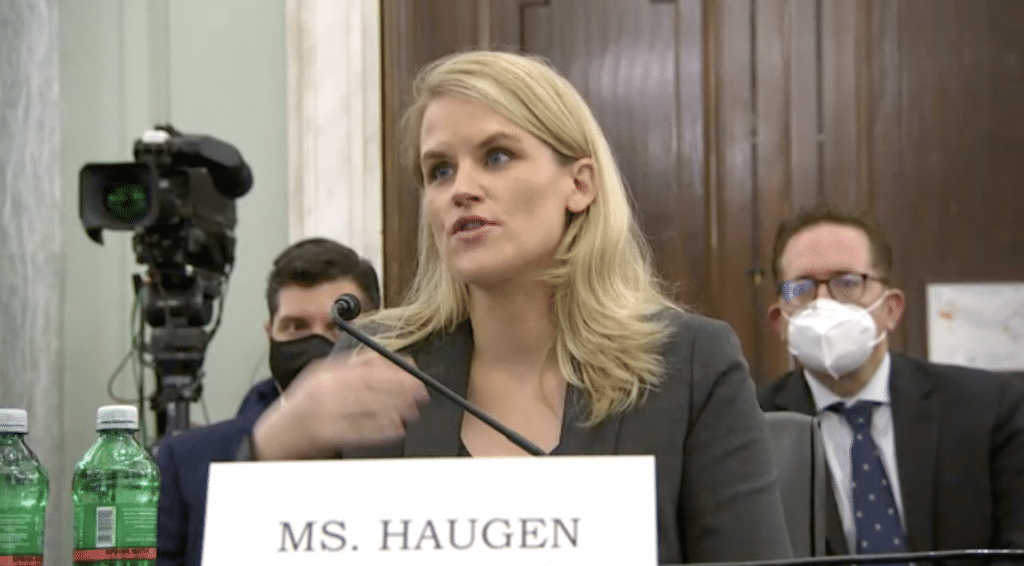

Frances Haugen, a former Facebook employee who leaked internal documents to the press and US lawmakers, testifies to Congress. Credit: US Senate.

Social media reform is a complex issue, and the litany of terms that come up in debates around the topic, from algorithms to misinformation and disinformation and more, can obscure the societal implications of billions of users spending significant chunks of their waking lives scrolling through social media feeds. One former Facebook employee, however, seemed to boil down all the complexity and lay out just what was at stake: Facebook, Frances Haugen told Congress on Tuesday, promotes ethnic violence, harms kids, and threatens democratic governance.

One of Haugen’s most breathtaking assertions centered on the extent to which Facebook knows about the emotional damage its products like Instagram can inflict on children. The former product manager shared her concerns with Congress in evocative terms. “Kids who are bullied on Instagram, the bullying follows them home. It follows them into their bedrooms. The last thing they see before they go to bed at night is someone being cruel to them, or the first they see in the morning is someone being cruel to them,” she said. Haugen leaked thousands of internal Facebook documents to The Wall Street Journal, which produced an in-depth investigative series on the company.

Facebook has the internal research that shows how harmful some of its decisions have been, she told lawmakers, likening efforts to regulate Facebook to previous efforts against Big Tobacco and opioids.

“I don’t understand how Facebook can know all these things and not escalate it to someone like Congress for help and support in navigating these problems,” she said of the company’s research into the effects of Instagram on kids.

After the 2016 US presidential election, when Russian intelligence operatives orchestrated a massive social media disinformation operation to boost former President Donald Trump, Facebook went to great lengths to tamp down on the flow of problematic content on its platforms. The company didn’t want to be seen as allowing election interference to flourish ahead of the 2020 campaign. In part, the company responded by changing how easily posts could spread, Haugen said.

“The choices that were happening on the platform were really about: How reactive and twitchy was the platform? How viral was the platform? And Facebook changed those safety defaults in the runup to the election because they knew they were dangerous,” she said.

But those adjustments to Facebook’s back-end systems hurt efforts to keep users engaged—a priority for an advertising-driven company—and after the election, some changes were reversed, Haugen said: “They wanted the acceleration of the platform back. After the election they returned their original defaults.”

Of course, the threat that social media disinformation posed to democracy didn’t stop on Election Day. As votes were being tallied, Trump quickly seized on the vote-by-mail process that states had adopted during the pandemic and began to cast doubt on the results; organizers of the “Stop the Steal” effort promoted disinformation on Facebook and other platforms to rally the public behind Trump. When throngs of right-wing Trump supporters beat their way past police and broke into the US Capitol just as Congress was preparing to certify the election results, Facebook knew it had a big problem, Haugen said. The company quickly tried to address the disinformation coursing through its systems.

“The fact that they had to break the glass on Jan. 6 and turn [safety measures] back on, I think that’s deeply problematic,” she said.

A good way to do well on Facebook is to be inflammatory, Haugen told Congress, and the company knows it.

According to the Journal investigation, researchers with Facebook documented how political parties and publishers were adjusting to “outrage and sensationalism” after the company implemented a change intended to encourage users to spend time engaging with people they knew rather than “passively consuming professionally produced content.”

Haugen told lawmakers that excessively promoting engaging content, which is frequently the content designed to elicit an extreme reaction, posed deep risks.

The company’s algorithms, she said, are promoting anorexia, ethnic violence, and political divisiveness. If Facebook were “responsible for the consequences of their intentional ranking decision, I think they would get rid of engagement-based ranking.”

According to an internal April 2020 memo, a Facebook employee briefed the company’s CEO, Mark Zuckerberg, on potential changes that would reduce “false and divisive content” on the company’s platforms. While saying he was open to “testing” proposals, Zuckerberg told a team at the company that he wouldn’t accept changes that would impact engagement, including one that would have “taken away a boost the algorithm gave to content most likely to be reshared by long chains of users,” according to the Journal.

While Facebook users around the world have their experiences curated by the company’s algorithms, which can promote harmful content, they don’t always benefit from programs the company has implemented to protect people from potential harms, Haugen said.

The Journal documented how pro-government forces in Ethiopia, for example, were using Facebook to promote violence against people from a separatist region there. In many Ethiopian languages, the company’s “community standards,” which dictate acceptable behavior on the platform, aren’t even available. For most languages on Facebook, the company hasn’t built artificial intelligence systems that can identify harmful content, the Journal reported.

“They have admitted in public that engagement-based ranking is dangerous without integrity and security systems but then not rolled out those integrity and security systems to most of the languages in the world,” Haugen said. “And that’s what’s causing things like ethnic violence in Ethiopia.”

“We don’t agree with her characterization of the many issues she testified about,” Lena Pietsch, a Facebook spokesperson, said in a statement about Haugen, according to The Washington Post.

Many proposals for reforming social media companies involve changes to Section 230 of the Communications Decency Act, a federal law that largely shields platforms from liability for user-generated content. Haugen said it's not user content on Facebook that Congress should concern itself with, but the algorithms the company has designed to manage that content.

“User-generated content is something that companies have less control over,” she said. “They have 100 percent control over algorithms. And Facebook should not get a free pass on choices it makes to prioritize growth and virality and reactiveness over public safety. They shouldn’t get a free pass on that because they are paying for their profits right now with our safety. I strongly encourage reform of 230 in that way.”

In a US Congress that can't accomplish basic tasks such as authorizing the US Treasury to make payments and that is stalling on everything from voting rights reform to infrastructure bills, lawmakers seemed notably united Tuesday in wanting to do something about Facebook.

“Mr. chair,” Republican Sen. John Thune said to Democratic Sen. Richard Blumenthal, “I would simply say, let’s get to work. We got some things we can do here.”

Keywords: 2024 election, Disinformation, Facebook, Instagram

Topics: Analysis, Disruptive Technologies

I wonder if it would change anything if Facebook got put under public ownership.