Spotting Frankensteins: Why humans beat AI at detecting freakish fakes

By Sara Goudarzi | October 11, 2022

Photo by Amanda Dalbjörn on Unsplash

Photo by Amanda Dalbjörn on Unsplash

In 2020, a Tesla vehicle on autopilot mode crashed into an overturned truck on a busy highway in Taiwan. The crash was just one example of several well-documented artificial intelligence (AI) system failures in autonomous vehicles. But still, one wonders how the vehicle’s autopilot system missed such a large object on the road. It turns out that the answer isn’t much of a mystery to those in the field.

Computer vision systems can only recognize objects they have been trained on. The Tesla AI system is likely to have been trained to identify a truck but only if the truck is upright. The semi-trailer lying on its side was in an unfamiliar orientation and did not match the trained experience of the network. Once the driver of the vehicle realized what was happening, they put on the brakes, but it was too late to avoid the crash.

To a human driver, it didn’t matter how the truck was orientated, because we are capable of recognizing objects by relying on configural relations between local features. But deep convolutional neural networks—a popular form of artificial intelligence for “seeing,” that uses the patterns found in images and videos to recognize objects—are incapable of seeing like us, which can be dangerous in some applications.

To better understand why deep AI models fail at configural shape perception, the Bulletin’s Sara Goudarzi spoke with James H. Elder, professor and research chair in Human and Computer Vision at York University, who co-authored a recent study detailed in iScience which found that deep convolutional neural networks are not sensitive to objects taken apart and put back together wrong. Such understanding could help avoid accidents like the one in Taiwan.

Sara Goudarzi: Tell us a little bit about your research into how deep convolutional neural networks process visual information.

James H. Elder: Our lab at York University works on understanding human visual perception and computer vision. So, we’re trying to better understand how the human brain processes images in order to build better AI. One of the core visual competencies of the human brain and other primate animals is the ability to recognize objects, and we rely heavily upon shape information to recognize objects. We know from decades and centuries of research that humans, even small children, can recognize an object from just a line drawing or a silhouette.

Recent deep AI models perform well on standard image recognition databases. (There’s a famous database called ImageNet that was introduced back in the late ’90s, early 2000s and then became a big contributor to the development of more powerful deep learning AI systems.) Also, these networks have been found to be fairly quantitatively predictive of response in the human and nonhuman primate brain. So, there’s this idea that maybe these are good models of how the human brain recognizes objects. However, we know from prior research that these networks are not as sensitive to shape information as humans. They rely more on what we call shortcuts: little texture or color cues.

One of the key attributes of human shape sensitivity is a sensitivity to configural shape. You can think of configural shape as the gestalt (or an organized whole) of an object, so something that’s not apparent if you look locally at the shape, but you have to somehow integrate information across the entire shape. In this study, we’re exploring differences in how humans and deep AI models can process and configure a global gestalt.

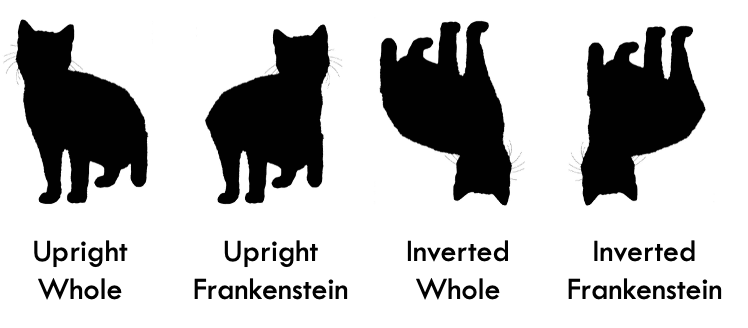

Goudarzi: To study differences and similarities between humans and deep AI models in object shape processing, you used what you call Frankensteins. Can you explain what they are and how humans and AI models interpret them?

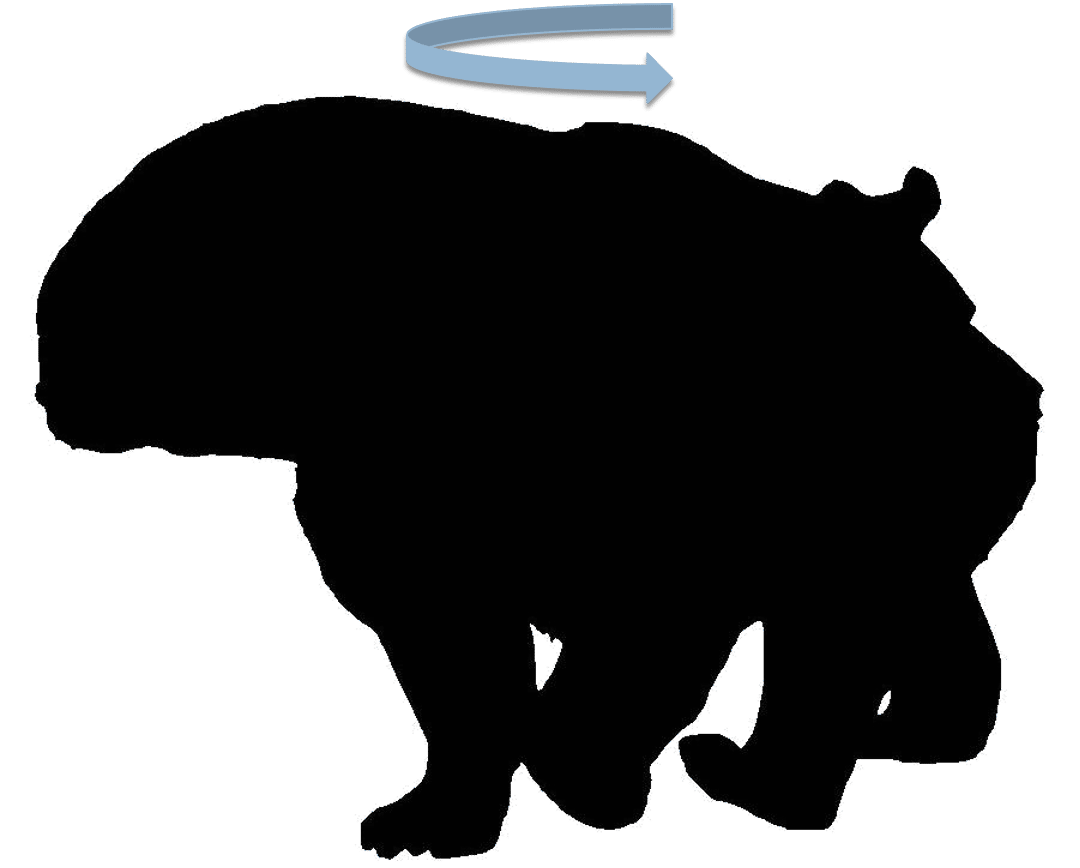

Elder: We wanted to devise a stimulus that would dissociate local shape cues from global shape cues. The idea was to disrupt shapes as little as possible, while still disrupting the configural nature of the shape. We basically take the top half of a shape and spin it around. For example, if the shape is a bear with its nose to the right and its tail to the left, we spin the top half around so that the nose is now pointed left, while the tail stays pointing to the left.

The way we do it is such that there’s no sudden discontinuity in the shape. It looks like a perfectly good shape—very smooth and like an object you might see in the world if you didn’t know about it. As a human, you know this doesn’t look right because the top half is in the wrong place. But somebody from another planet might say, “oh, that’s probably one of your animals, right?” Because you don’t know what you don’t know. That’s a Frankenstein. It comes from the Mary Shelley book and even more so from the movie, where we have this vision of how Dr. Frankenstein took body parts from different sources and then kind of stitched them together. We took the parts from the same animal and just stitched them together wrong.

It turns out Frankensteins are an extremely good stimulus because they do not affect deep AI models at all. To them, nothing seems to be wrong and Frankensteins seem perfectly acceptable. If it was a bear that we turned into a Frankenstein, the deep AI model says, “That’s a perfectly good bear.” And so that was an interesting result because it’s very different from what humans see.

Goudarzi: Is it just that humans have all this experience they’re drawing upon and these AI neural networks don’t? Or is it just that that the human brain doesn’t take shortcuts?

Elder: I’m not sure if it’s so much experience—in the sense of just having more data. I think it’s more the quality of tasks and decisions we make in our life. We’re not just object-recognition systems. The probable reason that humans are so successful is that we have very flexible cognitive abilities and we can reason about all sorts of different qualities of objects.

The other thing is that we understand objects in the context of our three-dimensional world. In our brains, if you see an object, you understand, “well, that object is sitting on the floor and it’s pointing this way and it’s beside these other objects.” You have a sense of where it is in space, you know how big it is, you know its shape, and that’s just fundamental to us because of course we live in our own 3-D world and even before we get to assigning the labels to objects, we have a sense of all these physical qualities.

We’re embodied in a 3-D world and we have to survive in that 3-D world. And so, there is a strong indication that rather than just discriminating between these say thousand categories of animals, we’re really building up a more complete model of the world that we see before us in our head. Not that we’re thinking about it cognitively, but it’s there to support multiple tasks as we perform them.

Goudarzi: What happens when deep neural networks encounter Frankensteins? What’s failing here?

Elder: In theory, these networks are constructed so that mathematically they should potentially be able to extract this configural information, because you can think of the processing as happening in stages. At the first stage, there are artificial neurons that are looking at just local parts of a shape, but as you progress through the network to higher and higher stages, the neurons are processing bigger and bigger chunks of the shape until you get to toward the output. And those neurons are potentially processing the whole shape. So theoretically, they could be mimicking human perception. But it’s challenging for these networks to be trained to do that. So, if there is a simpler solution to a task they’re being trained to perform, they’ll take that solution. And simple is typically more local.

For example, if you’re playing poker and an opponent’s eye twitches when they have a good hand that’s a tell, and you can use that as a shortcut, right? You don’t have to do all the complicated math to figure out how you should play. In a similar way, if there’s any little local cue that networks can use—like the shape of the bear’s feet—to say that’s a bear and it’s not, let’s say, a moose, then they’ll tend to rely on that more than the global shape. The reason seems to be that it’s difficult to train a network so that it becomes sensitive to those global shapes.

We’ve tried changing architectures and introducing more recent innovations and deep AI models, many of which are based on how we think the brain processes information. But none of those innovations really had a big impact on this problem. To get over this kind of shortcut, we need to force the network to perform tasks that go beyond just recognition, because recognition is susceptible to these shortcuts. More complex question, such as, “what condition is the animal in?” or “how do these different animals compare in shape?” that infer the shape of the animal, require more than the simple label of, say, a bear.

The way the human visual system works when, for example, you see an animal in the woods, isn’t so you just see this label flash before you and say, “Oh, that’s a bear.” You have a whole kind of rich sensory experience with regards to that animal. You can talk about the size and the shape and age and so forth. You’re building up a whole set of attributes. That’s a big difference.

Goudarzi: What can researchers do to make AI better at recognizing Frankensteins?

Elder: The standard deep convolutional neural networks are feed-forward networks, so information flows from the input image to the output in a linear way. We know the brain doesn’t work that way. The brain is a recurrent system, so we have both feed-forward and feed-back circuitry. We’ve tried a recurrent artificial deep neural network, but the feedback did not seem to lead to configural perception. We’ve tried incorporating attentional mechanisms that are motivated a bit by human visual function and more recent transformer networks, which are kind of an extension of attention.

More recently, we’ve been working on training networks to encourage them not to use these shortcuts by basically giving them not just intact images but also jumbled images and then telling the networks essentially to develop a representation that’s very different for the jumbled image and the intact image. Because if we take one of the networks that’s been through training and give it a jumbled image, it will more or less think that image looks normal because it’s not really paying attention to the global information. It’s just looking locally. Locally everything looks good. So, we’re basically teaching them “No, you’re not allowed to do that. You have to have a different representation for these jumbled images than for these intact images.”

That does seem to lead to more global configural processing. It probably doesn’t completely solve the problem. What we think is necessary, as mentioned, is to train networks to do more human-like tasks, so basically be able to reason more richly about the nature of the object. That’s more of a hypothesis right now, but it’s something we plan to work on.

Goudarzi: Why is this inability or failing in AI deep neural networks a problem?

Elder: Here is one big concern: Imagine we have an AI system that detects and recognizes objects using these local shortcuts, just little features. If the object is a car, it might recognize a headlight or a tail fin; if it’s a person, it might recognize a leg or an arm. That’s okay maybe if it’s a single object in isolation and they know it’s there, but if you need to do more than just recognize the class of objects, if you need to, for example, know which way the object’s pointed—like you certainly need to know which way a car is pointed and driving or which way a pedestrian is going—then just seeing it as local features may not be sufficient.

Even more problematic is when you get a cluster of objects together, as we do in crowded traffic scenes. There’s a real risk that if you’re just relying on these local features, the system will fail to individuate the objects properly. If you just imagine you’ve got a jumble of local features, you’ve got a few headlights, tail fins, tires, antennas, heads, arms and legs, is the system really going to know and understand the three-dimensional relationships between all those objects? That’s absolutely crucial for traffic safety, whether it’s an autonomous vehicle or a safety system. We work on a lot of safety systems for intersections, and we really want to have a system where if you have a jumble of people crossing at a crosswalk, it’s able to individuate each of them and know how far they are from the curb, how far they are from the vehicle, etc. So, that’s a big concern I have.

Goudarzi: What would you say are some of the applications that successfully use AI in vision processing?

Elder: One is Google image search, through which you can find images based upon text because there’s algorithms that can automatically map text images. We have autonomous vehicles and also just assisted vehicles—technologies that use images just from cameras in vehicles to improve safety. There are systems for security and for user authentication. There are also object recognition systems more generally for retail applications to find information about a product that you might consider buying.

Object recognition has huge applications for general use in robotics and so forth. So, it’s important, particularly for purposes like search and rescue missions where you need machines to be able to reliably identify objects, and, of course, in complex driving scenarios where those differences with respect to the human visual system might lead to vulnerabilities.

(Editor’s note: This interview has been edited for length and clarity.)

Together, we make the world safer.

The Bulletin elevates expert voices above the noise. But as an independent nonprofit organization, our operations depend on the support of readers like you. Help us continue to deliver quality journalism that holds leaders accountable. Your support of our work at any level is important. In return, we promise our coverage will be understandable, influential, vigilant, solution-oriented, and fair-minded. Together we can make a difference.

Keywords: AI, artificial intelligence, autonomous vehicles, vision

Topics: Artificial Intelligence, Disruptive Technologies