A first take on the White House executive order on AI: A great start that perhaps doesn’t go far enough

By Gary Marcus | October 30, 2023

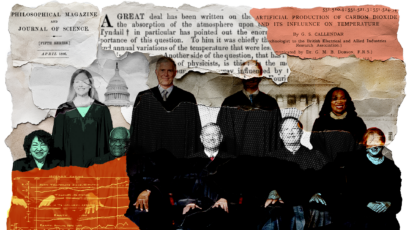

President Joe Biden and Vice President Kamala Harris arrive to announce the Biden administration's approach to artificial intelligence Monday. President Biden issued a new executive order on Monday, directing his administration to create a new chief AI officer, track companies developing the most powerful AI systems, adopt stronger privacy policies, and "both deploy AI and guard against its possible bias," creating new safety guidelines and industry standards. (Photo by Chip Somodevilla/Getty Images)

Editor’s note: This piece originally appeared in the Substack newsletter “Marcus on AI” and is published here with permission.

The White House has just released a lengthy fact sheet on a wide-ranging executive order on AI that’s expected later today. I haven’t seen the final version of the order. But first impressions:

- There’s a lot to like in the executive order. It’s fantastic that the US government is taking the many risks of AI seriously. So much is covered that I can’t quickly summarize it all, and that’s a good thing. There’s discussion of AI safety, bioweapons risk, national security, cybersecurity, privacy, bias, civil rights, algorithmic discrimination, criminal justice, education, workers’ rights, catalyzing research, and much more. Clearly a lot of people have worked hard on this, and it shows.

- How effective this actually becomes depends a lot on the exact wording, and how things are enforced, and how much of it is binding as opposed to merely voluntary.

- As an example of how the details (which I can’t see yet here) really matter, one of the most important passages in the fact sheet comes early, but is a bit unclear, in multiple ways: “In accordance with the Defense Production Act, the Order will require that companies developing any foundation model that poses a serious risk to national security, national economic security, or national public health and safety must notify the federal government when training the model, and must share the results of all red-team safety tests.” First, and this is a big one, does this section apply only to defense procurements, or to any consumer product (e.g. a chatbot psychiatrist) that could pose safety threats? Second, who decides what poses a serious risk, and how? (Would e.g. GPT-4 count? If not, what kind of risk would forthcoming models like GPT-5 or Gemini have to carry before these provisions would activate?) Third, will the results of the red-team safety tests be shared publicly? With whom? (And on what time scale? Before or after the product is released?) What if scientists foresee a risk that the company has not adequately tested?

- The executive order, as good as it is, may fall short. Companies will poke for loopholes and surely find them. With respect to the example above, they will probably try to argue that their specific products don’t meet the risk threshold. Even if they do agree, if I understand correctly, they only have to share results of internal testing, no matter how bad the risks might be. This is a long, long way from the kind of FDA approval process that I advocated for here and in the Senate. Even if a company had a product that poses clear risks, they would (if I read this correctly) still be free to release it, so long as they told the government the risks. When the FDA approves a drug, they look for evidence that the benefits outweigh the risks; paperwork alone is not enough. What we really need is a peer-review process by independent scientists; it still seems like the companies are free to do largely as they please. That’s not enough.

- On the whole, there is a lot of good stuff around guidance, but I hope that we ultimately see more that is binding, with teeth.

…

Secondarily, a major UK journalist just asked me, “Wondered if you’d seen the executive order on AI? Do you think this takes the wind out the sails of the [upcoming UK AI] summit?”

Let’s put it this way. Biden’s executive order sets a high initial bar. The executive order is broad, focusing on both current and long-term risks, with some (though probably not enough) teeth. The UK Summit, later this week, seems to have greatly narrowed its own focus, primarily looking at long-term risk, with not enough focus on the here and now, and it’s just not clear how much of what comes out of it will have teeth, or even what authority it really has. We don’t need another position paper; we need concrete implementation. The US executive order is an important first step in that direction; it will be interesting to see whether the UK Summit is able to carry the ball further downfield.

…

Update, a few minutes later: Upon reading this essay, George Mason University expert Missy Cummings pointed out a loophole big enough to sail an aircraft carrier through. The order applies its requirements only to companies developing “foundational models.” But not all AI uses foundational models (e.g., until recently probably few driverless car systems relied in a significant way on foundational models, and some probably still don’t), and it’s easy to add some other gadgets and say your model is not a foundational model. Framing things as only pertaining to foundational models is plainly too narrow.

I am thrilled to see at least a little of what I have been talking about put into practice. Let’s make sure we get all the way over the goal line.
