Where Biden’s AI policies fall short in protecting workers

By Hanlin Li, Nick Vincent | February 5, 2024

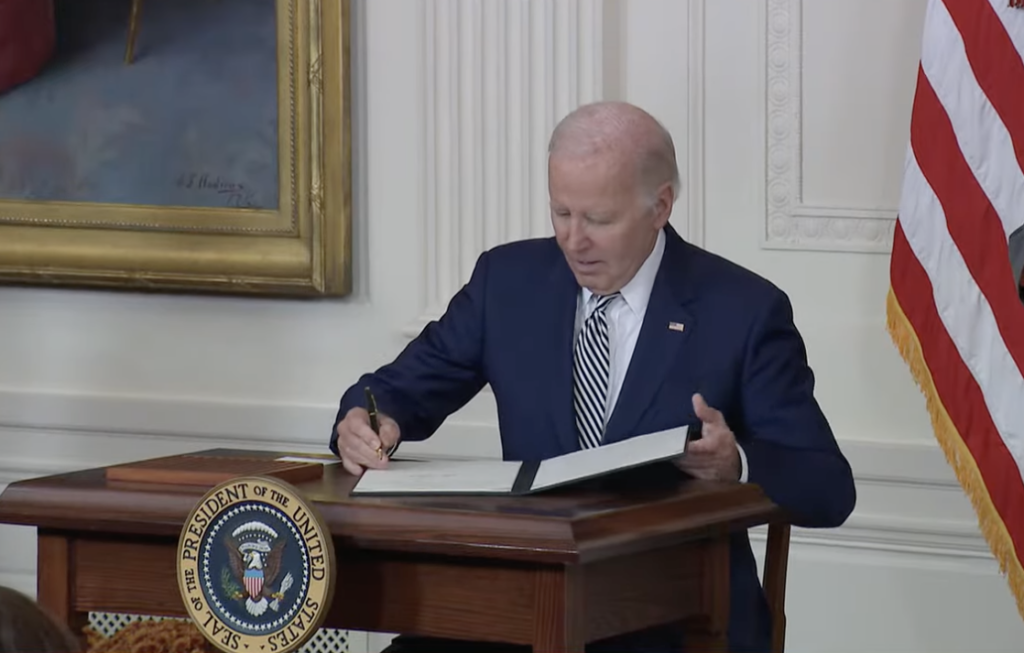

President Biden signs an executive order on safe, secure, and trustworthy development and use of artificial intelligence. Image: video screenshot from the White House

On October 30th, 2023, President Biden’s administration published an executive order on “safe, secure, and trustworthy artificial intelligence,” available in summary form here. This marked a major step forward in an era of more widespread and capable AI systems. The fact that government officials are gesturing towards serious investment in auditing and standardization of this field is particularly heartening, in line with calls to support the involvement of public bodies in artificial intelligence (similar to initiatives like “Public AI”).

Members of the public have played an essential role in supporting artificial intelligence by performing “data labor”—activities that generate the records underlying AI systems. Data laborers include a variety of hired workers around the world, as well as people who produce data outside of a formal job, such as everyday internet users, both of which are sometimes referred to as “crowdworkers.” Most prominent AI systems would not have been feasible to build without the data, content, and knowledge that humans contributed to online spaces. These records now make up the training datasets for AI models.

Supporting the workers whose efforts underlie these systems will be crucial for building a sustainable and strong AI economy. Doing this well and quickly is imperative because most of us are likely to become data laborers of one form or another in the coming years. To better protect workers in the age of AI, world leaders should focus on how work is defined, look to the Fair Labor Standards Act as guidance, strengthen workers’ data control, and support audits that create a healthy relationship between data’s use and value.

First, it’s important to note that the executive order does directly touch on concerns facing workers, especially creatives and others likely to be directly impacted by generative AI like chatbots. The executive order indicates direct support for collective bargaining, echoing past statements from the current White House outside the context of AI. Worker compensation and labor disruptions are also recognized as important issues. These concerns resonate with discussions in popular media about the economics of digital technologies. For instance, in a book published last year, Power and Progress, authors Daron Acemoglu and Simon Johnson highlight the role of worker power in steering new technologies towards broad benefit.

Section 6 of the executive order mentions some specific, concrete actions related to workers’ concerns, including “a report analyzing the abilities of agencies to support workers displaced by the adoption of AI” and a plan to “publish principles and best practices for employers that could be used to mitigate AI’s potential harms to employees’ well-being and maximize its potential benefit.” So, it seems that on top of safety and trust-related concerns, the executive order includes substantive plans to support workers. To build on this progress, however, the White House and other world leaders will need to take further steps.

The first step is to broaden the definition of “work” to accommodate all the ways humans create valuable data in the AI era. While all the key points highlighted in the executive order regarding workers—compensation, organizing, job displacement, and more—are critical, there is room to expand on these points in future policy work.

Currently, AI systems are powered by a multitude of data laborers, some of whom do not produce data as part of their formal job. Data-generating activities—including interactions with technology, writing Wikipedia articles, answering questions on Reddit, and sharing images online—make language and text-to-image models possible and thereby underlie AI capabilities.

Such a far-reaching data collection apparatus for AI also means that more and more people are going to experience the issues currently facing creative workers. In short, people might not think of themselves as data laborers, or think that AI could affect their jobs. But given that most people have some connection to the data pipelines upstream of modern AI, in the long run it is likely that an increasing number of workers will be impacted in some way. Therefore, legislators should be seriously concerned about AI’s potential disruptions to labor.

A great many activities mediated by computers and mobile devices ought to be seen as a kind of work, because they power AI systems, a point that was highlighted in Microsoft CEO Satya Nadella’s testimony in the recent Google antitrust trial. As such, when thinking of federal-level interventions related to job displacement, fair compensation, and collective negotiation, it could be helpful to look to existing cases in which there is already some degree of invisible work powering AI by crowdworkers, independent contractors, and uncompensated data creators. The executive order referenced the Fair Labor Standards Act of 1938 when discussing how workers “monitored or augmented by AI” should receive appropriate compensation. This provision should apply to all data laborers, including those whose work is harvested to train AI without compensation.

Furthermore, setting standards regarding the relationship between federal AI regulations and intellectual property will be valuable, especially regarding the issue of the possible theft of creative output. The government might enforce new standards regarding transparency about how employers use workers’ and contractors’ data and content, including but not limited to creative work, trace data, and communication data.

The second step to building on the current executive order is taking measures to bolster worker agency—that is, the feeling of some degree of power and control over their actions (and the consequences of those actions)—when it comes to AI. Increasing workers’ awareness of their contribution to this technology will be essential so they can bargain with full knowledge about the value they bring to AI and their employers.

The executive order also suggests that there is a need to develop principles and best practices around data collection in the context of AI work. This point is not expanded on quite as much as some of the other focuses of the executive order, but it is centrally important. Workers should have access to the data collected from them and information about how such data contributes to the AI used.

New federal guidelines about data collection and use for AI may provide workers with the necessary resources and infrastructure to understand their role in AI and potentially enable workers (broadly defined) to leverage their data in their collective negotiation with employers.

This direction also underlies concerns around privacy that are laid out in the executive order. Privacy-related initiatives could provide more fine-grained control over data flow to workers. Ideally, if the federal government can help workers take data-related actions, this could lower the barrier to workers “voting with their data” and provide workers with more bargaining chips with their employers.

Once workers have stronger control over their data, a natural next step would be to audit or challenge workplace AI systems. Due to the opaqueness of existing artificial intelligence systems, workers have no way of investigating algorithmic harms in workplaces, such as wage discrimination, job displacement, and bias. With more access to worker-generated data, workers and labor unions would be able to conduct time studies, audits, and investigations to gain a more comprehensive understanding of AI’s impact on labor and labor relationships.

Given that the executive order also centers on maintaining American leadership, such steps could help spread “data agency” globally. Standard-building and global leadership could be a big deal for both workers most immediately affected by AI and members of the public interested in collective action to promote responsible artificial intelligence outcomes.

So, while prioritizing safety-related concerns (ranging from fraud to national security), this executive order sets the stage for federal support for data-related empowerment of workers—both those who directly interface with AI systems, and those members of the public who generate data but may not immediately experience job displacements or other harms from this technology.

Keywords: AI, artificial intelligence, data, labor, training data, workers rights

Topics: Artificial Intelligence, Disruptive Technologies

AI doesn’t scare me. If jobs CAN be taken by AI, they SHOULD be taken by AI. It just underscores even further the need for a UNIVERSAL BASIC INCOME! At least for the bottom 25% of Americans. How do we pay for it? By consolidating all existing social spending and going after the waste, fraud and abuse at the Pentagon. That’s how!