‘I’m afraid I can’t do that’: Should killer robots be allowed to disobey orders?

By Arthur Holland Michel | August 6, 2024

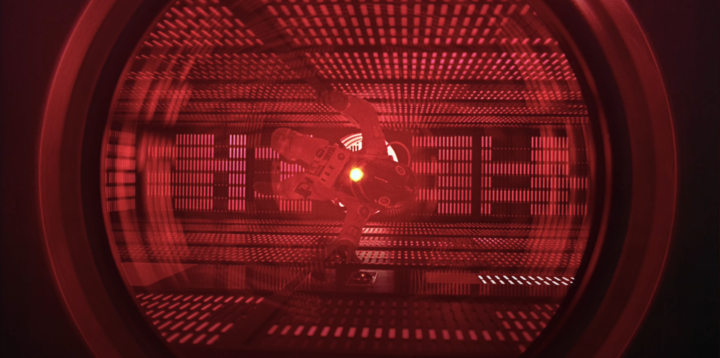

Still image of HAL 9000 from Stanley Kubrick's movie "2001: A Space Odyssey." Credit: Warner Bros

It is often said that autonomous weapons could help minimize the needless horrors of war. Their vision algorithms could be better than humans at distinguishing a schoolhouse from a weapons depot. They won’t be swayed by the furies that lead mortal souls to commit atrocities; they won’t massacre or pillage. Some ethicists have long argued that robots could even be hardwired to follow the laws of war with mathematical consistency.

And yet for machines to translate these virtues into the effective protection of civilians in war zones, they must also possess a key ability: They need to be able to say no.

Consider this scenario. An autonomous drone dispatched to destroy an enemy vehicle detects women and children nearby. Deep behind enemy lines, without contact with its operator, the machine has to make a decision on its own. To prevent tragedy, it must call off its own mission. In other words, it must refuse the order.

“Robot refusal” sounds reasonable in theory. One of Amnesty International’s objections to autonomous weapons is that they “cannot … refuse an illegal order”—which implies that they should be able to refuse orders. In practice, though, it poses a tricky catch-22. Human control sits at the heart of governments’ pitch for responsible military AI, and giving machines the power to refuse orders would cut against that principle. Meanwhile, the same shortcomings that hinder AI’s capacity to faithfully execute a human’s orders could cause it to err when rejecting an order.

Militaries will therefore need to either demonstrate that it’s possible to build ethical, responsible autonomous weapons that don’t say no, or show that they can engineer a safe and reliable right-to-refuse that’s compatible with the principle of always keeping a human “in the loop.”

If they can’t do one or the other, or find a third way out of the catch-22, their promises of ethical and yet controllable killer robots should be treated with caution.

Last year, 54 countries, including the United States, United Kingdom, and Germany, signed a political declaration insisting that autonomous weapons and military AI will always operate “within a responsible human chain of command and control.” That is, autonomous weapons will act solely, and strictly, under the direct orders of their human overseers. Others, like the Russian Federation, have stressed that autonomous weapons must possess a capacity to discriminate between legal and illegal targets. And yet if machines can outperform humans as moral experts, as one recent study (and multiple states) claim, they’ll be hard-pressed to apply that expertise if they can only ever agree with their human users.

Allowing a machine to say “I won’t do it” when presented with an order would sharply curtail the authority of its human commanders. It would give machines an ultimate decision-making power that no state would relinquish to a computer. It might be possible to instruct a weapon to avoid hurting civilians while obeying its other orders. But this implies that the weapon would be making legal judgments on matters such as proportionality and discrimination, and the International Committee of the Red Cross, among others, argues that only humans can make legal judgments because only humans can be held legally responsible for harms.

In short, the idea of autonomous weapons that refuse orders would be a non-starter. Armies want machines that act as extensions of their will, not as a counterweight to their intent. Even countries that hold themselves to the highest legal standards—standards which rely on all soldiers being empowered to say no when given a bad order—would probably balk at the proposition. Meanwhile, militaries that intend to deliberately violate the law won’t want machines that comply rigidly with the law.

As such, the killer robots that countries are likely to use will only ever be as ethical as their imperfect human commanders. Just like a bullet or a missile, they would only promise a cleaner mode of warfare if those using them seek to hold themselves to a higher standard. That’s not a particularly comforting thought.

A killer robot with a “no mode” could also pose its own ethical risks. Just as humans won’t always give virtuous orders to the machines, machines wouldn’t always be right when they turn around and say no. Even when autonomous weapons get very good at differentiating combatants from children, for example, they will still sometimes confuse one with the other. They might not be able to account for contextual factors that make a seemingly problematic order legal. Autonomous weapons can also be hacked. As the war in Ukraine has shown, for example, techniques for jamming and commandeering drones are sophisticated and fast-evolving. Additionally, an algorithmic no mode could be exploited by cunning adversaries. A military might, for example, disguise its artillery positions with camouflage that tricks an autonomous weapon into mistaking them for civilian structures.

In other words, under some conditions a weapon might refuse a legal order. Given the formidable capabilities of these systems, that’s an unacceptable risk. There’s a reason that the most frightening part of 2001: A Space Odyssey is when the computer, HAL 9000, refuses astronaut David Bowman’s orders to let him back onto the ship, since doing so would jeopardize the mission assigned to it by the upper command. The line is a poignant warning for our times: “I’m sorry, Dave, I’m afraid I can’t do that.”

Therein lies the paradox. Giving autonomous weapons the ability to accurately distinguish the right course of action from the wrong one, without bias or error, is held up as a minimum criterion for allowing these machines onto the battlefield. Another criterion, predictability, holds that machines should only do what their commanders want and expect them to do. These principles of AI ethics are assumed to be compatible with the fundamental principle of control. The reality could be more complicated than that. Humans want autonomous machines to be noble, but can they allow the machine to be nobler than they are and still say that they are in charge?

It is, of course, possible that militaries could find a third way. For example, it may be enough for autonomous weapons to simply ask their commander “are you sure?” before proceeding with an order. Experts in international law have argued that if AI weapons can be engineered to prompt humans to reconsider their decisions, without overriding the orders themselves, they might enable militaries to make more legally sound choices in the heat of conflict. An AI system could, for example, urge a fired-up commander who is planning a hasty airstrike in a densely populated area to think twice, or seek a second human opinion, before pulling the trigger. Though this type of feature wouldn’t work in all contexts (for example, in the case of the drone that has no contact with its operator), it could, in some settings, save lives.

Militaries might not even be able to stomach such a modest proposition. After all, they want AI to accelerate their decision-making, not slow it down. But if states are dead set on using AI for war, and if they’re honest about wishing to reduce the misery of conflict, these are questions that they have no choice but to take seriously.

Keywords: AI, Artificial intelligence, autonomous weapons, killer robots, militaries

Topics: Disruptive Technologies

Give the system an objective function which includes a harm model and instruct it to minimize harm. If properly done and if the system is able to correctly assess the situation, it will use the ethical guidelines it is provided with and it will in some cases not attack. Important: the order is not to attack, the order is to minimize harm. See also: Governing ethical and effective behaviour of intelligent systems | Militaire Spectator. Defining such an objective function which includes a harm model requires a socio-technological feedback-loop approach involving all relevant stakeholders. My main point: the ethics are…

“Just nuke it from orbit, it’s the only way to be sure”

Radio interference has its own technological curve. In the long run militaries will be effectively prevented from communicating with their drones, which means those drones will all have to have a 100% autonomous mode.

What are the best possible Asimov rules for robotics? ChatGPT says: Isaac Asimov, a science fiction writer and biochemist, proposed three fundamental rules for robotics, commonly known as the “Three Laws of Robotics.” These rules are designed to ensure that robots operate safely and ethically in a human-centric environment. However, they have been the subject of debate and have inspired many variations and extensions. Here are Asimov’s original rules, followed by some possible extensions or modifications that might address their limitations. Asimov’s Original Three Laws of Robotics: 1. First Law: “A robot may not injure a human being,…

As an old infantryman I can see that robots are already on the battlefield. The next move is to get human soldiers off of it. If there are no human soldiers, then telling robots not to harm humans calls for a lot less decision-making by the robot, since any humans present would be non-combatants. Invariably we will screw that up. Humans cannot exercise control of robots in the field. There is too much going on in every direction. Robots will have to be programmed and sent out to do their jobs. Robots can have “eyes” seeing in 3 dimensions +…