Don’t panic: AI can strengthen democracy too

By Abi Olvera | October 1, 2024

Used wisely, AI can enhance citizen input, discourse, and collaboration. Credit: Thomas Gaulkin / Feodora52 / depositphotos.com

A townsperson woke Carlos Restrepo in the middle of the night telling him to flee. Residents of El Pital wanted to kill Restrepo because of his political leaning. This was 1940s Colombia, the beginning of a decade-long civil war between the Colombian Conservative Party and the Colombian Liberal Party. Colombia’s intense two-party rivalry, exacerbated by its winner-take-all political system, led to partisan enclaves in cities and regions, with areas becoming dominated by either liberals or conservatives. Restrepo’s family kept this account quiet until his granddaughter saw parallels between their story and the current American political climate.

“There weren’t a shared set of common occurrences, common facts, or a common national identity,” depolarization advocate Phelosha Collaros said about her grandfather’s time. Because their fragmented media outlets presented conflicting realities and painted the other side as evil, emotional polarization worsened and mutual toleration began to collapse.

Mutual toleration—the acceptance of opposing parties as legitimate rivals rather than existential threats—appears to be the most important lever for keeping democracies stable. When parties view each other as existential threats, any action seems justifiable, even killing a fellow resident.

Generative AI threatens mutual toleration through indirect means. While the technology democratizes complex data analysis, allowing more people to engage with diverse datasets and advanced tools, it can also lead to an increase in well-intentioned but mistaken interpretations of data. Instances of these errors, once detected, are often difficult to reverse or correct, eroding trust. AI also enables rapid creation of what can look like convincing fake media, potentially overwhelming fact-checkers during particularly time-sensitive periods, such as elections.

Deepfakes—hyper-realistic digital forgeries of audio, video, or images created using AI—and other AI-generated content “adds a straw to a stressed system,” said Elizabeth Seger, an epistemic researcher and the director of Tech Policy at the think tank Demos.

Generative AI’s impacts could become pivotal, given how important mutual toleration is to robust democracies. As mutual toleration drops, politicians who vow to protect the nation from the opposing party gain appeal, even if their actions erode democratic principles.

Changes that chip away at democracy include the abolition of term limits, subjugation of the judiciary, and expansion of executive authority. The United States Constitution offers no inherent protection against these changes. Multiple countries whose constitutions were modeled on the American one, such as the Philippines, Brazil, and Argentina, fell into autocracy during the 1970s and 1980s. While some democracies have collapsed in sudden coups, the more common downfall is a gradual weakening of democratic institutions, often through seemingly legal means. Since the 1990s, “executive takeovers” (where a democratically elected leader gradually consolidates power, eroding democratic checks and balances) have caused four out of every five democratic breakdowns.

Factors affecting mutual toleration in the United States include parties’ shift toward extremes, the post-1990s good-versus-evil framing of politics, misperceptions about the opposite party, the rise of partisan news after the 1987 repeal of the Fairness Doctrine (which had required broadcasters to air contrasting viewpoints on controversial issues), people’s holding of beliefs to affirm kinship identities, and strong allegiances that hinder political coalition-building.

As voter identification fuses with social identity, tolerance for opposing views diminishes. In the United States, supporters of the two major parties now vote primarily based on hostility toward the other side.

While these factors eroded mutual toleration without AI’s involvement, the anticipated direct impacts of AI on political discourse—such as rampant misinformation and deepfakes—have not materialized to the extent many feared. Instead, AI’s influence is likely to be more subtle.

The content curation paradox. While examples of AI-generated fake news websites and deepfakes are plentiful, only one percent of social media users see most fake news content—usually those few people predisposed to seek it out.

People still turn to trustworthy institutions like mainstream news and media organizations, John Wihbey, a media innovation professor at Northeastern University, explained. He added that though there are “worrisome patterns like consuming a lot of violent, antisocial, problematic content…that’s only a small group.” Additionally, most Americans spend less than a minute per day reading news online. Generative AI doesn’t increase demand for the increased supply of misinformation.

Where AI comes into play is in content distribution. Some experts worry that generative AI’s ability to decipher more about each news piece, tweet, or TikTok video could make curation more effective by better assessing content virality and increasing the content for each online niche. Studies show that, at least for now, ChatGPT has made only small improvements in targeting. While more complex models are better at reading people, “they’ve hit diminishing returns,” University of Zurich psychologist Sacha Altay said. Additionally, more content within each niche might not have large impacts given that, as with misinformation, the bottleneck is people’s attention.

It is important, however, to not make sweeping predictions about the future of information consumption. “The information environment doesn’t just float freely in abstract space,” Wihbey said. “It sits between institutions like…think tanks and nonprofits attentive to these issues, and courts that follow and uphold the rule of law,” which act as a mediating force. But, Wihbey warned, “We can imagine losing some institutional norms… so we have to be attentive to what these technologies are doing.”

This nuanced perspective on the evolving information landscape becomes particularly relevant because of the role that public trust has in maintaining mutual toleration.

The trust crisis and its political implications. The erosion of public trust in institutions, such as universities, civil society, courts, and governments, has been a growing concern for the past half-century, reaching new lows in recent decades. This decline stems largely from widespread skepticism about government competency and the responsiveness of various institutions.

As people lose faith in institutions, rival party victories appear as existential threats.

Even the fears about generative AI vary depending on who might use it or be exposed to it. Seger’s research found that “70 percent of people report worry about others falling for misinformation, even if they aren’t worried about falling for it themselves.” These worries, Seger said, reflect “lower trust in our infrastructure, in our society.” Similarly, 43 percent of respondents had higher trust in traditional media’s ability to use generative AI responsibly and manage deepfakes effectively than in politicians’ ability to do so.

Democracies face multiple strains including economic pressures, housing shortages, political distrust, and inconsistent access to public services. As such, generative AI could sow confusion in times of crisis or right before an election. Days before the 2023 Slovakian election, a potentially AI-created recording of one of the candidates spread through social media. Slovakia’s pre-election “media blackout” prevented fact-checking. This rule barred media outlets from publishing election-related content for several days before voting, leaving the AI-generated misinformation unchallenged.

However, technology isn’t the pivotal factor in such scenarios. “You don’t need deepfakes for that,” said Altay, noting that even in crises where extremists spread disinformation, only those predisposed to racism or violence would be motivated to believe or act. Although AI can be used, power-seekers often exploit existing tensions, as “quality isn’t the point” in spreading harmful rumors, Altay said. Ultimately, technology merely amplifies existing prejudices rather than creating them.

Additionally, complex misinformation, where institutions unintentionally spread false information, could become more common. As the barriers to conducting complex analyses lower, there may be a surge in flawed interpretations of research. A recent example covered by NPR and Vox co-founder Matt Yglesias is the widely reported spike in maternal mortality rates in the United States, which was largely attributable to changes in counting methods. People might question institutions’ competence, while members of opposite parties could seize upon such errors as evidence of deliberate deception. These are “hard to detect, but not new,” with some added risks from chatbots giving the appearance of wider acceptance, Seger explained.

Lastly, media reports about widespread AI-created misinformation could make public discourse seem more dire than it is. It’s important not to overemphasize misinformation’s role in current public discourse woes. “The reporting on the impact that generative AI was going to have on elections probably had more impact on elections than generative AI itself did,” Seger said. While “misinformation will have more to do with forming the general environment and … feeling of distrust,” people’s reactions, particularly in politics, often stem from deeper factors. Focusing solely on combating misinformation might lead to neglecting underlying issues like value differences or eroded institutional trust. Value misalignment can’t be fought by “just throwing more information at a person,” Seger said, because people’s inner values lead them to “interpret the same information differently.”

The picture isn’t entirely bleak. Exposure to factual information about the status quo can shift political views, even if it doesn’t change party affiliation. AI-powered algorithms could elevate neutral, factual information more effectively, potentially countering common misconceptions on both sides of the aisle by analyzing vast amounts of data and presenting unbiased summaries. For instance, many Americans incorrectly believe that China owns the majority of US debt or that foreign aid is over one-quarter of the US budget. (It’s less than 1 percent. To put that in perspective, the United States doesn’t even crack the top 10 list of countries giving foreign aid on a percentage basis; 22 other countries give more than America does as a percent of gross national income.) Many Americans are also unaware that the two political parties have similar demographics and share many policy preferences. A majority of Democrats and four in 10 Republicans support banning high-capacity ammunition magazines.

AI could foster mutual understanding by creating a more balanced information landscape rather than relying solely on clicks and shares. As a starting point, social platforms could at least show users content that disagrees with their world view when users choose to subscribe to it. Amplifying positive and local news could also reduce mental health risks associated with social media use.

Addressing polarization, however, requires strategies beyond just adjusting social media algorithms. Social networks, both online and offline, profoundly shape beliefs. This cultural force makes it uncomfortable to disagree, leading people to align their views even when they suspect they’re wrong.

On governance. Countries in Europe show levels of polarization similar to those in the United States, but their democracies have been less affected. Over time, European nations improved their democratic responsiveness and resilience.

The United States is more prone to electing polarizing and less representative leaders because of several factors: its winner-take-all system; party chairs who choose more extremist candidates, which in turn influences voters’ perceptions of their own party’s norms; and structural issues such as lifetime judicial appointments and indirect voting (in which voter-elected representatives elect government officials), which weighs some citizens’ votes more heavily than others’. Changes like approval or ranked-choice voting in the United States could reduce polarization by rewarding politicians who appeal to broader populations.

Anti-establishment candidates, whose rise is fueled by low public trust and who win in-group legitimacy through media rejection, thrive under the current first-past-the-post system, which requires only the most votes, not a majority (more than 50 percent). This system benefits candidates with strong, motivated voter bases, even if they don’t represent the broader population.

While the most pivotal changes are low-tech, some innovations can fuse machine learning and decision-making, like Taiwan’s use of a system called Polis, through which citizens help crowdsource the country’s laws: They use the technology to draft and elevate nuanced, bridge-building statements that reveal common ground across divided groups.

Looking forward: OpenAI’s stated goal of developing artificial intelligence models capable of doing the work of entire organizations could alter the media landscape. The impact of these AI “organizations” on public institutions and trust will likely depend on their implementation.

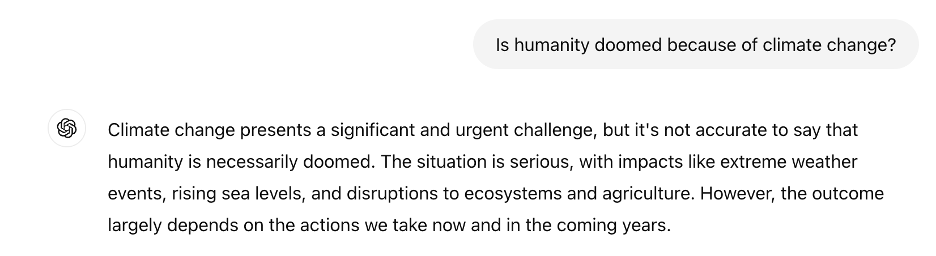

Current large language models like ChatGPT by default generate balanced, not alarmist, responses to partisan questions faster than humans, though they can generate misleading, slanted, or biased answers just as quickly.

Generative AI could help trusted public institutions maintain their role by facilitating fact-checking and creating high-quality content that resonates across party lines—as long as it can be prevented from enabling less-scrupulous institutions to appear more credible, potentially exacerbating partisan divides.

To address these concerns, several approaches are being explored. Some tactics focused on AI models call for thoughtful interventions at every step of the process, including during the creation and training of generative AI models and when these applications are accessed. Better watermarking methods and stricter requirements for political content could safeguard democratic processes, especially during critical periods like elections. Another avenue may be bolstering the labeling of fake or deceptive content across multiple platforms. Some academics have suggested that the increased cost for journalists, social media platforms, and third-party checkers to identify such content could be funded by a tax on AI companies.

Artificial intelligence will interact with our imperfect, invisible social systems, either improving or destabilizing them. It can enhance citizen input, discourse, and collaboration if used wisely. Thoughtful, proactive steps now will help harness AI’s potential to strengthen society rather than erode it.
