AI misinformation detectors can’t save us from tyranny—at least not yet

By Walter Scheirer | September 5, 2024

AI misinformation detectors can’t save us from tyranny—at least not yet

By Walter Scheirer | September 5, 2024

Online misinformation that can fuel political violence and disrupt elections is a leading problem (McQuade 2024) of our time—an issue that technologists and policy makers widely discuss, but poorly address. This has to do with the complex interplay between the scale of social media platforms, the emergence of sophisticated content editing and synthesis tools, and the very nature of human communication. Those seeking potential solutions have consequently missed the mark on integrating these elements across the technical and social dimensions of misinformation. Nonetheless, tools powered by artificial intelligence have emerged as a contender, with a rush of academic research (Horowitz 2021) and corporate products (Hsu 2023) leveraging recent breakthroughs in machine learning to attempt to detect evidence of deceit in a broad range of content.

TrueMedia.org (TrueMedia.org 2024), a non-profit organization dedicated to detecting deepfake, or artificial, videos, boasts that its web-based detector tool is 90 percent accurate. A recent product offering from start-up Sensity AI (Sensity 2024) which detects false videos, images, audio, and identities, purports to be 98 percent accurate. Intel, the semiconductor giant, has also entered this arena with its FakeCatcher (Intel 2022), an AI algorithm designed to measure what Intel calls “subtle blood flow” in people depicted in videos, which is powered by the company’s high-performance chips. (The company’s website claims “When our hearts pump blood, our veins change color. These blood flow signals are collected from all over the face and algorithms translate these signals into spatiotemporal maps. Then, using deep learning, we can instantly detect whether a video is real or fake” [Intel 2022]). Intel says it is 96 percent accurate.

While these are exciting claims, users should be cautious about such technological interventions. It is important to understand that the proposed solutions are new and largely untested by third parties in the complex information environment of the online world. (The entirety of the virtual space of the internet blends both fact and fiction from user-generated content that can be malicious, but mostly isn’t.) Developers typically only tout the strengths of their detection algorithms, not the fundamental limitations that are associated with them. By not considering the limits of today’s technology for misinformation detection, users may end up with technologies that “cry wolf” or miss salient warnings, as well as policies based on malfunctioning technology—something that is perhaps as dangerous as the misinformation itself. With these weaknesses in mind, it is important to maintain a critical perspective on current trends in AI for misinformation detection, with the aim of redirecting work in this area to more effective directions

Misinformation on the internet takes on different forms. Some of it is text-based. An example of this is traditional “fake news” (Zimdars and McLeod 2020), or false information that is reported as if it were true.

Deceitful content can also take on the form of still imagery. A prevailing concern with images is that they will be edited or synthesized to revise history (Shapiro and Mattmann 2024) in a way that will trigger action on the part of the observer.

Some misinformation can be in the form of audio. Recent scams (Bethea 2024) targeting the elderly through telephone calls that impersonate their relatives demonstrate the threat of this signal.

Multimedia, such as a deepfake (Somers 2020) video post to social media that combines both imagery and audio to simulate a real person, can also be phony. Artificial intelligence tools can examine (Verdoliva 2020) all these signals individually or together. But the more signals that are involved, the more difficult the processing becomes.

Most AI detectors rely on machine learning—the use of example training data to automatically learn patterns—to identify examples of different categories of misinformation. The patterns the detectors learn are typically simplistic: inconsistencies in the statistics of the pixels in an image, the repetitive use of certain words or phrases in text, or mismatched images and captions. Crucially, two different forms of error are associated with any misinformation detection task: false positive errors, whereby an innocent piece of content is flagged as being misinformation, and false negative errors, where content that is misinformation is ignored. With these things in mind, there are five key problems—with associated policy implications—facing technologists designing AI detectors.

The arms race nature of the problem

Any machine learning-based strategy for misinformation detection will necessarily be limited to what is reflected in the available training data. As a result, a detector will only identify known strategies for producing misinformation and fail to detect anything that is unknown, resulting in a very high false negative error rate. Malicious actors producing misinformation hold a distinct advantage in this scenario, in that they can always formulate new approaches to content creation that will not be reflected in the training data for any detection model. This reduces to a classic setting in computer security, where the defense is always playing catch-up to an offense (Schneier 2017) that continues to develop new capabilities.

It is also emblematic of what is known as the novelty problem (Boult et al. 2021) in AI, where new things must be detected, characterized, and learned by an algorithm on-the-fly. That problem is notoriously difficult to address given today’s algorithms (Geng et al. 2020)—as is the case with computer security, mitigation instead of elimination is the best possible outcome. Knowing this, one can recognize that the very high published accuracy figures for existing detectors reflect performance on known misinformation techniques through controlled laboratory testing. This type of experimentation brings into immediate question the usefulness of these approaches when deployed on the internet.

Most visual content on the internet is altered

Today’s smartphones make use of algorithms from an area of computer science known as computational photography (Carleson 2022), which promotes the use of software to augment or enhance the capabilities of camera hardware. These algorithms automatically remove noise, sharpen images, and increase resolution—often through the use of machine learning to add data to the photographs that are sampled from other images found on the web. People have raised concerns (Chayka 2022) that digital photographs are moving farther away from accurate portrayals of the scenes in the physical world towards more idealized depictions based on what engineers believe to be desirable for users. A secondary problem in this regard is the prevalence of near duplicate images that are the product of compression or recapture (through screenshots). User-shared images go through different transformations as they are automatically processed by different apps and platforms. Recent research (VidalMata 2023) has shown that all these image processing operations result in a very high false positive rate for algorithms that are designed to detect intentional image editing and synthetic imagery.

Non-realistic or obviously fake misinformation is prevalent

An often-underappreciated aspect of misinformation on the internet is that obviously fake content is the real problem (Yankoski et al. 2021), and it’s immune to any form of analysis that performs tampering or synthetic media detection. This has coincided with the rise of meme culture, where users spread messages through humorous images and videos that are heavily edited in obvious ways. Memes are effective for transmitting potentially dangerous messages because of their comic effect, which draws the observer into a new viewpoint in a familiar way, sometimes changing their beliefs. Algorithmic detectors fail in this scenario because the observer already knows that the image has been altered; warning them about it is beside the point. Political extremism (Lorenz 2022), conspiracy theories (Lorenz 2019), and vaccine hesitancy (Goodman and Carmichael 2020a) have all spread through memes. An example of the latter can be seen in Figure 1, which depicts a meme (Baker and Walsh 2024) that invokes the Holocaust, Microsoft, and microchips to represent the alleged victimization of those who chose not to get vaccinated against Covid-19. The last two items allude to the association of billionaire technologist Bill Gates with absurd Covid vaccine conspiracies (Goodman and Carmichael 2020b), and coupled with the obviously poor image editing job, are intended for comic effect. To effectively detect the presence of misinformation in a meme, an AI algorithm would have to be able to interpret multiple-levels of meaning to move beyond the surface-level joke to understand the true intent of the message. At present, no such algorithm exists.

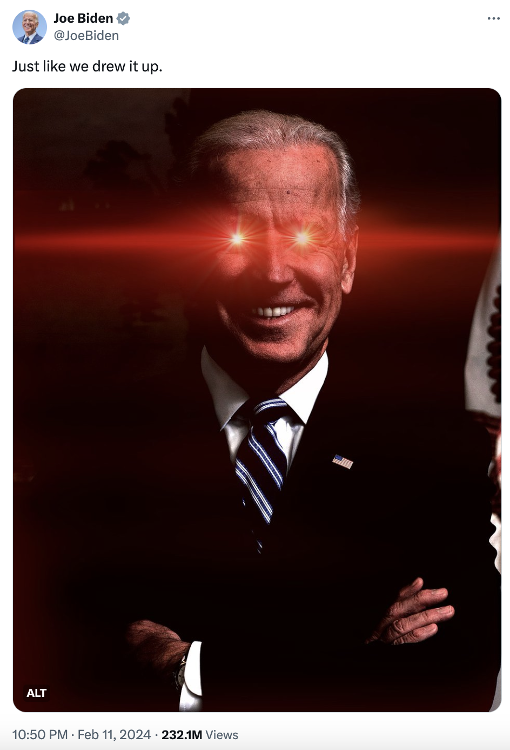

Parody and satire fuel internet humor

A related problem to the one just mentioned is the presence of parody and satire on the internet. Some of it is transgressive and looks like misinformation. For example, shortly after the 2024 Superbowl, President Joe Biden posted (Biden 2024) a far-right “Dark Brandon” (Know Your Meme 2024) meme of himself on the social media platform X and seemingly affirmed that he had taken part in a conspiracy to rig the game (Paybarah 2024) in order to influence the upcoming presidential election. (See Figure 2.) Of course, Biden’s use of this political caricature of himself was purely satirical in nature and intended to poke fun at Republican narratives criticizing the state of his campaign in an ironic way. Such content serves important political and social purposes in many parts of the globe (especially in the United States). It would be an authoritarian move for a government or corporation to flag any of it as misinformation, which would inevitably happen if it were misinterpreted. As I’ve written in A History of Fake Things on the Internet (Scheirer 2023), this dilemma is inherent in an internet where users are able to express their views freely through the use of creative software tools. There is a very large intersection between storytelling and politics, and this is nothing new in the realm of human communication; only the medium has changed.

Automatic semantic analysis (or an analysis of the meaning of content) remains a challenge for AI, and even large language models do not possess a capacity (Mitchell and Krakauer 2023) for understanding language in the same manner as people. A high false positive rate will be inevitable in what are currently the most effective approaches, which make use of simple features like keywords or iconography associated with misinformation. Today’s AI is good at perceptual analysis but doesn’t possess cognitive ability. A great deal of cognitive machinery is invoked when we try to understand the meaning of a satirical news article from a publication such as The Onion. Recent findings (Luo 2022) from the DARPA Semantic Forensics program (DARPA 2024), a major US government effort to develop semantic analysis approaches to detecting misinformation, have shown that AI is good at detecting mismatched pieces of information in various cases. But sometimes the mismatch between an image and its caption is obvious and intentional, as any reader of The New Yorker knows.

Provenance is difficult to determine

In misinformation studies, there is a persistent belief that much of the questionable content is the product of state-based actors—specifically Russia and China (Bandurski 2022)—who can conduct sophisticated operations through their intelligence services. However, this belief is not backed by solid evidence, given what is known about the prevalence of content editing on the internet, coupled with an extremely polarized electorate in many countries, including the United States. Image bulletin boards—“imageboards”— like 4chan have been the source of much of the most alarming content over the past decade, but the posting to that influential board is done in an anonymous manner, and it is extremely difficult to attribute posts to any particular source. Further complicating things is the ease with which (Griffith 2024) one posting to any social media platform can use a proxy server (an intermediate server that makes it appear that the user is on another network) and temporary mobile phone numbers through SIM swapping to obscure their origin. Note that none of these strategies is inherently bad—all are very useful when anonymity must be invoked (such as for political dissidents posting about human rights abuses in authoritarian countries). But their use does inherently complicate the determination of provenance of posted content. And today’s AI tools provide no help in this regard.

Some have suggested digital watermarking (Cox et al. 2007) as an alternative to a provenance investigation that happens after content has been posted. By adding an invisible mark to a piece of content at creation time that can be cryptographically verified later (the watermark), the assumption is that every new piece of uploaded content can be immediately traced back to its origin. Adobe’s Content Authenticity Initiative (2024) provides a comprehensive framework for tagging original content, but there’s no obligation for anyone to use it, or any other watermarking scheme. Threat actors obviously will not. Further, as already mentioned, compression and recapture are common operations on the internet, which in some cases may strip the watermark from its associated content.

While AI is not a silver bullet in the fight against misinformation, the five just-discussed key problems should not dissuade experts from using artificial intelligence to address misinformation in any capacity. A frank dialogue on what can realistically be achieved by using AI is needed—genuinely dangerous social media campaigns that pose a threat to democratic societies exist on the internet and must be identified. Indeed, AI has strengths that can be brought to bear for this purpose. It is excellent at pattern recognition, especially in cases where there is too much data for human analysts to sift through. AI can thus be deployed to watch for trends (Theisen et al. 2021) in content in a way that doesn’t require interpretation, like the identification of newly emerging meme genres that are swiftly gaining an audience. A person will still need to be in the loop to interpret the messages such memes carry, but this makes the analysis more tractable by automatically finding out what is popular and potentially influencing an audience.

Most importantly, policy makers need to be aware of the limits to technological interventions for misinformation detection. Some of these limits, like the presence of parody and satire in political discourse, exist external to technology and touch on some of the most fundamentally good aspects of human communication. Confusing them with malicious intent is not healthy for a functioning democracy. People should think twice when developing and evaluating AI algorithms that might lead to the control of speech in some way, lest they unintentionally lapse into technologically mediated tyranny.

Together, we make the world safer.

The Bulletin elevates expert voices above the noise. But as an independent nonprofit organization, our operations depend on the support of readers like you. Help us continue to deliver quality journalism that holds leaders accountable. Your support of our work at any level is important. In return, we promise our coverage will be understandable, influential, vigilant, solution-oriented, and fair-minded. Together we can make a difference.

Keywords: 2024 election, AI, Internet, artificial intelligence, deepfakes, machine learning, misinformation

Topics: Special Topics