The campaign volunteer who used AI to help swing Pakistan’s elections: Interview with Jibran Ilyas

By Thomas Gaulkin | September 5, 2024

On December 17, 2023, a political event like no other caught the world by surprise. In the final minutes of a four-hour-long virtual rally ahead of Pakistan’s national elections, millions of supporters of the political party Pakistan Tehreek-e-Insaf (“Pakistan Movement for Justice,” commonly known as “PTI”) waited expectantly for a message from the party’s founder, former Pakistan prime minister Imran Khan.

But there was a surprise: The Imran Khan who addressed them was not actually Imran Khan. It was an AI clone of his voice, generated by a $99 software app, coordinated by a PTI volunteer sitting thousands of miles away in Chicago.

Khan, a hall-of-fame cricket player turned politician, served as Pakistan’s prime minister from August 2018 until his April 2022 ouster from parliament and has been jailed since August 2023 on various controversial charges. Despite restrictions imposed on Khan and his party’s activities, both remain immensely popular throughout Pakistan and among Pakistani citizens living abroad. To reach those supporters as the February 8 election approached, PTI’s social media team organized the rally to get around the obstructions. Led by the party’s social media lead, Jibran Ilyas, the volunteer team got Khan’s approval to train an AI neural network on his voice and produce the simulated speech.

The December 17, 2023 virtual rally for Pakistan’s PTI party culminated with an AI-generated speech by former prime minister Imran Khan.

International media took note of the novel event, which happened shortly after Sam Altman’s shock dismissal from OpenAI—creator of ChatGPT—and amid rising concern about the rapid and unchecked explosion of AI technologies. Over the next few months, the PTI team posted four more videos featuring the AI-generated voice of Imran Khan, including a “victory speech” released the day after the election. Each time, the use of AI was deliberately emphasized in the videos, not concealed.

Ilyas calls his team’s approach to AI a “noble” effort to resist political repression, and believes that the energy from the artificial speeches, along with the global attention they received, helped galvanize a resurgence of PTI that changed the outcome of the election. Overcoming a ban on the party’s inclusion on the ballot and other obstacles—including a deepfake video of Khan calling on his supporters to boycott the vote—PTI-backed candidates won more parliamentary seats than any other party.

But many experts fear that the use of AI to bolster (or damage) political campaigns has become a potential threat to democratic elections. Deepfakes have emerged prominently during the 2024 US presidential election: robocalls using AI impersonated Joe Biden to dissuade New Hampshire voters from turning out for their state's January primary. And within days of Kamala Harris becoming Joe Biden's replacement as the Democratic candidate in the US presidential election, deepfakes of her emerged on social media sites like TikTok and X (formerly Twitter). Other AI-powered tools, like social media bots, have also helped make foreign interference in elections more insidious.

After the February election in Pakistan, Freedom House—a US non-profit that monitors and advocates for democracy globally—noted that “PTI’s use of AI has been cited in the media as an example of how a political party equipped with a strong digital organizing infrastructure can leverage technology to counter state suppression and reach voter audiences. However, it also demonstrates the ease with which generative AI can create hyperrealistic imagery and audio, blurring the line between what is real and what is not.”

Ilyas was born in Pakistan but moved to the Chicago area in his teens. Today he’s a cybersecurity expert investigating data breaches for Google and teaches digital forensics at Northwestern University. He began volunteering for Imran Khan’s party in 2011, and in 2022 became its social media lead, coordinating the party’s digital strategy from his home in Chicago.

The Bulletin’s multimedia editor, Thomas Gaulkin, spoke with Ilyas in June to learn more about the process of faking a voice to win elections, and why Ilyas remains so sanguine about deploying such a disruptive technology despite the potential for its misuse.

(This interview has been edited and condensed for clarity and brevity.)

Thomas Gaulkin: Let me start by getting this straight: You trained artificial intelligence to reproduce the voice of the former prime minister of Pakistan so he could speak to his supporters from the confines of his jail cell? And then you told those same supporters that it’s all an AI-generated imitation? Why?

Jibran Ilyas: It was kind of a no-brainer for us. We were not allowed to hold political rallies on the ground. So we thought to ourselves, okay, well, if they're not gonna let us do anything on the ground, we can hold a virtual rally. That saves us a lot of cost. And it gives us more attendance, right? A political rally in a city, we'd have a few thousand people; we ended up with some 10 to 14 million viewers from our first virtual rally.[1]

But we’ve never had an event without a speech by Imran Khan. And because that’s usually the crescendo of a PTI political rally, there was always this question: What are we going to do at the end of a virtual rally? So we thought to ourselves, well, we can show his old speech, maybe we’ll just play some graphics of his latest statements? Folks were like, yeah, probably won’t have that flavor… But I was familiar with the AI world, and we thought, we have this tool, we can actually use it for good—somebody who is illegally incarcerated, we can bring his voice to the public.

I’m in cybersecurity. I’ve seen impersonations of a CEO’s voice telling an employee, “Hey, go make this payment” to an obviously unauthorized account, using AI, a voice clone. I had seen the deepfakes of the Ukrainian president, for example. So I had seen the bad of AI. What we wanted to do was use AI for the good, for a noble purpose, for somebody who is incarcerated, for somebody whose voice has been suppressed. AI is great technology, but it can be used both ways. We saw a lot of use of it for malicious purposes; we wanted to use AI for something positive.

Gaulkin: Was Khan familiar with AI, and how it would work with your plan for the online event?

Ilyas: I think he wasn’t. When we introduced the concept of a virtual rally, to be honest, Imran Khan did not understand what we were saying. He was like: “So, you’re just gonna do some interviews on Zoom, right, that’s it?” We were like: “No, no, no, it’s a little bit more than that.”

Because what we thought was hey, in Pakistan, people are not allowed to wave the PTI flag, or go out with an Imran Khan T-shirt. But overseas, every country has a PTI chapter. We asked them to hold the rallies in their towns and wear the PTI T-shirts, wave the PTI flags, and raise a lot of slogans, just like we do in the physical political rallies. And we told Imran Khan that it's going to be like that, it's going to be more than Zoom interviews, it's going to be very spirited. That's when Imran Khan got excited. He said: "Oh, actually, I want to say something in that event. However, how are you going to execute it?" We got really, really excited, and were like, okay, AI is the way we're gonna go.

But we didn’t tell a lot of people—to be honest, because we didn’t want this to be shot down. So for most of the people it was a surprise, including PTI leadership. The surprise element was pretty important.

Gaulkin: Imran Khan has been in prison since August 2023, and banned from speaking publicly. How did he communicate what he wanted to say to you? Did he provide the text?

Ilyas: Good question. The way we communicate with him while he's in prison is through the lawyers. And basically, lawyers get us shorthand write-ups. It's not full sentences that Imran Khan wanted to speak; these are topics and short phrases. So we took that and built the text first, based on those messages, and then we converted that into his AI voice.

But it's always like that: we know Imran Khan's lingo, for lack of a better word. If he wants some Facebook message posted, he gives the gist of it and then we create the actual words, but we never change the gist. Maybe we'd use a different word than he did, but the gist was still the same. We didn't add anything or subtract anything.

Gaulkin: Describe the process of creating the AI audio for the speech. What does it take to clone the voice of a former head of state?

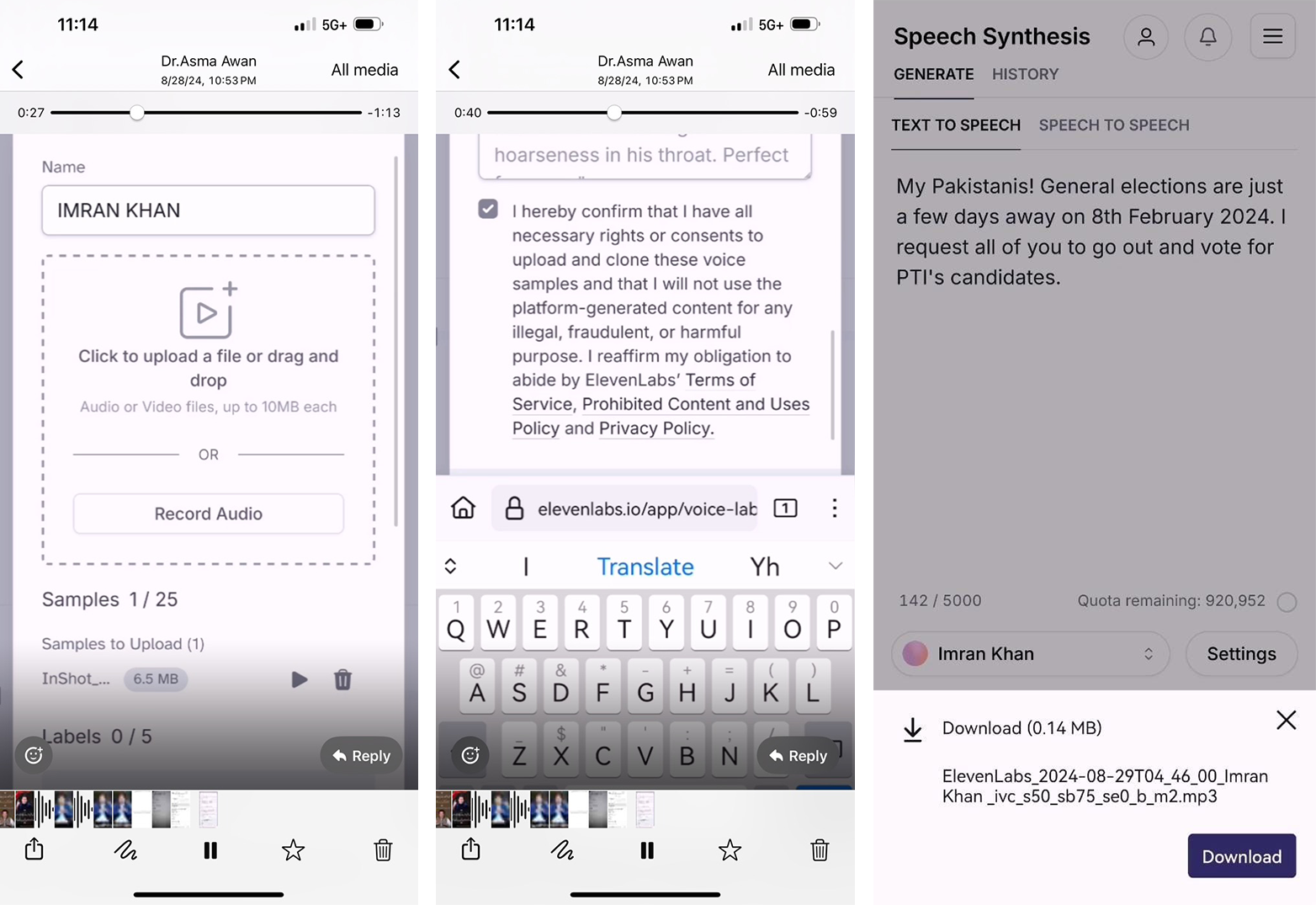

Ilyas: So first and foremost, you have to educate the software by uploading samples of Imran Khan's real voice. And those samples need to be distinct enough that they cover most of the words in the speech that we wrote from his shorthand notes. So we were trying for as many samples, as many variations, as possible. We had to have a forceful tone; we had to have a mellow tone. Basically, how many speeches of Imran Khan could we find and upload that would cover most of the tones, and most of the words that we were going to actually insert for the AI voice? That effort was huge, because we had to go back into our archives and download from platforms like YouTube, then convert to an audio format, then upload [to the software]. So all that took some time.

Gaulkin: What software did you use?

Ilyas: We’re using ElevenLabs. It has a paid version. I think we paid $9 first, but then we went for the $99 version.

Gaulkin: So the next step was just to take the text that you want read in his voice, and then have that software dictate it?

Ilyas: Yeah. After we feed all the Imran Khan voice samples, as many distinct ones as possible, then we feed the text, and then the first draft comes of the AI version of Imran Khan’s voice audio. And then the real work starts after that. If the voice decibels are too low or the tone is not matching, then we correct that. Like, if the end of a line needed more force, then we had to break up those words or sentences into chunks and then create new audio chunks and merge all the chunks together to make one cohesive speech.

Same with the pronunciation. We had to choose better words that went with the flow. English would have been much easier. If you check our samples, our English AI voice is so close to his real voice. For the Urdu one, we had to compromise a little because the software wasn't pronouncing the Urdu words with correct diction. We tried to change some words into English, but that wasn't working out either; it seemed too artificial. So we basically figured out how to break up the words so that they are pronounced correctly in Urdu. There was a lot of back and forth on those little things that we tried to perfect for the final version.

We were so tired. The last 36 hours before the virtual rally, we did not sleep, we had lots of iterations of his AI speech, maybe like 50 or 60? I was thinking that even if it only matches 60 or 65 percent with Imran Khan’s real voice, people will forgive it, because they miss him so much! But we still had hours left to improve on that.

Gaulkin: So the virtual rally took place on December 17, with the AI Imran Khan making its first appearance. What was the immediate reaction?

Ilyas: It became global news within hours. The general public response, I mean, it's hard to even put it into words. Imran Khan has been in public life for 50 years; be it cricket, philanthropy, or politics, he's been there. So after so many days without him speaking to the public, when people heard that 65 or 70 percent match to his voice, they forgot all that. It was very emotional.

We had said that there would be a surprise at the end of the rally. And everyone thought it would maybe be just one line from Skipper (we call him Skipper because he was a cricket captain), one line from him for us. But when we played this four-minute AI speech, I could see the comments; we do sentiment analysis on the words people are using on Twitter, Instagram, Facebook, TikTok. And it was so positive yet emotional. Everybody was in a spell, if you will.

One thing you have to understand: we had a lot of good visuals going with the audio about whatever topic he was talking about. So in their subconscious, they were probably thinking that Imran Khan is speaking, it's not AI. After the first few lines, I think people's imagination took over. And we didn't get any flak for that 30 percent miss in the AI.

We expected it would be good. But we didn't expect it to kind of dominate tech industry news and, you know, world politics news. To be honest, I hadn't seen any of that because we hadn't slept in 36 hours and we were just so dead tired. I was actually leading the jalsa [an Urdu word for "gathering," in this case a virtual one] as an emcee as well. So I woke up 12 hours later, and I was like, what happened? Why is The Guardian talking about me? Why is CNN? Forbes? There was so much global news. We were just taken aback by all this positive buzz about this AI.

Gaulkin: You used AI again a few more times before and after the election to deliver statements and speeches in the voice of Imran Khan. What would you say the political impact has been?

Ilyas: What happened (and this next part is very, very important, because our critics claimed that we wouldn't even find candidates for the next election) is that after this virtual event happened in mid-December, we not only had candidates, but we had the highest number of candidates who filed nomination papers [to run as independents to get around the ban on PTI candidates]. So this virtual event kind of gave a new lease of life to PTI. They gave Imran Khan years of prison time in just the last week before the election; they wanted to send the message that Imran Khan is out, there's no way he can come back. But because of the enthusiasm these AI speeches of Imran Khan kept generating, the people didn't give up. They went out to vote.

Gaulkin: On the eve of the vote, a deepfake video circulated of Imran Khan calling for a boycott of the election. What was its impact? And how do you square that use of AI with the “noble purpose” you described?

Ilyas: I am aware of that video surfacing. I think where they miscalculated was that people know Imran Khan: his whole struggle since he was ousted was to have free and fair elections. He would never boycott the elections, because all of his struggle for the last two years would have gone to waste. You know, he fights until the last ball, just like he did in his cricketing days. So when this video came out just a day before the vote, people smelled something bad. They didn't believe it. They came right to our official platforms, and we clarified things very quickly. And because we're all over the world, and we were able to debunk it from our official accounts, it became a non-story.

Gaulkin: What keeps less noble uses from becoming more likely?

Ilyas: I would say there’s a huge cost when somebody does a fake version of it. I think our opponents were just so desperate that they were trying like things that were not even plausible. So that didn’t work out for them. And then some of the journalists that kind of sympathized with the other side, they posted that and their credibility went kaput, in a matter of minutes. So I think, you know, the plausibility—the credibility—that counts a lot. Like if it was semi, if it was well done, it could have had an impact. It looked like such a desperate attempt on the last day, on the eve of elections, that it didn’t work out that well.

And, you know, we were asked by the PTI candidates if Imran Khan could speak about each of their 900 constituencies to get more votes. They wanted to do robocalls with the AI voice we created. And we said no, we want to preserve the authenticity of the AI voice. In fact, people kept criticizing us, especially around the time that the PTI party symbol was blocked from appearing on the ballots: "Hey, you have this AI tool, why don't you do more of Imran Khan's voice?" But our point was, "Look, we can't overdo AI, because then it would be misused." We've posted just five AI appearances from December 2023 to now [in June]. All five came at very critical junctures. And the election eve deepfake proved us right. People were like, "We know exactly where they document their official use of AI. So we're just gonna go there to verify if the boycott news is real or not." So that strategy of not misusing or overdoing it really paid off.

Gaulkin: Were there any moments before or after the virtual rally when you considered not being transparent about the fact that you were using AI?

Ilyas: No, no, that was always part of the strategy. I’m in cybersecurity. And I’m a technologist. I care about ethics a lot. I care about being transparent a lot. In fact, some of the people were saying that it actually was Imran Khan speaking from jail—we corrected them. We were on the other side, trying to convince people: “Hey, look, look at the first slide. Look at the bottom ticker. It says very clearly that this is an AI version of Imran Khan’s voice, and this is the third version of his authorized voice, and it was released on this date.” So we doubled down on the awareness, and everybody kept repeating the message that this was AI, and it kind of went viral. And then the global media coverage also helped us in that regard.

Gaulkin: Beyond transparency, what other responsibilities do you have or do others have who might use this kind of tool in political settings?

Ilyas: I think we had a double challenge. We had to be really careful not to misuse his trust in AI. We were very careful and never added anything that, you know, he wouldn't say. I mean, the power was there, the opportunity was there. But we never misused it. We were very, very responsible.

You know, we’re all volunteers. We don’t get a dime. In fact, we pay for the software that we use. He knows that we’re not doing it for self-interest. The people who use it for malicious purposes, that’s a whole different world compared to how we used it.

My own ethos, or philosophical belief on this malicious use, is that it gets revealed sooner or later, right. And in elections, politics, credibility matters the most. If you are willing to risk that, yeah, you might win a battle or two. But if you lose your credibility, you’re done and dusted forever.

Gaulkin: Any thoughts on how government regulation of AI might help?

Ilyas: I don’t like the idea of restricting technology. I’m not the kind of guy who recommends censorship or regulations because, as a technologist, I know there are so many ways to get around it. With AI, the bad use can fool some people some of the time, but you can’t fool all the people all the time—kind of going Lincoln on you, sorry—but that’s the truth of it. I think people see through it. You have to give people a lot of credit—maybe times are changing on social media! Instead of believe first and doubt later, I think it’s the other way around these days.

Gaulkin: I saw that Donald Trump recently claimed in an interview that one of his speeches was written using an AI tool. But he also said he was worried about how deepfakes could be used to trick a state into launching nuclear weapons. Given your experience, do you have any advice for Trump, or for anyone else in the political world who might consider using artificial intelligence?

Ilyas: Absolutely. My thought, in politics more than anything, is that you win hearts and minds. If you don't connect with a voter on a personal level, you're not convincing him to go out and stand in line to vote, right? You could be flawed; people forgive that. What people don't forget is deception. So I would advise candidates not to write their speeches with ChatGPT. My recommendation would be to connect with voters on a human level, and you do that when you're being your true self, not smarter than what you are through ChatGPT, or Gemini, or Copilot, or any other AI there is. Because, you know, at the end of the day, what convinces people is when they really connect, when you win their hearts and minds.

A lot of journalists from different countries really wanted to understand how we did it and why, I think, it mattered so much for us. It was the context; the context was the most important thing. Had Imran Khan been out of prison, why would we need AI? He would have just actually spoken. So the whole point was that the establishment of the country is silencing him. The media can't even say his name. They have to say "founder of PTI"; they can't say "Imran Khan." His picture can't be shown on mainstream media. So all that fascism added to the context: He's not allowed on mainstream media. What can we do on digital that makes up for that?

I mean, if you think about it, just purchasing the software and spending some 36 hours, that's very, very insignificant. It's the context, the environment, and what the country was feeling on hearing a voice it really, really wanted to listen to: that is the main part of it. So, as I told those reporters from other countries, without that context you kind of can't make that big of an impact [with AI in political campaigns], you know?

Gaulkin: You don’t really seem that worried about AI in elections. Is that a fair statement?

Ilyas: My thought is that, you know, disinformation comes in many flavors, right? AI is just a part of that disinformation, right? You could say it's a little more powerful, because it's new and people are still trying to grasp it. But AI is just another tool in the arsenal.

I mean, I think AI in isolation will not be extremely dangerous. People will see through it. But if there's a strategy, if there are so many things going on, and AI basically adds to the plausibility of some disinformation campaign, then it's going to be effective. But then that happens in every election, right? There needs to be a dedicated cell in a political party to fight disinformation. And fighting AI has to be part of that. But just by itself? I think people are seeing through it.

But can I ask you a curious question? You asked me this question, that I don't seem too worried about AI. What bothers you about it? Like, what am I missing there? Because, you know, our context is really different. We have a very powerful social media presence; we can debunk things within minutes, so my worry is less. What worries you about this AI?

Gaulkin: From the Bulletin’s perspective, we’re looking at all sorts of global threats, starting with nuclear weapons, and now we’re talking about other disruptive technologies, global climate change, pandemics, and things like that. Everything that can have a global impact on human civilization. And so, from that perspective, our concern is that the information ecosystem that societies now depend on is being threatened by disinformation. And many of the experts that we talk to who are worried about it don’t think of AI as some sort of magical thing that’s causing that by itself, but rather that it really accelerates the spreading of harmful information that’s already underway.

Ilyas: It does, it does.

Gaulkin: So the fear is that it becomes sort of unmanageable.

Ilyas: Okay. Got it. Thank you for the insight, I appreciate it.

Gaulkin: You don’t share that concern?

Ilyas: It’s just that I’m not very close to that world. On an even level playing field, I could see it being a problem, let’s say, if we were just up against a political opponent, not up against suppression.

But we’re in a world where Imran Khan enjoys so much popular support, that anything now that’s against him already faces a lot of skepticism first—everything that they’re trying to do, like deep fakes or fake audios that are meant to malign him, it’s not working. It’s the definition of madness, right? They’ve been doing it for two years, and nothing has worked for them. So, I’m in a different world, a different context. And that’s why I probably don’t share the same level of threat that you’re seeing in the world.

Editor’s note: Since this interview, a UN panel of experts found that Imran Khan’s detention “had no legal basis and appears to have been intended to disqualify him from running for political office.” Despite the government’s ongoing attempts to limit or ban PTI activities, Pakistan’s Supreme Court formally reinstated the party in parliament on July 12. PTI’s YouTube channel posted a sixth video featuring the AI clone of Imran Khan’ voice delivering a message for Pakistan’s August 14 independence day.

Endnotes

[1] The Bulletin could not independently verify the total number of viewers of the PTI virtual rally. Reports from Reuters, CNN, and other media indicated at least 1.4 million people streamed the rally live on the PTI’s YouTube channel, and “tens of thousands” more watched via other social media platforms. As of this writing, there are about 2.2 million views combined of the rally livestreams shared on the official YouTube channels of the PTI and Imran Khan.
