Historically, nuclear reactions have mostly been associated with weaponry. In his 1953 “Atoms for Peace” speech, President Dwight Eisenhower pledged to change that and make nuclear technology available for the benefit of mankind. Fission research was unveiled at a 1955 conference in Geneva, but governments kept fusion research largely secret for another three years. In January 1958, Britain revealed its ZETA reactor, which researchers believed had made a major breakthrough toward practical fusion energy. The breakthrough turned out to be illusory, but fusion was now public property, and the US, UK, and Soviet governments completely declassified the field at another Geneva conference later that year.

If commercial fusion power plants ever prove practical, they could offer an attractive alternative to fission reactors, which raise concerns about safety, radioactive waste, and nuclear proliferation. Robert J. Goldston, a former director of the US Department of Energy’s Princeton Plasma Physics Laboratory, is a steadfast proponent of fusion as a commercial power source.

“Fusion can come on line later in the century, as electric power needs double between 2050 and 2100, and as the scale of electricity production puts strong pressure on the issues for other energy sources,” Goldston wrote. “What sets the timescale for fusion development? Until recently, the answer has been the science. The very hot gas, called plasma, that supports fusion is tricky, and it has taken time for scientists to understand its behavior. In that time we have made immense strides.”

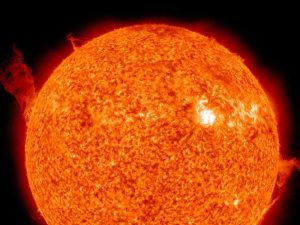

But attempts to build a fusion reactor that produces more power than it consumes have continued for decades without coming anywhere near commercial success. Splitting an atom to produce energy is, relatively speaking, straightforward; fusing two hydrogen nuclei to create helium isotopes is a “grand scientific challenge,” research physicist and fusion expert Daniel Jassby wrote in 2017. The sun sustains fusion continuously, burning ordinary hydrogen at enormous densities and temperatures. But to replicate that process on Earth, where we lack the intense pressure created by gravity in the sun’s core, we would need a temperature of at least 100 million degrees Celsius, about six times hotter than the sun’s core. In experiments to date, the energy input required to produce the temperatures and pressures that enable significant fusion of hydrogen isotopes has far exceeded the energy generated.

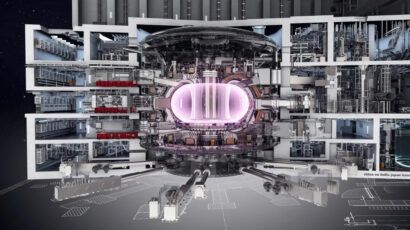

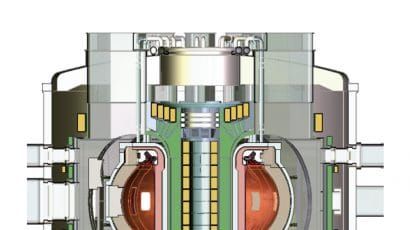

But research continues. Collaborative, multinational projects in this area include the International Thermonuclear Experimental Reactor (ITER), a joint fusion experiment in France that broke ground for its first support structures in 2010. The first experiments on its fusion machine, or tokamak, are expected to begin in 2025.

ITER’s impact is still in question, Jassby wrote in 2018. “If successful, ITER may allow physicists to study long-lived, high-temperature fusioning plasmas. But viewed as a prototypical energy producer, ITER will be, manifestly, a havoc-wreaking neutron source fueled by tritium produced in fission reactors, powered by hundreds of megawatts of electricity from the regional electric grid, and demanding unprecedented cooling water resources. Neutron damage will be intensified while the other characteristics will endure in any subsequent fusion reactor that attempts to generate enough electricity to exceed all the energy sinks identified herein. When confronted by this reality, even the most starry-eyed energy planners may abandon fusion.”

The Bulletin of the Atomic Scientists publishes stories about nuclear risk, climate change, and disruptive technologies. The Bulletin is also the nonprofit behind the iconic Doomsday Clock.