AI can accelerate scientific advance, but the real bottlenecks to progress are cultural and institutional

By Abi Olvera | April 29, 2025

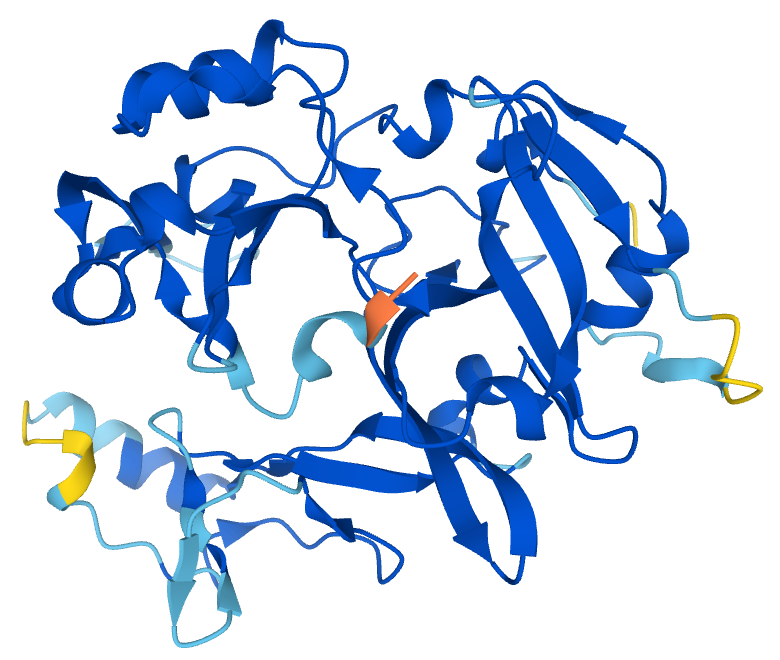

DeepMind’s AlphaFold made waves by predicting how proteins fold, a problem that scientists had struggled with for years, by using data patterns. Image: CSBIOPASSION, CC BY-SA 4.0

In 2024, Joseph Coates was preparing for death at age 37. His medical team had informed him that his rare blood disorder—which leads to kidney failure, numb limbs, and an enlarged heart—was untreatable. Then, a doctor whom Coates and his girlfriend had met a year earlier at a rare disease summit saved his life, thanks to an artificial intelligence model that suggested an unconventional drug combination.

The doctor, David Fajgenbaum, and his team at the University of Pennsylvania used a model that sifts through thousands of existing medications to find unexpected treatments for rare diseases, coming up with a combination of chemotherapy, steroids, and immunotherapy for Coates. Drug repurposing using artificial intelligence offers hope to patients who have diseases that affect relatively few patients—and that pharmaceutical companies therefore see as unprofitable research targets.

Stories like Coates’ fuel headlines that make bold claims about artificial intelligence: AI will cure cancer, reduce climate change risks, and unlock the secrets of the brain. Tech companies promise a future shaped by machine-driven discoveries, and machine intelligence can certainly help in some aspects of science.

But the biggest blocks to accelerating the pace of scientific advance may not be technical at all. From grant committees that favor incremental, narrowly focused research over novel or interdisciplinary work, to academic systems that reward individuals rather than teams, to laboratories that are ill-equipped for automation, the challenges of advancing science lie in the funding, structuring, and guidance of scientific work. Artificial intelligence tools can help speed some important research, but transforming the pace at which science progresses will require addressing deep cultural and institutional barriers, too.

Science and AI. At its core, science asks questions, tests ideas, and builds knowledge. AI can help with portions of that process, but not all of it. For example, DeepMind’s AlphaFold made waves several years ago by predicting how proteins fold. This is crucial because a protein’s shape determines its function—and misfolded proteins are linked to diseases like Alzheimer’s and cancer. Predicting protein folding is a problem that scientists had struggled with for years. AlphaFold solved it using data patterns, but the model didn’t reveal the underlying rules of folding. AI biodesign tools of this sort act like microscopes: They help researchers see better. But scientists have to test AlphaFold’s predictions—similar to the way in which an AI model that reviews drugs can only suggest, not test, which drugs might help a patient with a rare disease.

Artificial intelligence models can be used in other parts of the science research ecosystem, too. Large language model-powered apps like ChatGPT, for instance, can read, summarize, find connections, and write.

Researchers spend nearly 45 percent of their time writing grants instead of conducting actual research. AI could speed up or replace the idea generation, hypothesis formation, and theoretical parts of science, such as pulling together various strands of research in literature reviews or grant proposals, says Matt Esche, Metascience Fellow at the Institute for Progress. AI assistance could also be used as a tool to free up researcher time and help prepare proposals.

There is a flip side to this sort of automation, however. If AI tools increase the number of research proposals but don’t result in more focused proposals, they risk increasing reviewer workloads—much like how “terms and conditions” ballooned in length once digital files replaced paper. “Grant proposals could spiral out of control with more applications and more verbose, less refined proposals,” Esche says. To avoid proposal bloat, AI tools need to be used thoughtfully with the goal of streamlining workload.

Some of the biggest breakthroughs in science happen when people mix ideas from different fields. And AI tools can help decode technical aspects of one field for scientists in different fields.

For instance, vaccines came from combining research in biology and animal studies. mRNA vaccines pulled from “research on HIV, cancer immunotherapy, and RNA biology,” Esche says. But research proposal reviewers tend to understand one field very well, not two or three; cross-field projects have a hard time finding consensus, Esche notes. AI tools could help make the details and import of cross-discipline research more understandable for research reviewers.

AI could improve funding decisions. Although the United States has led the world in basic scientific research, research productivity has been declining for decades. To explain the decline, some point to the structures of the grant review committees: Because of the consensus-driven review process, grants that focus on incremental research do better than those proposing novel research. Analysis of reviewers showed a clear bias against interdisciplinary research or previously unfunded researchers.

Sometimes, reviewers reject a research proposal not because the idea is truly risky, but simply because it’s outside their area of expertise, and they don’t fully understand it, “confusing a knowledge gap with a risk gap,” Esche says. AI could act as a translator to explain unfamiliar terms, and it might be used to help categorize proposals by their risk profile (like “further frontier” for novel but uncertain projects, or “high risk high reward” for bold projects with potential for major impact if successful). This categorization could help reviewers balance funding portfolios between safe bets and transformative longshots. “Experimenting with AI tools and building the institutional scaffolding to do so lets science agencies test where these tools actually help improve and speed up science funding,” he adds.

Josh New, director of policy at SeedAI, a nonprofit that is leading an initiative to accelerate science, notes that a more comprehensive understanding of research (for instance, via an AI-driven “scientific copilot” that stays up to date on the millions of research papers published each year) could counter instances of groupthink in science funding and help funders avoid defaulting to dominant theories while ignoring dissenting (but promising) views. The Alzheimer’s research field doubled down for years on a theory that focused on the buildup of “amyloid” proteins and plaque in the brain; that avenue of research has so far produced little progress toward useful Alzheimer’s therapies, while alternatives languished unfunded. An AI scientific copilot might not have stopped the interpersonal power structures that led to the Alzheimer’s research problem, but it could have surfaced the neglected thread of inquiry into the causes of Alzheimer’s disease sooner.

Even if the process for reviewing research is improved, validating and testing ideas through experiments in laboratories face a daunting array of bottlenecks. Most labs aren’t structured well for automation, New explains. Equipment is outdated, fragmented, and hard to network. Procurement rules are vague and often incompatible. Academic and government labs lack the funds to upgrade, even as private labs already appear to run automated experiments.

But the biggest challenges to large advances in science aren’t technical; AI and upgraded equipment can’t solve them on their own. The most difficult challenges are cultural. “We have to decide to prioritize [big ambitious science] as a country; AI can’t solve political will,” New says.

The Human Genome Project made history because it forced collaboration across many scientists in several fields. It showed that large teams, not solo geniuses, are often the key to breakthrough progress. But science culture still rewards individuals, not teams. Grants go to known names. Papers list one first author. Yet most discoveries come from interdisciplinary teams solving problems together. Changing such a system requires changes in policy. How society chooses to fund, reward, and attribute credit for discoveries is a matter of human decision-making and can be changed with political will.

Human-machine relationship. Together, artificial and human intelligences can enhance scientific research. The human element becomes particularly relevant, for example, when considering AI’s role in literature reviews. AI apps can process enormous volumes of research papers, Lisa Messeri, an anthropologist at Yale University, says, but they lack the capacity for serendipitous discovery that can happen when scientists read with personal curiosity. An algorithm might efficiently summarize known patterns but miss the unexpected connections that drive breakthrough innovation.

This was precisely the kind of serendipity that led David Fajgenbaum—the University of Pennsylvania doctor who helped save Joseph Coates from a rare blood disorder—to begin identifying treatments that repurpose existing drugs for use in battling under-researched diseases. During medical school, Fajgenbaum discovered that a transplant-rejection medication could treat his own lymph node disorder—a discovery he made by spending weeks reading medical literature and self-experimenting. AI can now accelerate such a process, but not entirely replace its human component.

“There’s an illusion of objectivity that comes with AI systems,” Messeri explains. Many proponents of AI suggest it is more neutral than human researchers, but this perspective misunderstands science. Scientific inquiry has never been truly neutral; it reflects the priorities, questions, and biases of those conducting it. Rather than pursuing the Enlightenment ideal of perfectly unbiased knowledge, science advances most effectively when researchers acknowledge their human perspectives and limitations. AI could exacerbate the inability “to push the bounds of science precisely because of what it’s trained on, existing work,” Messeri says. AI can give false confidence that we are canvassing the whole territory, “but really what’s happening is that we are narrowing to what AI is good at,” she adds.

The most effective implementations of artificial intelligence in science research may be those that automate routine tasks while preserving space for human intuition and exploration. But such changes could come at a cost: An MIT study suggests that scientists’ job satisfaction could plummet in more automated lab settings as their work shifts from hands-on experimentation to more repetitive system-monitoring roles, New explains. Still, if used properly, automation in the lab can free researchers to focus on the creative work that interests them.

Large language models and specialized AI tools for biodesign already accelerate routine scientific tasks. AI models with agentic or autonomous capabilities—like OpenAI’s Operator, which can handle some multi-step tasks through a virtual browser—could speed research even more. But their impact depends on how institutions absorb and integrate them. Core bottlenecks remain: validating experiments at scale; organizing large collaborative projects across scientific sectors; and building the institutional scaffolding for bold, high-risk research. Without structural reform, even transformative tools risk being trapped in systems optimized for incrementalism.

Keywords: AI, artificial intelligence, protein folding, research, science

Topics: Artificial Intelligence, Disruptive Technologies