The layered, Swiss cheese model for mitigating online misinformation

By Leticia Bode, Emily Vraga | May 13, 2021

COVID-19 has threatened the world with the worst pandemic in a century (Steenhuysen 2021), resulting in more than 100 million cases and more than 2 million deaths worldwide (WHO 2021). And despite the spectacular scientific achievement of developing multiple safe and effective vaccines in record time (Petri 2020), the world is not out of the woods yet. Accompanying the pandemic itself is what the World Health Organization has dubbed an “infodemic”—an overwhelming surplus of both accurate information and misinformation (WHO 2020).

In general, most information circulating online is accurate. One study, for example, found that only about 1 percent of the links about COVID-19 that a sample of voters shared on Twitter were to “fake news” sites (Lazer et al. 2020). However, several common myths have persisted during the so-called “infodemic”—for example, the conspiracy theory that researchers created the virus in a Wuhan laboratory (Fichera 2020), or that 5G cell phone towers are responsible for its spread (Brown 2020). Newer myths relate to vaccine development, like the one about COVID-19 vaccines being made from fetal tissue (Reuters 2020).

For a variety of methodological reasons, it’s hard to say how many people believe the misinformation they see online and form what social scientists call misperceptions. People report varying levels of belief in certain prominent myths. As of August 2020, about 22 percent of people thought that the virus was created in a Chinese lab and 7 percent of people thought the flu vaccine could increase the chance of getting COVID-19 (Baum et al. 2020). Misperceptions matter: Belief in conspiracy theories related to the virus is associated with people being less willing to get vaccinated (Baum et al. 2020). And from mask wearing to vaccination, COVID-19 public health measures are about protecting not just the individual, but also others in society; one person’s decision to forgo a vaccine is a risk to everyone else. There’s no silver bullet to countering the online misinformation that can lead to these sorts of consequential misperceptions, but the good news is that interventions like correcting false information can work.

Information overload.

Social media platforms are now a major way people get news (Pew 2019b), but it is often the most sensational or emotional content that people engage with most (Marwick 2018). Misinformation is frequently just that sort of novel and emotional content, tailor-made for virality, and research has shown it can spread faster than truthful content (Vosoughi, Roy, and Aral 2018). While platforms are making significant progress in moderating the content pushed out by billions of social media users, even with very high accuracy of automated content evaluation, misinformation will still circulate (Bode 2020). The problem, quite simply, is one of scale.

An infodemic is problematic not just because of the presence of misinformation, but because of the abundance of information in general. People can have trouble sifting through this information and distinguishing good from bad. Compounding matters is that people are hugely and understandably interested in the pandemic—57 percent of people were paying close attention to COVID-19 as of September 2020, and 43 percent reported finding it difficult to find information (Associated Press/NORC 2020). When demand for information outpaces the supply of reliable information, it creates a data deficit, where, as Tommy Shane and Pedro Noel write in First Draft, “results exist but they are misleading, confusing, false, or otherwise harmful” (Shane and Noel 2020).

The fact that COVID-19 is caused by a new virus exacerbates these issues. Researchers and medical practitioners are still learning new things about the novel coronavirus, and public health recommendations are constantly evolving. While this is an unavoidable characteristic of the scientific process, exposure to conflicting health messages can not only create confusion but also lead people to distrust health recommendations (Nagler 2014). Researchers can most clearly define misinformation when there is clear expert consensus and a large body of concrete evidence (Vraga and Bode 2020a). COVID-19, especially in its early days, lacked both of these features, making misinformation harder to identify and thus harder to address.

How to confront an infodemic and correct misinformation.

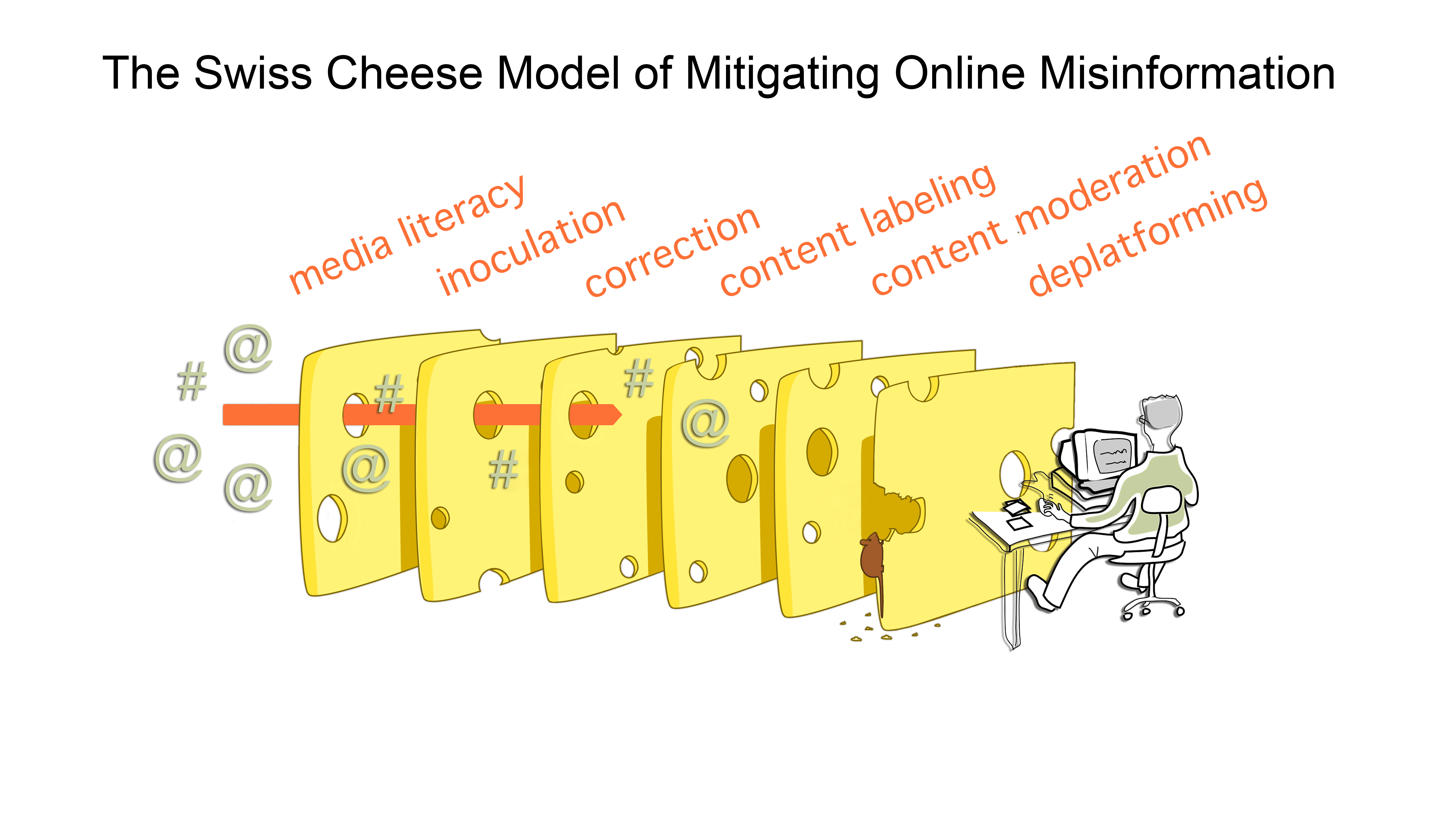

Given the scale of these overlapping problems, no single solution to the COVID-19 misinformation problem will do. Instead, much like the “Swiss cheese model” of layering defenses against COVID-19 itself—vaccines, masks, social distance, ventilation, etc. (Mackay 2020)—multiple overlapping misinformation interventions can help. As a recent Scientific American article put it, “every layer in the model—blocking on platforms, fact-checking, online engagement, and creation of a science-friendly community—has limitations. Each additional layer of defense, however, slows the advance of deceptions” (Hall Jamieson 2021). The answer is not correction, inoculation, media literacy, content moderation, or deplatforming alone; it is all of these things together.

Importantly, this also means that everyone has a role to play in mitigating the spread and impact of misinformation. To solve the misinformation problems of the COVID-19 infodemic, public health authorities need to promote clear and reliable information (Malecki, Keating, and Safdar 2021). Social media platforms can engage in content moderation (Myers West 2018) and content labeling (Clayton et al. 2020), as well as employing interventions to nudge (Pennycook et al. 2021) people away from misinformation and towards accurate information (Bode and Vraga 2015). Public officials need to depoliticize scientific issues and invest in education (Bolsen and Druckman 2015). And educators serve an important role in inoculating students against misinformation (Banas and Rains 2010) and engaging in media literacy efforts (Vraga, Tully, and Bode 2020).

While these are all important, the average social media user can play a part, too.

Across more than 10 studies, our research has consistently shown that even when anonymous social media users correct misinformation, they can reduce the misperceptions of the (sometimes large) audience of social media onlookers who witness the interaction (Vraga and Bode 2020b).

Why might this be?

First, social media fosters weak social ties as opposed to strong ones (De Meo et al. 2014). Weak ties tend to represent a more diverse group than people with whom someone has strong ties; compared to people someone sees and interacts with every day in offline life, these weak ties may be more likely to bring novel perspectives or information to a discussion (Granovetter 1973). In the context of misinformation, this could mean diverse social media contacts are better able to recognize misinformation and have the information needed to correct it, whereas closer ties might not have been exposed to that corrective information or might not believe it.

Second, the threaded nature of social media means that audiences see corrections essentially simultaneously with the misinformation. Research shows that the shorter the time between misinformation and correction, the more effective the correction is (Walter and Tukachinsky 2020). Essentially, misinformation has less of a chance to take hold in someone’s mind if it is immediately corrected.

Third, even just observing corrections on social media may remind people about the potential social or reputational cost of sharing misinformation (Altay, Hacquin, and Mercier 2020). No one likes being wrong—which is one reason why many people resist corrective efforts. Being corrected can cause people to engage in motivated reasoning (Kunda 1990) to explain away the threatening piece of information. In the context of misinformation, this sometimes means that people will not accept correction of misinformation that aligns with their worldview.

But the people witnessing the correction of someone else are less emotionally involved than the person being corrected, and may be more amenable to accepting the correction. They see the reputational cost being imposed on someone sharing misinformation (Altay, Hacquin, and Mercier 2020), which can reinforce existing societal norms that value accuracy.

Research consistently shows positive effects of this sort of intervention (Vraga and Bode 2020b). Everyday social media users therefore have a clear role to play in mitigating the negative effects of misinformation.

What’s the most effective way to correct misinformation?

First, expertise and trust both matter for corrections. That expertise can be personal or organizational—a well-known health organization is going to be more effective in responding to misinformation about health given its perceived expertise (Vraga and Bode 2017). But that expertise can also be borrowed, such as when users provide links to these credible and trusted sources (Vraga and Bode 2018). And trust might matter even more than expertise when it comes to correction (Guillory and Geraci 2013), meaning that close friends and family on social media may be especially well-positioned to correct misinformation (Margolin, Hannak, and Weber 2018).

Second, repetition can be important as well. Misinformation is often sticky in part because of its familiarity—and familiar information feels more credible (this is called the “illusory truth effect”) (Fazio et al. 2015). Corrections need to be as memorable as the misinformation they are addressing, and repetition can make correction more memorable in the same way it does for misinformation. This is especially important when corrections come from social media users. Multiple users should correct misinformation when they see it to emphasize that public support is behind the facts, not the falsehood (Vraga and Bode 2017). As part of this repetition, people should emphasize the correction itself, not the misinformation—which they should reference only to demonstrate exactly why and where the misinformation is wrong (Ecker, Hogan, and Lewandowsky 2017). Simply telling people what is wrong does not work as well as also telling people what is correct. For example, stating that a COVID-19 vaccine will not give a person COVID-19 is less convincing than explaining that current vaccines do not contain any live virus and therefore cannot make you ill with COVID-19 (CDC 2021).

Finally, corrections do not need to be confrontational or cruel in order to be effective (Bode, Vraga, and Tully 2020). Offering empathy and understanding as part of a response to misinformation is equally effective in reducing misperceptions and might make the interaction more palatable for everyone involved (Hyland-Wood et al. 2021).

While many people have expressed concerns about correcting others on social media (Tandoc Jr., Lim, and Ling 2020), overall people tend to appreciate and even like the idea of correction of misinformation on social media. Recent surveys we’ve conducted suggest majorities of the US public hold favorable attitudes towards user correction on social media, including a belief that it is part of the public’s responsibility to respond (Bode and Vraga 2020). That isn’t to say people are oblivious to the possible downsides of correction, including the possibility of trolling or confusion. But recognizing that a large percentage of the public says that they have corrected misinformation on social media and that correction is valuable should reassure everyone that correction is not a social taboo. Indeed, the bigger harm to reputation is likely to come from sharing misinformation, rather than from correcting it (Altay, Hacquin, and Mercier 2020).

To sum up, people think it’s valuable to correct each other on social media, and it works when people do it. As a result, we hope that future interventions can focus on how to increase the number of people engaging in correction and ensure that those who do are well informed. Efforts like #iamhere, which mobilize and empower users to counter hate speech and misinformation on social media, are one model for this sort of approach (iamhere international 2021).

Together, layering peer correction with other approaches including content moderation, media literacy, content labeling, inoculation, and deplatforming can help stem the tide of misinformation.

Keywords: 2024 election, COVID-19, conspiracy theories, corrections, infodemic, misinformation, public health, social media, vaccination

Topics: Disruptive Technologies